Mocks vs TestContainers

Ivan Ponomarev


Why Did I Decide to Make This Presentation?

I have been a user and enthusiast of the TestContainers library since 2016.

However, I quite often argue with engineers who consider TestContainers to be the ultimate solution to every problem.

I would like to share some insights based on my personal experience with integration testing in Java/Kotlin.

That said, the takeaways of this talk should be useful not just for Java/Kotlin developers.

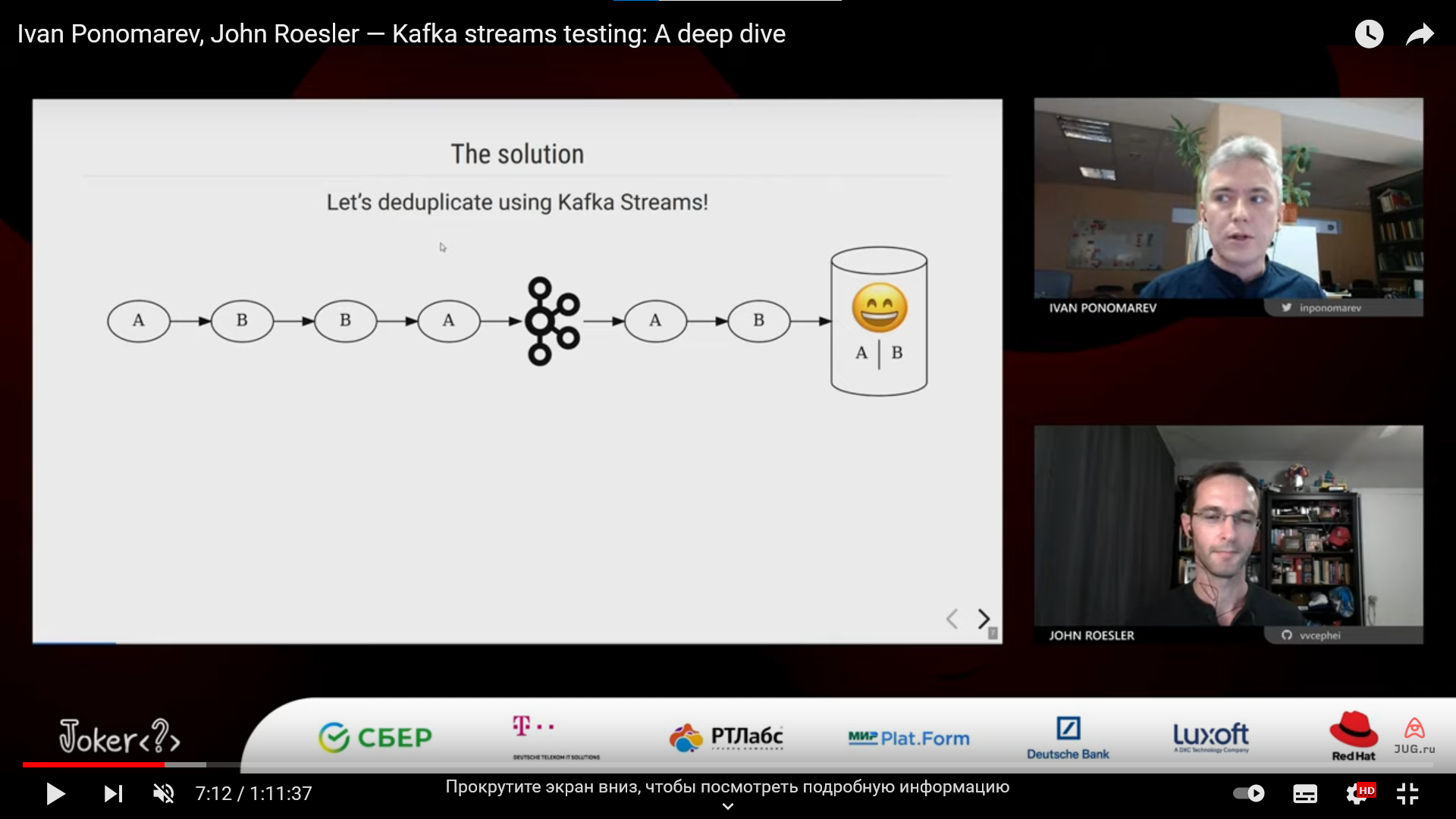

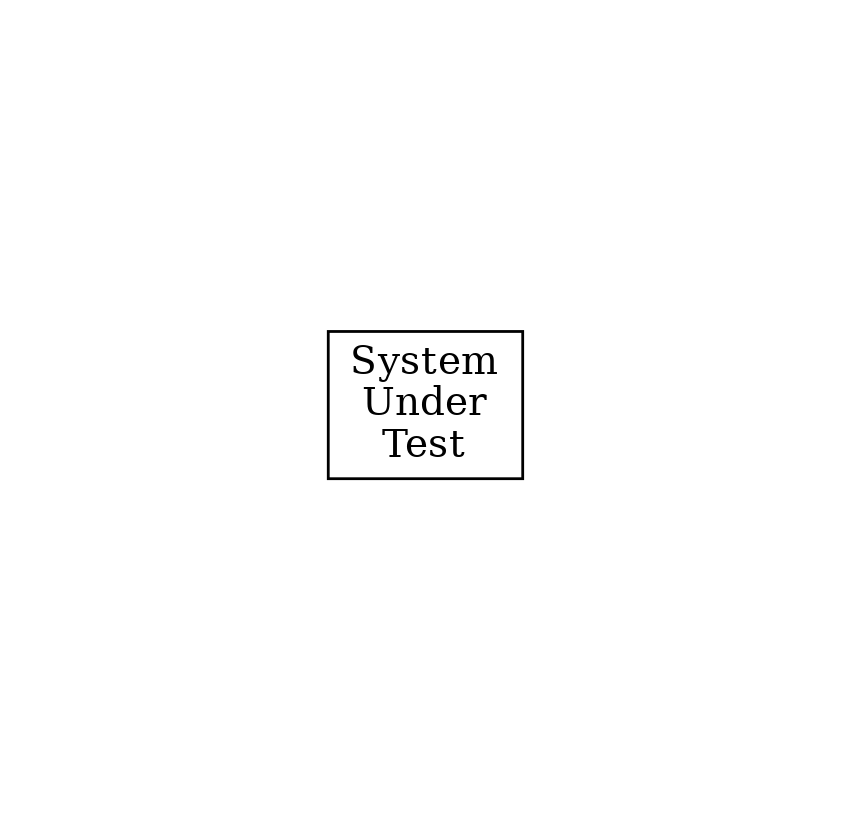

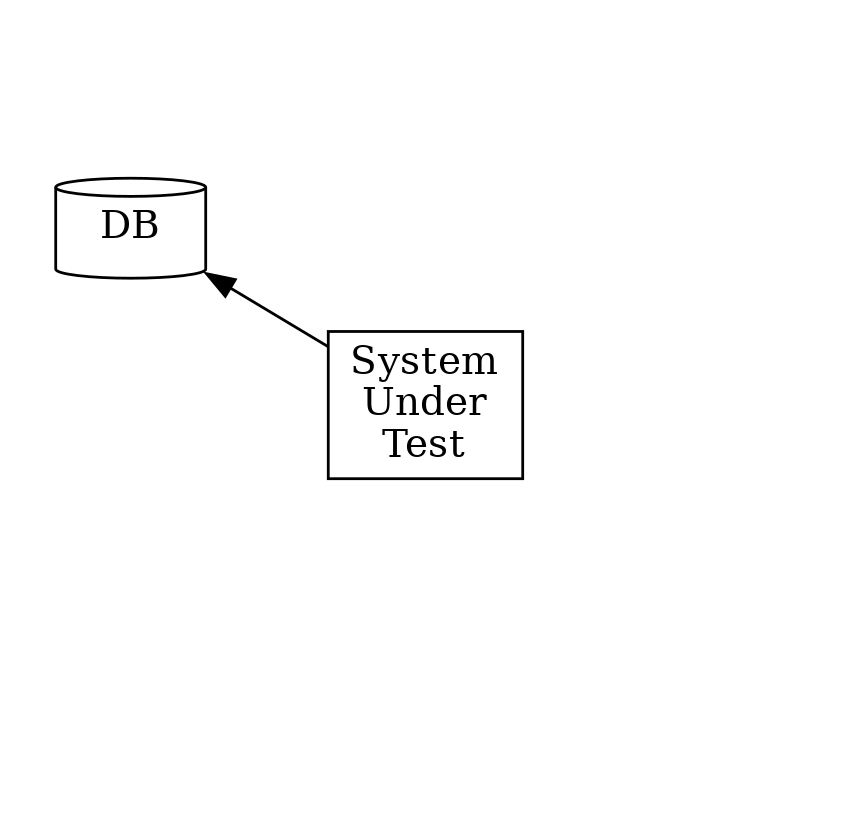

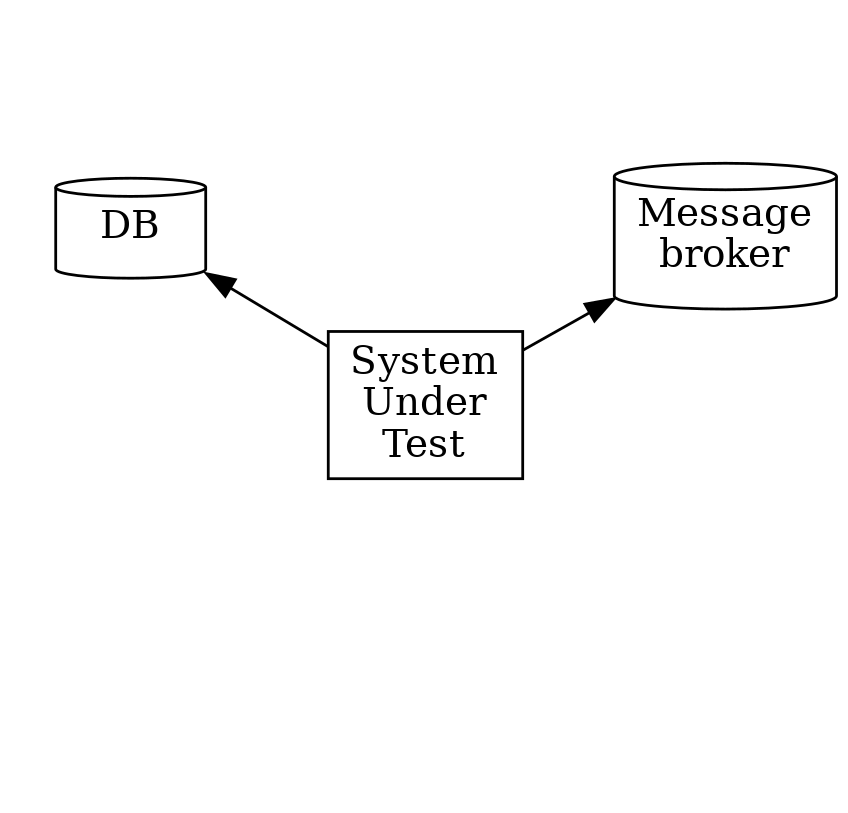

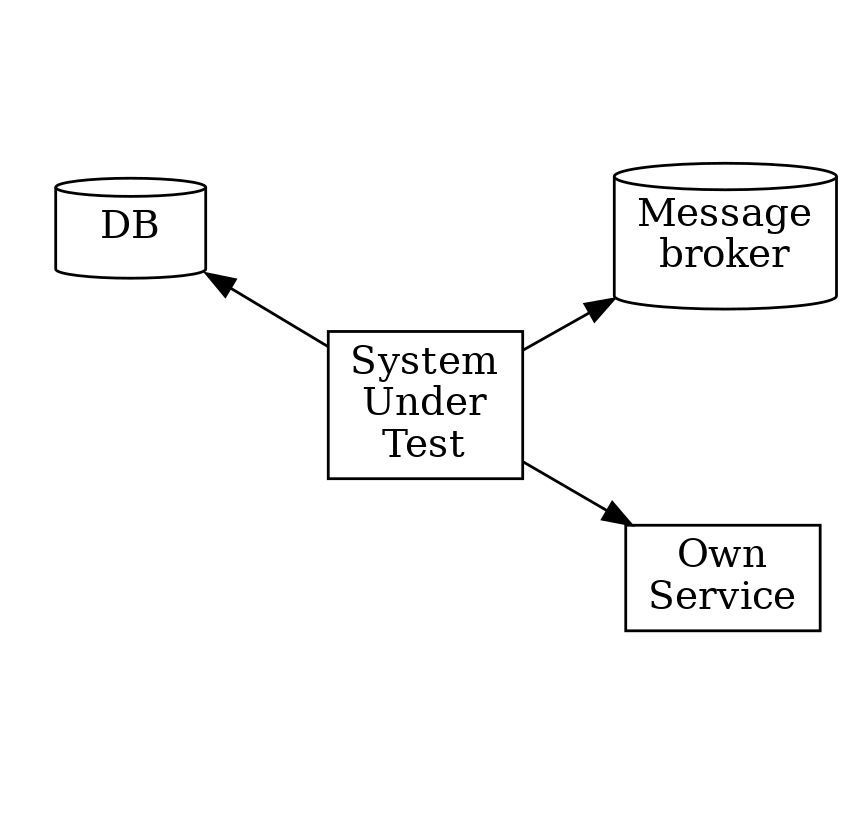

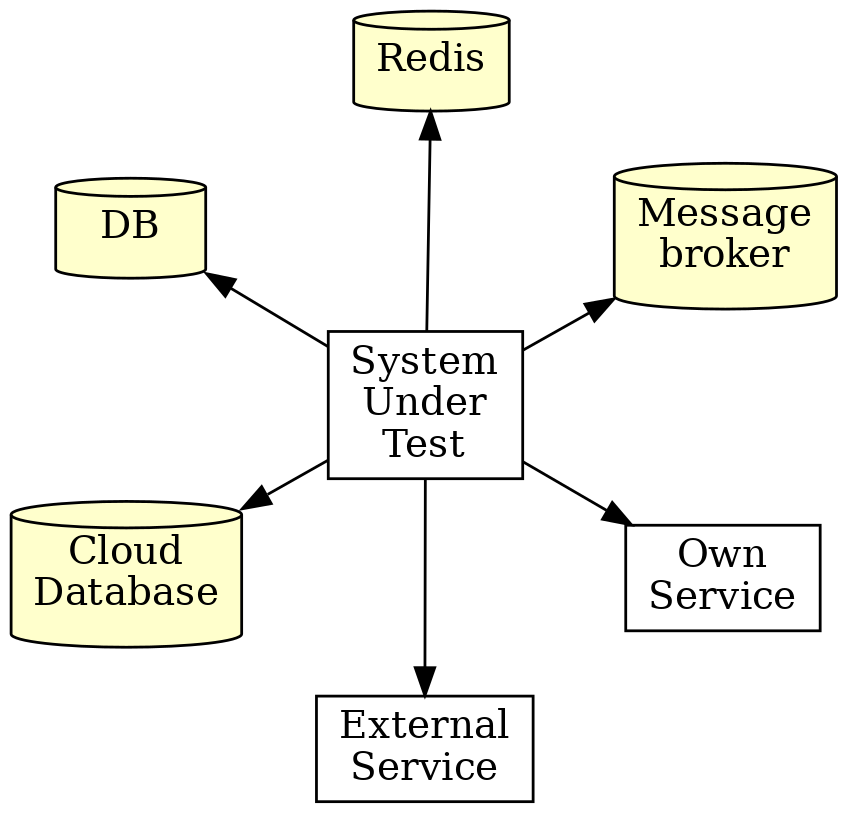

Modern integration test

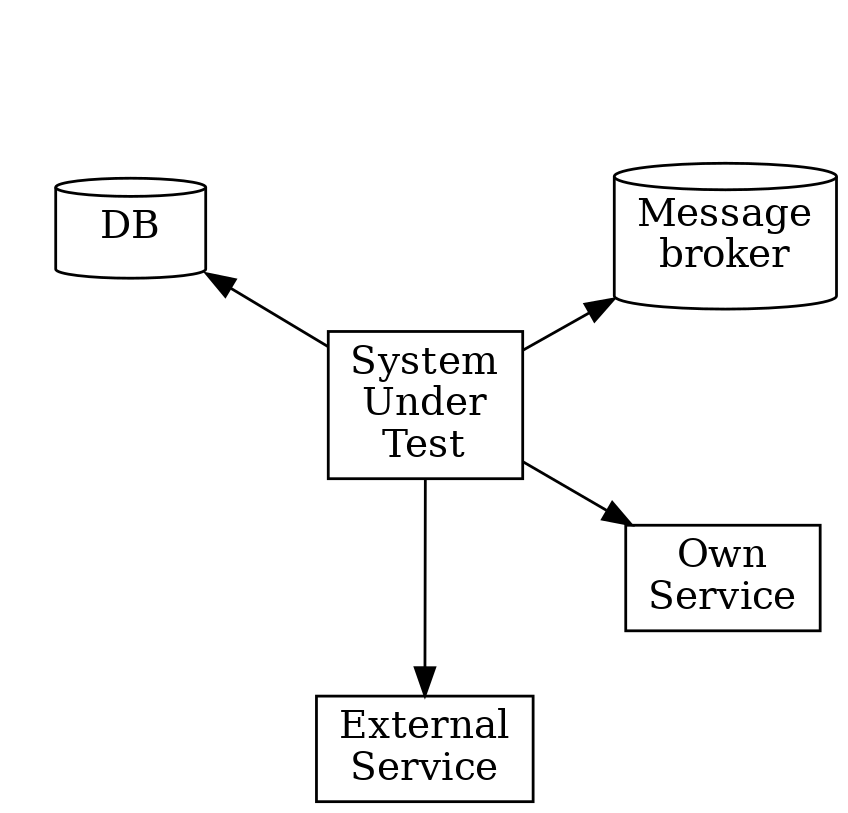


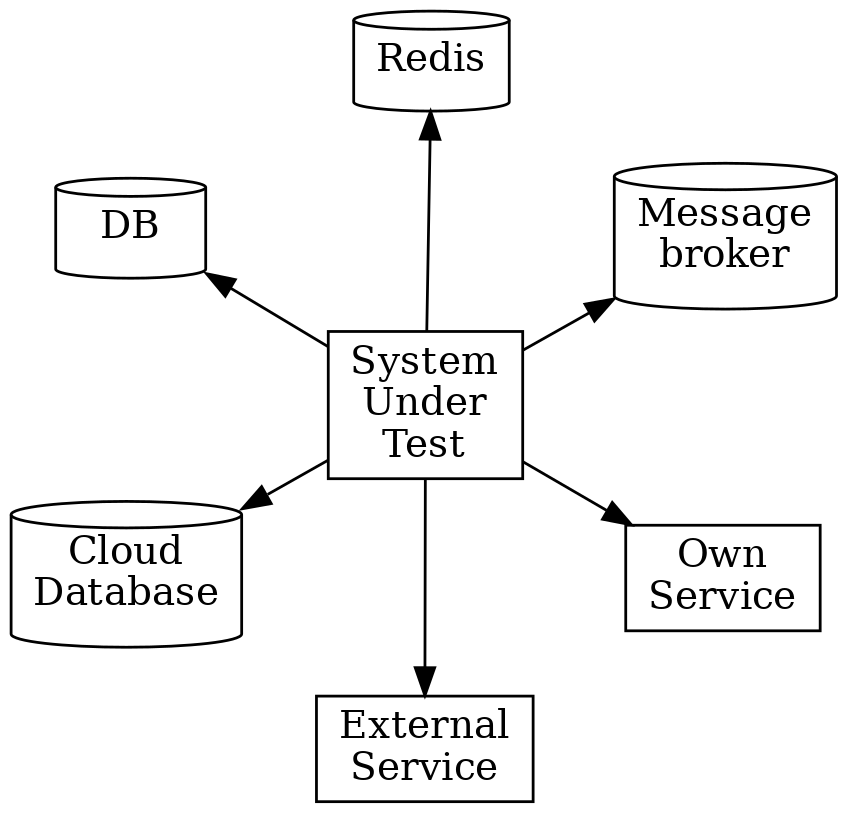

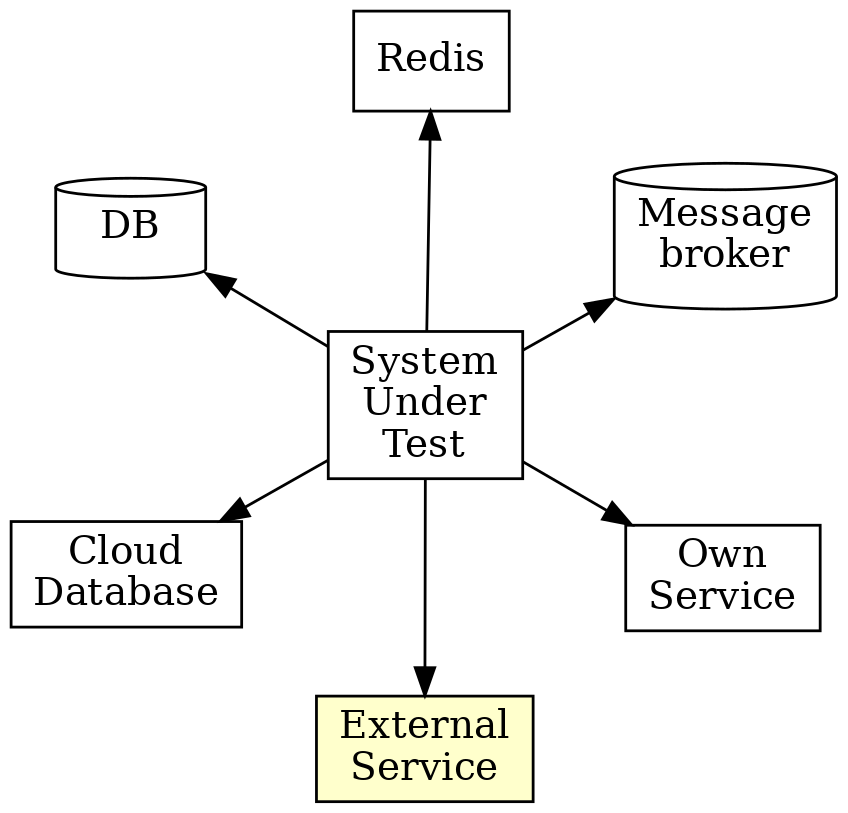


What do we have?

Mocks | Real Systems

Using mocks is like learning chemistry from cartoons…

Nothing beats a real experiment, though…

What do we have for Real Experiments?


"An open source framework for providing throwaway, lightweight instances of databases, message brokers, web browsers, or just about anything that can run in a Docker container."

TestContainers in action

Set of stereotypes

— Mocks are unreliable

— Let’s run everything in test containers and test it!

— Using an H2 database for testing is an outdated practice!

… we will address all of them later, but first let’s consider the case of external REST/gRPC services.

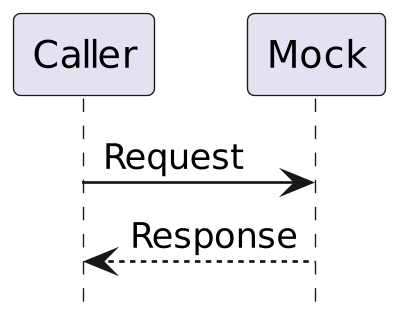

Mocks of external services


Mocks | Real Systems

The importance of proper testing of corner cases

Gojko Adzic, Humans vs Computers, 2017

"Let’s release from prisons everyone who has not committed serious crimes"

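try {
    murders = restClient.loadMurders();
} catch (IOException e) {
    logger.error("failed to load", e);
    // The bug: an outage is silently turned into "nobody has committed murder",
    // so everyone gets released
    murders = emptyList();
}

WireMock features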

Simulate a response from the service

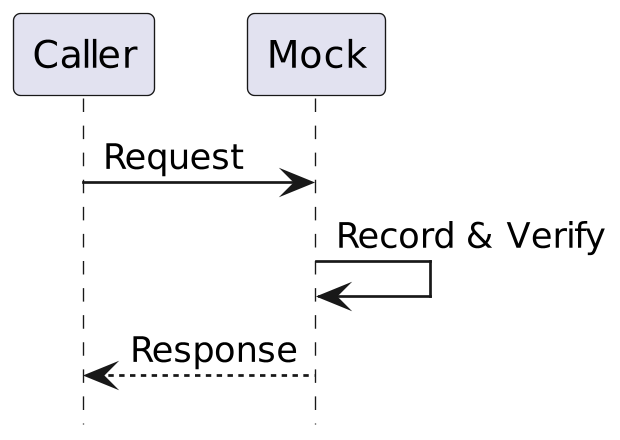


Verify calls to the service

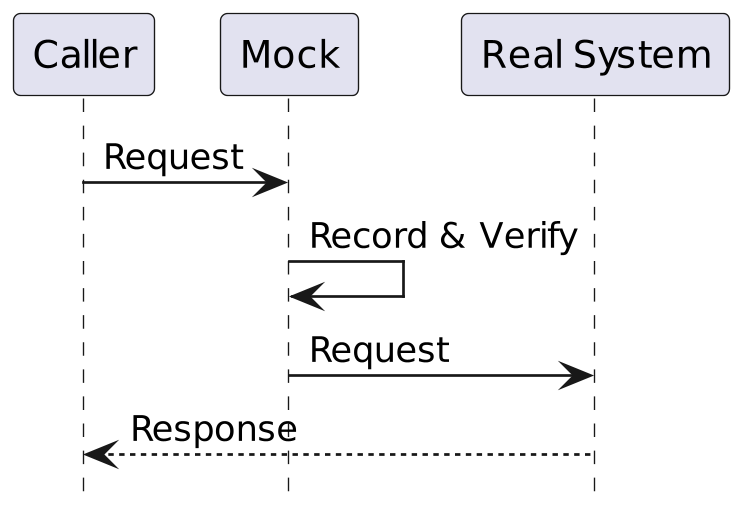
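WireMock's first two features, simulated responses and call verification, can be pictured with a hand-rolled stub. The sketch below uses only the JDK's built-in `com.sun.net.httpserver.HttpServer` and `java.net.http.HttpClient`. It is not WireMock's API, and `StubHttpServer` and `MurderRegistryClient` are hypothetical names. The stub answers with HTTP 503, which is exactly the corner case from the prisoner-release story. A correct client should fail loudly instead of reporting an empty list.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical WireMock-like stub built on the JDK's HttpServer (not WireMock's API):
// it serves one canned response and journals every request path.
class StubHttpServer implements AutoCloseable {
    private final HttpServer server;
    // Written by the server thread, read by the test thread
    final List<String> requestedPaths = new CopyOnWriteArrayList<>();

    StubHttpServer(String path, int status, String body) throws IOException {
        // Port 0: the OS picks a free port, so parallel tests do not collide
        server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext(path, exchange -> {
            requestedPaths.add(exchange.getRequestURI().getPath());
            byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
            // A length of -1 tells HttpServer that there is no response body
            exchange.sendResponseHeaders(status, bytes.length == 0 ? -1 : bytes.length);
            try (OutputStream os = exchange.getResponseBody()) {
                os.write(bytes);
            }
        });
        server.start();
    }

    String baseUrl() {
        return "http://127.0.0.1:" + server.getAddress().getPort();
    }

    @Override
    public void close() {
        server.stop(0);
    }
}

// Hypothetical client under test: an outage is an error, never "no murders"
class MurderRegistryClient {
    private final HttpClient http = HttpClient.newBuilder()
            .version(HttpClient.Version.HTTP_1_1)
            .build();
    private final String baseUrl;

    MurderRegistryClient(String baseUrl) {
        this.baseUrl = baseUrl;
    }

    String loadMurders() throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/murders")).build();
        HttpResponse<String> response = http.send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("registry unavailable: HTTP " + response.statusCode());
        }
        return response.body();
    }
}
```

With WireMock itself, the same test would define the canned response with `stubFor(...)` and check the journaled requests with `verify(...)`. WireMock also offers fault injection, such as `Fault.CONNECTION_RESET_BY_PEER`, which a hand-rolled stub like this one does not cover.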


"Spy" by intercepting calls to the real service

What about RDBMS/NoSQL/message brokers, etc.?

— They are too complicated to mock!

— Hurray for Testcontainers!

Mocks vs TestContainers: compatibility

Mocks | Testcontainers

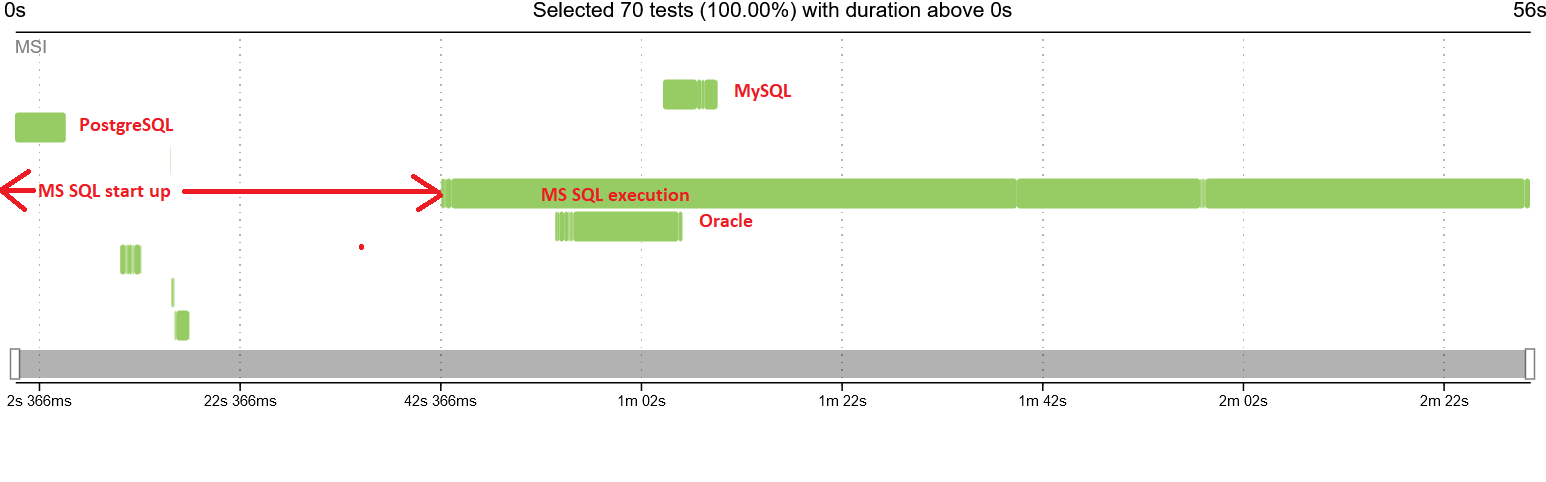

Mocks vs TestContainers: Ease of use and start-up speed

Mocks | Testcontainers

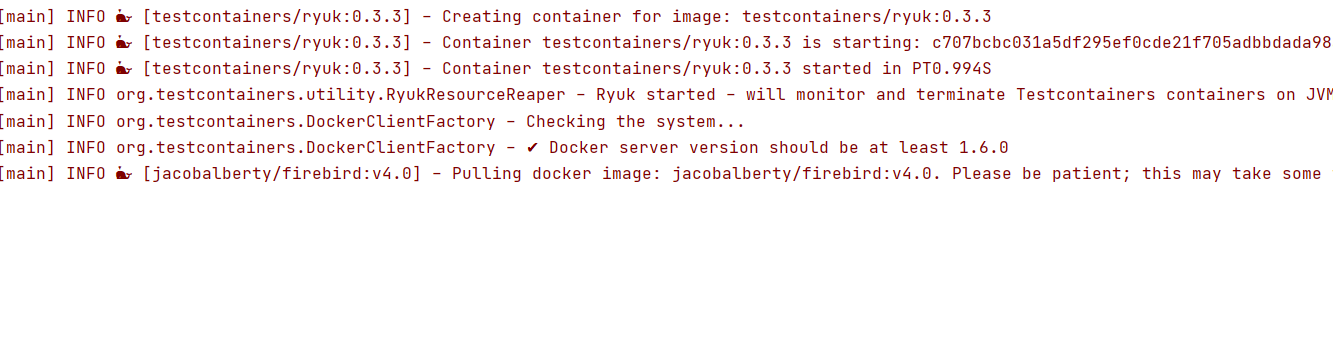

TestContainers startup time

The worst situation

"I have a laptop with an ARM-based CPU and the Docker image is not compatible!"

Testcontainers Cloud

Paid service

Requires a good Internet connection (won’t work on a train)

Convenience

Mocks | Testcontainers

Integration Mocks vs TestContainers

Mocks | Testcontainers

Availability

Mocks | Testcontainers

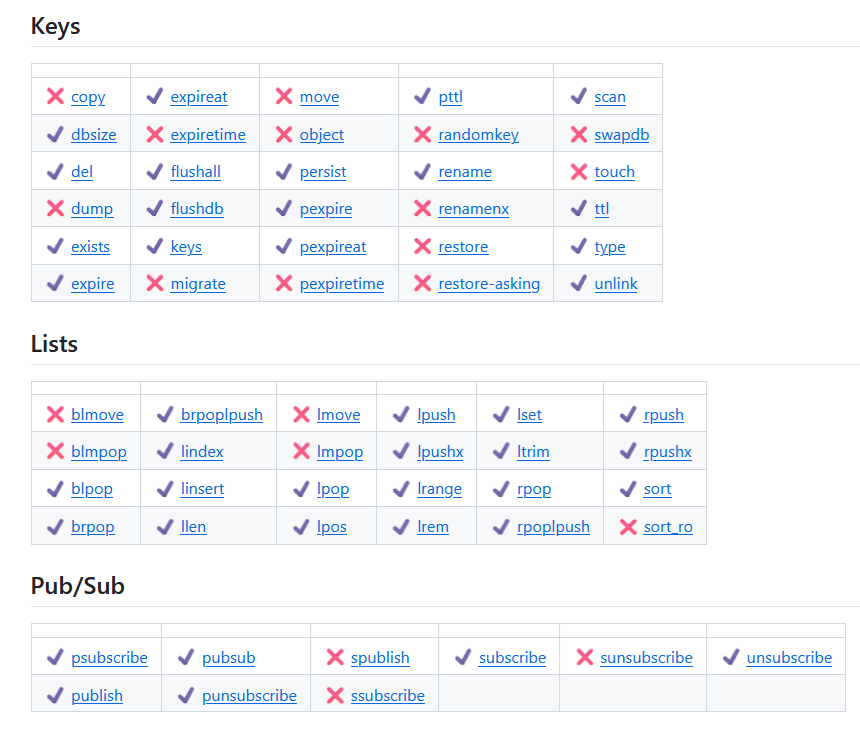

Story № 1. JedisMock and Call Verification

Mocks of Redis in various programming languages

Python | 240 stars

NodeJS | 210 stars

Java | 151 stars

JedisMock

Reimplementation of Redis in pure Java (works at the network protocol level)

As of April 2024, supports 153 out of 237 commands (about 65%)


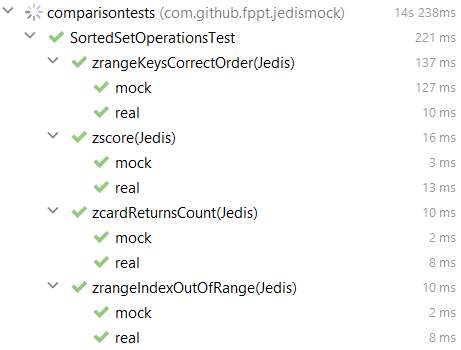

Tested with comparison tests (running identical scenarios on JedisMock and on containerized Redis)
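A comparison test writes each scenario once, runs it against both the reference system and the mock, and fails on any divergence. The minimal JDK-only sketch below assumes a hypothetical three-command `KeyValueStore` interface. In the real JedisMock suite the reference side is Redis in a container. Here it is just a second in-memory class, so that the harness itself stays runnable.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical contract shared by the reference system and the mock
interface KeyValueStore {
    String get(String key);              // null if the key is absent
    void set(String key, String value);
    boolean del(String key);             // true if a key was actually removed
}

// Stand-in for the reference implementation (for JedisMock: real Redis in a container)
class ReferenceStore implements KeyValueStore {
    private final Map<String, String> data = new TreeMap<>();
    public String get(String key) { return data.get(key); }
    public void set(String key, String value) { data.put(key, value); }
    public boolean del(String key) { return data.remove(key) != null; }
}

// Stand-in for the mock being validated
class MockStore implements KeyValueStore {
    private final Map<String, String> data = new HashMap<>();
    public String get(String key) { return data.get(key); }
    public void set(String key, String value) { data.put(key, value); }
    public boolean del(String key) { return data.remove(key) != null; }
}

final class Comparison {
    private Comparison() {}

    // A scenario is written once and records every observable result
    static List<Object> scenario(KeyValueStore store) {
        List<Object> trace = new ArrayList<>();
        store.set("a", "1");
        trace.add(store.get("a"));
        trace.add(store.del("a"));
        trace.add(store.del("a"));   // deleting a missing key
        trace.add(store.get("a"));   // reading a missing key
        return trace;
    }

    // Runs the scenario on fresh instances of both implementations and compares the traces
    static void assertSameBehavior(Function<KeyValueStore, List<Object>> scenario,
                                   Supplier<KeyValueStore> reference,
                                   Supplier<KeyValueStore> candidate) {
        List<Object> expected = scenario.apply(reference.get());
        List<Object> actual = scenario.apply(candidate.get());
        if (!expected.equals(actual)) {
            throw new AssertionError("reference gave " + expected + ", mock gave " + actual);
        }
    }
}
```

The scenarios worth writing are the edge cases, such as missing keys, wrong types and expirations. Those are exactly where a reimplementation tends to drift from the original.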


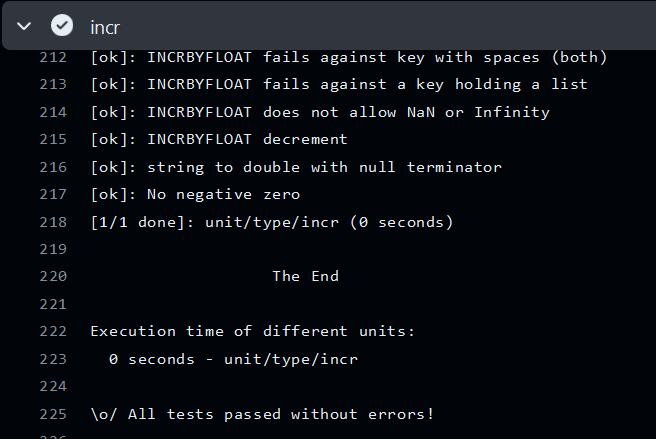

Also tested with a subset of Redis's native test suite written in Tcl (the tests used for regression testing of Redis itself)


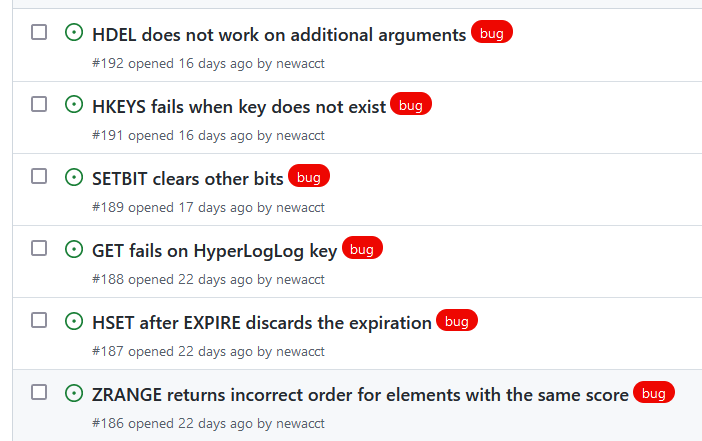

Still, users constantly report behavior that differs from real Redis (and it gets fixed quickly)

Why mock if we have TestContainers?

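GenericContainer redis = new GenericContainer(
        DockerImageName.parse("redis:5.0.3-alpine"))
    .withExposedPorts(6379);
// The container must be running before its host and mapped port are queried
// (with JUnit 5, @Testcontainers + @Container start it automatically)
redis.start();
String address = redis.getHost();
Integer port = redis.getFirstMappedPort();
underTest = new RedisBackedCache(address, port);

JedisMock is a regular Maven dependency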

// build.gradle.kts
testImplementation("com.github.fppt:jedis-mock:1.1.1")

// This binds the mock Redis server to a random port
RedisServer server = RedisServer
        .newRedisServer()
        .start();
// Jedis connection:
Jedis jedis = new Jedis(server.getHost(), server.getBindPort());
// Lettuce connection:
RedisClient redisClient = RedisClient
        .create(String.format("redis://%s:%s",
                server.getHost(), server.getBindPort()));

RedisCommandInterceptor: explicitly specified response

RedisServer server = RedisServer.newRedisServer()
        .setOptions(ServiceOptions.withInterceptor((state, cmd, params) -> {
            if ("get".equalsIgnoreCase(cmd)) {
                // explicitly specify the response
                return Response.bulkString(Slice.create("MOCK_VALUE"));
            } else {
                // delegate to the mock
                return MockExecutor.proceed(state, cmd, params);
            }
        })).start();

RedisCommandInterceptor: verification

RedisServer server = RedisServer.newRedisServer()
        .setOptions(ServiceOptions.withInterceptor((state, cmd, params) -> {
            if ("echo".equalsIgnoreCase(cmd)) {
                // check the request
                assertEquals("hello", params.get(0).toString());
            }
            // delegate to the mock
            return MockExecutor.proceed(state, cmd, params);
        })).start();

RedisCommandInterceptor: failure simulation

RedisServer server = RedisServer.newRedisServer()

.setOptions(ServiceOptions.withInterceptor((state, cmd, params) -> {

if ("echo".equalsIgnoreCase(cmd)) {

//simulate a failure

return MockExecutor.breakConnection(state);

} else {

//delegate to the mock

return MockExecutor.proceed(state, cmd, params);

}

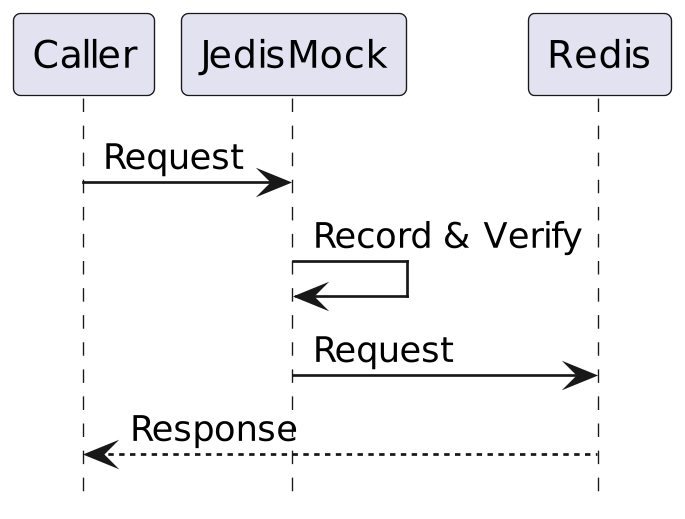

})).start();Working as a Test Proxy

Conclusions on JedisMock

For most Redis testing tasks, TestContainers works.

But if you want to verify the behavior of your own system, or study it in situations where Redis itself fails, JedisMock is helpful.
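The three interceptor use cases shown earlier (a canned response, request verification and failure simulation) follow one pattern. A hook sees each command first and either answers it itself or delegates to the default executor. The sketch below reproduces that pattern on a hypothetical in-memory store. `MiniKvMock`, `Interceptor` and `CommandExecutor` are illustrative names, not JedisMock's API, and a thrown exception stands in for a broken connection.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

// Hypothetical names throughout; this is not JedisMock's API.
// The default behavior of the mock:
interface CommandExecutor {
    String execute(String cmd, List<String> params);
}

// The hook: sees every command first, may answer it or delegate via `next`
interface Interceptor {
    String intercept(String cmd, List<String> params, CommandExecutor next);
}

class MiniKvMock implements CommandExecutor {
    private final Map<String, String> data = new HashMap<>();
    private final Interceptor interceptor;

    MiniKvMock(Interceptor interceptor) {
        this.interceptor = interceptor;
    }

    // Entry point used by the code under test
    String send(String cmd, String... params) {
        return interceptor.intercept(cmd, List.of(params), this);
    }

    // A tiny subset of a Redis-like command set
    @Override
    public String execute(String cmd, List<String> params) {
        switch (cmd.toLowerCase(Locale.ROOT)) {
            case "set":
                data.put(params.get(0), params.get(1));
                return "OK";
            case "get":
                return data.get(params.get(0));
            case "echo":
                return params.get(0);
            default:
                throw new UnsupportedOperationException("unsupported command: " + cmd);
        }
    }
}
```

For instance, `new MiniKvMock((cmd, params, next) -> "get".equalsIgnoreCase(cmd) ? "MOCK_VALUE" : next.execute(cmd, params))` answers every GET with a canned value and passes everything else through. This is the same design choice as in the JedisMock examples: the test overrides only the commands it cares about.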

Story №2. Kafka Streams TopologyTestDriver and the Hell of Asynchronous Testing

Kafka Streams testing: possible options


TopologyTestDriver

Simple (just a regular Maven dependency)

Fast

Convenient (good API for Arrange and Assert)

The Main Difference:

TopologyTestDriver | Real Kafka

Works synchronously (single thread and event loop) | Works asynchronously in multiple threads on multiple containers
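Why does the synchronous model make tests so easy to write? A toy driver shows the idea. Each input is processed to completion on the caller's thread, so the output can be read immediately, with no polling and no timeouts. `ToySyncDriver` is hypothetical. Only the `pipeInput`/`readValuesToList` method names echo TopologyTestDriver's test topics, and none of Kafka Streams' real semantics (state stores, caching, punctuation) are modeled.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// Hypothetical toy driver (not the Kafka Streams API): each input is processed
// to completion on the caller's thread, so output is ready the moment pipeInput returns.
class ToySyncDriver {
    private final Function<String, List<String>> processor;   // one input -> zero or more outputs
    private final List<String> output = new ArrayList<>();

    ToySyncDriver(Function<String, List<String>> processor) {
        this.processor = processor;
    }

    void pipeInput(String value) {
        output.addAll(processor.apply(value));   // synchronous: no threads, no waiting
    }

    // Reading drains what has been produced so far
    List<String> readValuesToList() {
        List<String> result = new ArrayList<>(output);
        output.clear();
        return result;
    }
}
```

Because nothing runs in the background, "the output is empty" is a definitive answer here. On a real cluster it only means "nothing has arrived yet".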

Thought Experiment: Limitations of Asynchronous Tests

We send "ping" and expect the system to return a single response "pong".

2 seconds. No response.

3 seconds. No response.

4 seconds. "pong". Are we done?

5 seconds. Silence.

6 seconds. Silence.

7 seconds. "boom!"

The Problem with Polling

// 5 seconds?? maybe 6? maybe 4?
ConsumerRecords<String, String> records;
while (!(records =
        consumer.poll(Duration.ofSeconds(5))).isEmpty()) {
    for (ConsumerRecord<String, String> rec : records) {
        values.add(rec.value());
    }
}

Fundamental problem: is this the final result, or have we not waited long enough?
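The ping/pong/boom experiment can be reproduced with a JDK `BlockingQueue` standing in for the output topic. This is a hypothetical setup with no Kafka involved. The loop mirrors the one above: it keeps reading until a poll comes back empty. A 100 ms quiet period yields `[pong]`, yet `"boom!"` arrives later. A longer timeout only moves the problem.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

class PollingPitfall {
    // Same shape as the loop above: keep reading until one poll comes back empty
    static List<String> drainUntilQuiet(BlockingQueue<String> topic, long quietMillis)
            throws InterruptedException {
        List<String> values = new ArrayList<>();
        String value;
        while ((value = topic.poll(quietMillis, TimeUnit.MILLISECONDS)) != null) {
            values.add(value);
        }
        return values;
    }

    // Hypothetical system under test: answers "pong" at once and "boom!" after lateMillis
    static BlockingQueue<String> startSystem(long lateMillis) {
        BlockingQueue<String> topic = new LinkedBlockingQueue<>();
        topic.add("pong");
        Thread late = new Thread(() -> {
            try {
                Thread.sleep(lateMillis);
                topic.add("boom!");
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        late.setDaemon(true);   // do not keep the JVM alive
        late.start();
        return topic;
    }

    public static void main(String[] args) throws InterruptedException {
        BlockingQueue<String> topic = startSystem(500);
        // A 100 ms quiet period "proves" that the answer is just [pong]...
        System.out.println(drainUntilQuiet(topic, 100));     // prints [pong]
        // ...but the system was not done yet
        System.out.println(topic.poll(2, TimeUnit.SECONDS)); // prints boom!
    }
}
```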

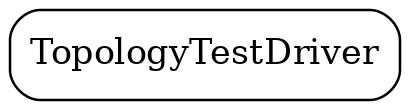

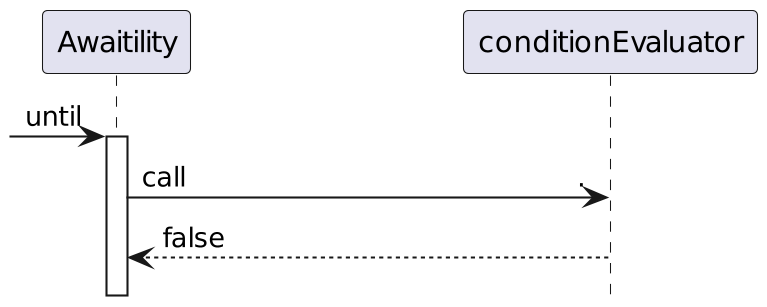

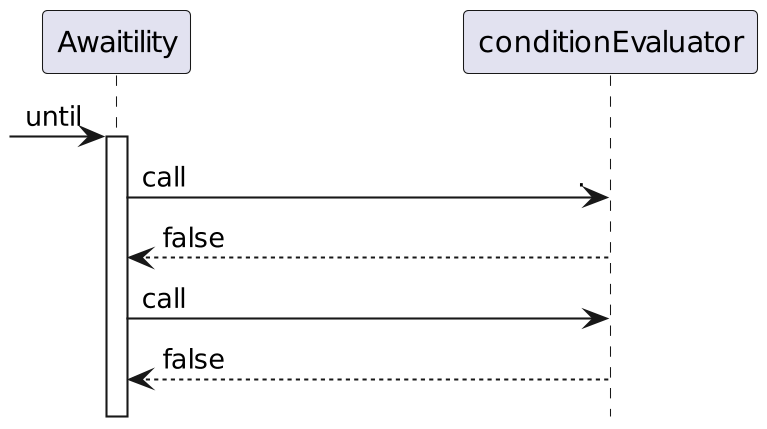

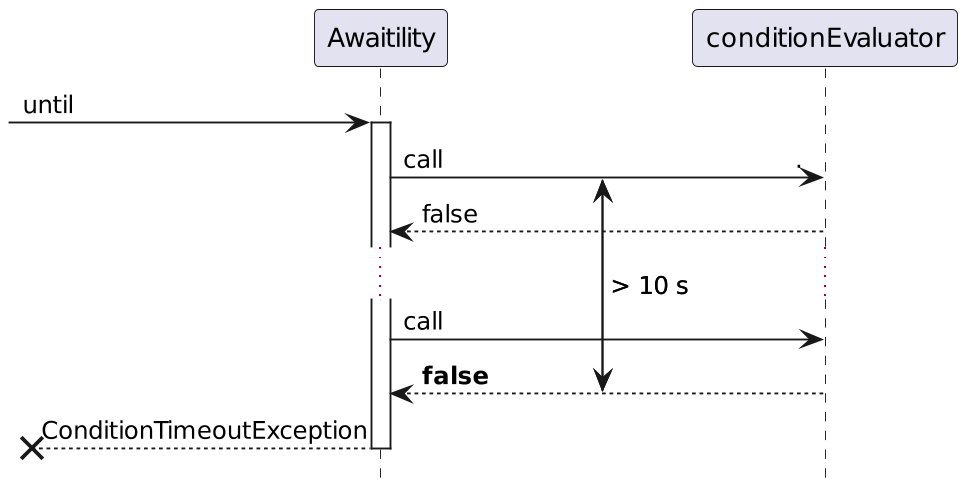

Awaitility: A partial solution to the problem with asynchronous testing

Awaitility.await().atMost(10, SECONDS).until(() -> {
    // returns true: the test proceeds immediately
    return conditionMet(); // conditionMet() is a placeholder for the actual check
});

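Awaitility: Test failure

Awaitility.await().atMost(10, SECONDS).until(() -> {
    // returns false for more than 10 seconds:
    // the call throws ConditionTimeoutException and the test fails
    return conditionMet(); // conditionMet() is a placeholder for the actual check
});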
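Conceptually, an await-until helper is a loop that re-checks the condition at a poll interval and gives up at a deadline. The minimal sketch below shows that loop. It is not Awaitility's implementation, and `Await.until` is a hypothetical name. It returns as soon as the condition holds and throws `TimeoutException` otherwise, which correspond to the passing and failing cases above.

```java
import java.time.Duration;
import java.util.concurrent.Callable;
import java.util.concurrent.TimeoutException;

// Minimal sketch of an await-until loop (not Awaitility's implementation)
final class Await {
    private Await() {}

    static void until(Callable<Boolean> condition, Duration atMost, Duration pollInterval)
            throws Exception {
        long deadline = System.nanoTime() + atMost.toNanos();
        while (true) {
            if (Boolean.TRUE.equals(condition.call())) {
                return;   // the condition holds: proceed immediately
            }
            long remainingNanos = deadline - System.nanoTime();
            if (remainingNanos <= 0) {
                throw new TimeoutException("condition not met within " + atMost);
            }
            // Sleep for one poll interval, but never far past the deadline
            long remainingMillis = Math.max(1, remainingNanos / 1_000_000);
            Thread.sleep(Math.min(pollInterval.toMillis(), remainingMillis));
        }
    }
}
```

The fast path is why such tests are quick when the system behaves. The deadline is the arbitrary number that still has to be chosen by hand.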

Awaitility DSL Capabilities

atLeast (should not happen earlier)

atMost (should happen before expiration)

during (should occur throughout the interval)

poll interval:

fixed (1, 1, 1, 1…)

Fibonacci (1, 2, 3, 5…)

exponential (1, 2, 4, 8…)
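The three poll-interval strategies can be written as simple delay generators. This is an illustrative sketch, not Awaitility's own poll-interval classes, and it uses the sequences given above (Fibonacci starting 1, 2, 3, 5).

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative delay generators (not Awaitility's API); delays are in an arbitrary unit
final class PollIntervals {
    private PollIntervals() {}

    // fixed: 1, 1, 1, 1, ...
    static List<Long> fixed(long interval, int n) {
        List<Long> delays = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            delays.add(interval);
        }
        return delays;
    }

    // Fibonacci: 1, 2, 3, 5, 8, ...
    static List<Long> fibonacci(int n) {
        List<Long> delays = new ArrayList<>();
        long a = 1, b = 2;
        for (int i = 0; i < n; i++) {
            delays.add(a);
            long next = a + b;
            a = b;
            b = next;
        }
        return delays;
    }

    // exponential: start, 2*start, 4*start, ...
    static List<Long> exponential(long start, int n) {
        List<Long> delays = new ArrayList<>();
        long d = start;
        for (int i = 0; i < n; i++) {
            delays.add(d);
            d *= 2;
        }
        return delays;
    }

    // Total time covered by a sequence of polls
    static long totalWait(List<Long> delays) {
        return delays.stream().mapToLong(Long::longValue).sum();
    }
}
```

Growing intervals check often at first, when a fast answer is likely, and back off on slow runs. With a 100 ms unit, five fixed polls span 500 ms, while five Fibonacci polls span 1.9 s with the same number of checks.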

Awaitility speeds up asynchronous tests, but it does not overcome their fundamental problem.

Meanwhile, in browser automation…

Modern frameworks such as Selenide or Playwright provide implicit waits for conditions to be met.

Problems with Awaitility

Arbitrary choice of waiting times leads to flakiness

We step onto the slippery path of concurrent Java programming
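Most of that slippery ground is lifecycle bookkeeping around a background consumer. The JDK-only sketch below isolates it, with a `BlockingQueue` standing in for the Kafka consumer. This is an assumption for illustration, and `BackgroundConsumer.awaitValues` is a hypothetical helper. It uses a thread-safe result list and cooperative termination on interrupt. Its `finally` block cancels the task, shuts the executor down, and only then waits for termination.

```java
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

// JDK-only sketch of the background-consumer pattern (hypothetical helper)
final class BackgroundConsumer {
    private BackgroundConsumer() {}

    // Returns true if exactly `expected` has been consumed within timeoutMillis
    static boolean awaitValues(BlockingQueue<String> topic, List<String> expected, long timeoutMillis)
            throws InterruptedException {
        // Written by the consumer thread, read by the test thread: must be thread-safe
        List<String> actual = new CopyOnWriteArrayList<>();
        ExecutorService service = Executors.newSingleThreadExecutor();
        Future<?> consumingTask = service.submit(() -> {
            // Cooperative termination: stop once cancel(true) sets the interrupt flag
            while (!Thread.currentThread().isInterrupted()) {
                try {
                    String value = topic.poll(100, TimeUnit.MILLISECONDS);
                    if (value != null) {
                        actual.add(value);
                    }
                } catch (InterruptedException e) {
                    return;   // interrupted while blocked in poll
                }
            }
        });
        try {
            long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(timeoutMillis);
            while (System.nanoTime() < deadline) {
                if (expected.equals(actual)) {
                    return true;
                }
                Thread.sleep(10);
            }
            return expected.equals(actual);
        } finally {
            // Always stop the consumer, even if the wait failed
            consumingTask.cancel(true);
            service.shutdown();   // without this, awaitTermination just waits out its timeout
            service.awaitTermination(1, TimeUnit.SECONDS);
        }
    }
}
```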

Real Test with Awaitility: Part 1

// This must be a thread-safe data structure!
List<String> actual = new CopyOnWriteArrayList<>();
ExecutorService service = Executors.newSingleThreadExecutor();
Future<?> consumingTask = service.submit(() -> {
    // We must take cooperative termination into account!
    while (!Thread.currentThread().isInterrupted()) {
        ConsumerRecords<String, String> records =
                consumer.poll(Duration.ofMillis(100));
        for (ConsumerRecord<String, String> rec : records) {
            actual.add(rec.value());
        }
    }
});

Real Test with Awaitility: Part 2

try {
    Awaitility.await().atMost(5, SECONDS)
            .until(() -> List.of("A", "B").equals(actual));
} finally {
    // We should not forget to finalize the execution
    // even in case of errors!
    consumingTask.cancel(true);
    // awaitTermination returns early only after shutdown() has been requested
    service.shutdown();
    service.awaitTermination(200, MILLISECONDS);
}

Test with TopologyTestDriver

List<String> values = outputTopic.readValuesToList();
Assertions.assertEquals(List.of("A", "B"), values);

What’s the pitfall?

The synchronous nature and the lack of caching lead to differences in behavior.

It’s possible to construct simple code examples that are "green" on TTD but operate completely incorrectly on a real cluster (see https://www.confluent.io/blog/testing-kafka-streams/).

Conclusions on Kafka Streams:

TopologyTestDriver (TTD) remains essential, despite its limitations.

Understand that TTD may not fully mimic the behavior of a real Kafka cluster.

A failure in TTD indicates problems in the code; however, passing tests in TTD do not guarantee that the code is correct.

Conduct a limited number of tests on a containerized Kafka cluster when necessary.

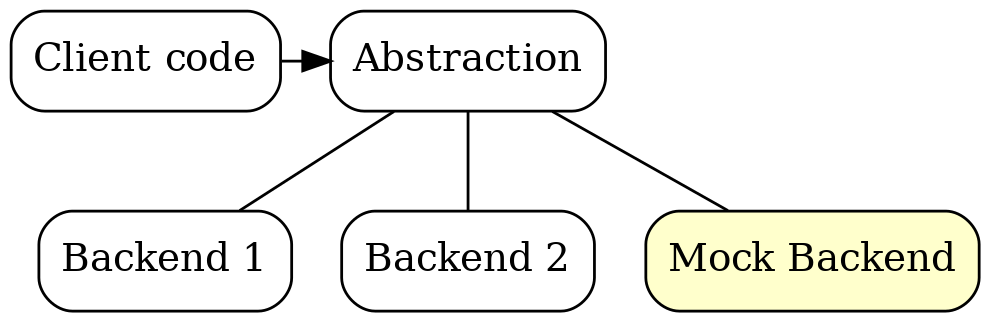

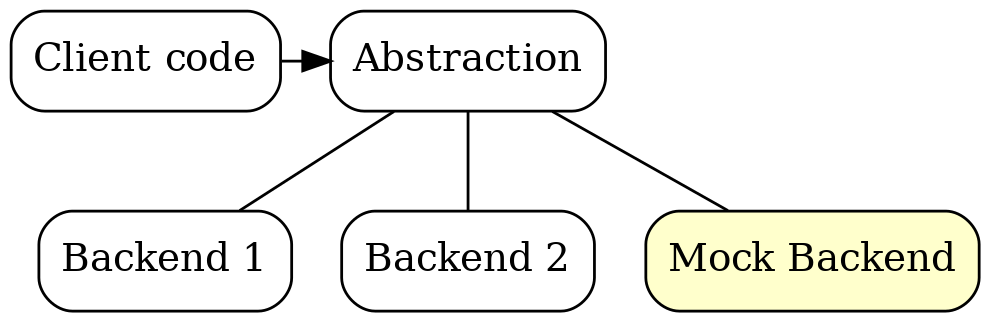

Story №3. Mock as One of the Supported Backends

Apache Beam

Apache Beam is a unified programming model to define and execute data processing pipelines, including ETL, batch and stream (continuous) processing.

SDKs: Java, Python, Go

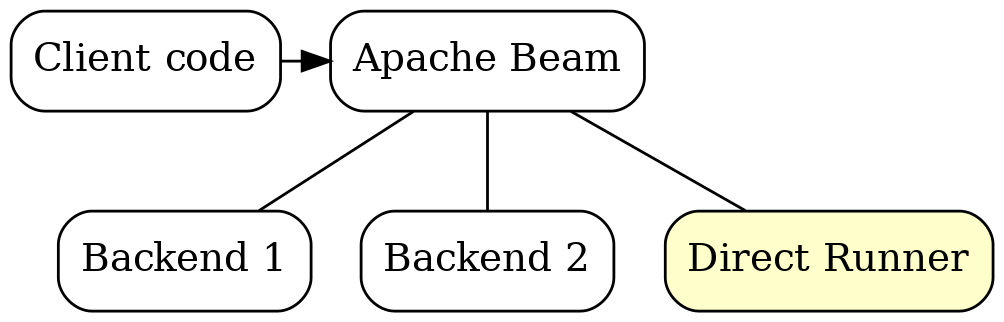

Apache Beam Runners


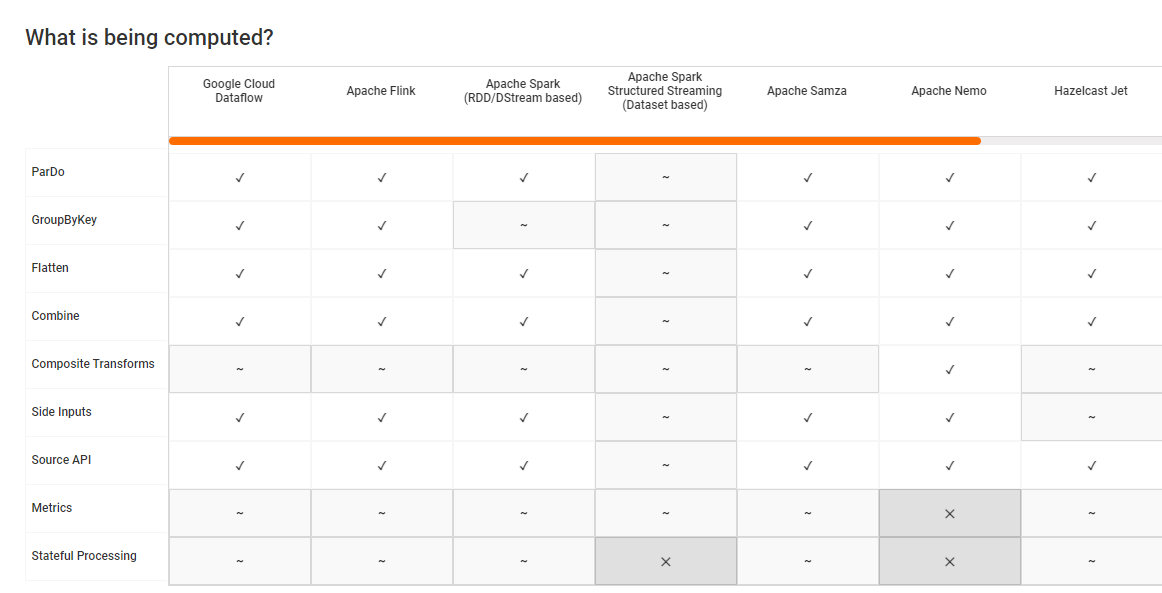

Sets of Functional Capabilities

Matrix of Supported Features (Fragment)

Direct Runner

"Direct Runner performs additional checks to ensure that users do not rely on semantics that are not guaranteed by the model… Using the Direct Runner helps ensure that pipelines are robust across different Beam runners."

Apache Beam’s Direct Runner

Failure on Direct Runner means the code is bad.

Successful execution on Direct Runner doesn’t mean the code is good.

How to test Google Cloud Dataflow without Google Cloud is beyond me.
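A strict runner can add a check like this: feed the same elements in several shuffled orders and fail if the result changes, because a distributed runner may deliver them in any order. Below is a hypothetical JDK-only sketch in that spirit. `OrderInsensitivityCheck` is not part of Beam. With it, a sum passes, while taking "the first element" is caught.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Objects;
import java.util.Random;
import java.util.function.Function;

// Hypothetical strict harness (not part of Beam): a transform must not depend on
// input order, so the same elements are replayed in several shuffled orders.
final class OrderInsensitivityCheck {
    private OrderInsensitivityCheck() {}

    static <T, R> R runStrict(List<T> input, Function<List<T>, R> transform, int rounds, long seed) {
        R expected = transform.apply(new ArrayList<>(input));
        Random random = new Random(seed);   // fixed seed: failures are reproducible
        for (int i = 0; i < rounds; i++) {
            List<T> shuffled = new ArrayList<>(input);
            Collections.shuffle(shuffled, random);
            R actual = transform.apply(shuffled);
            if (!Objects.equals(expected, actual)) {
                throw new IllegalStateException(
                        "result depends on input order: " + expected + " vs " + actual);
            }
        }
        return expected;
    }
}
```

The asymmetry is the same as with the Direct Runner. Failing this check proves the code relies on something it should not. Passing it says nothing about other differences between runners.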

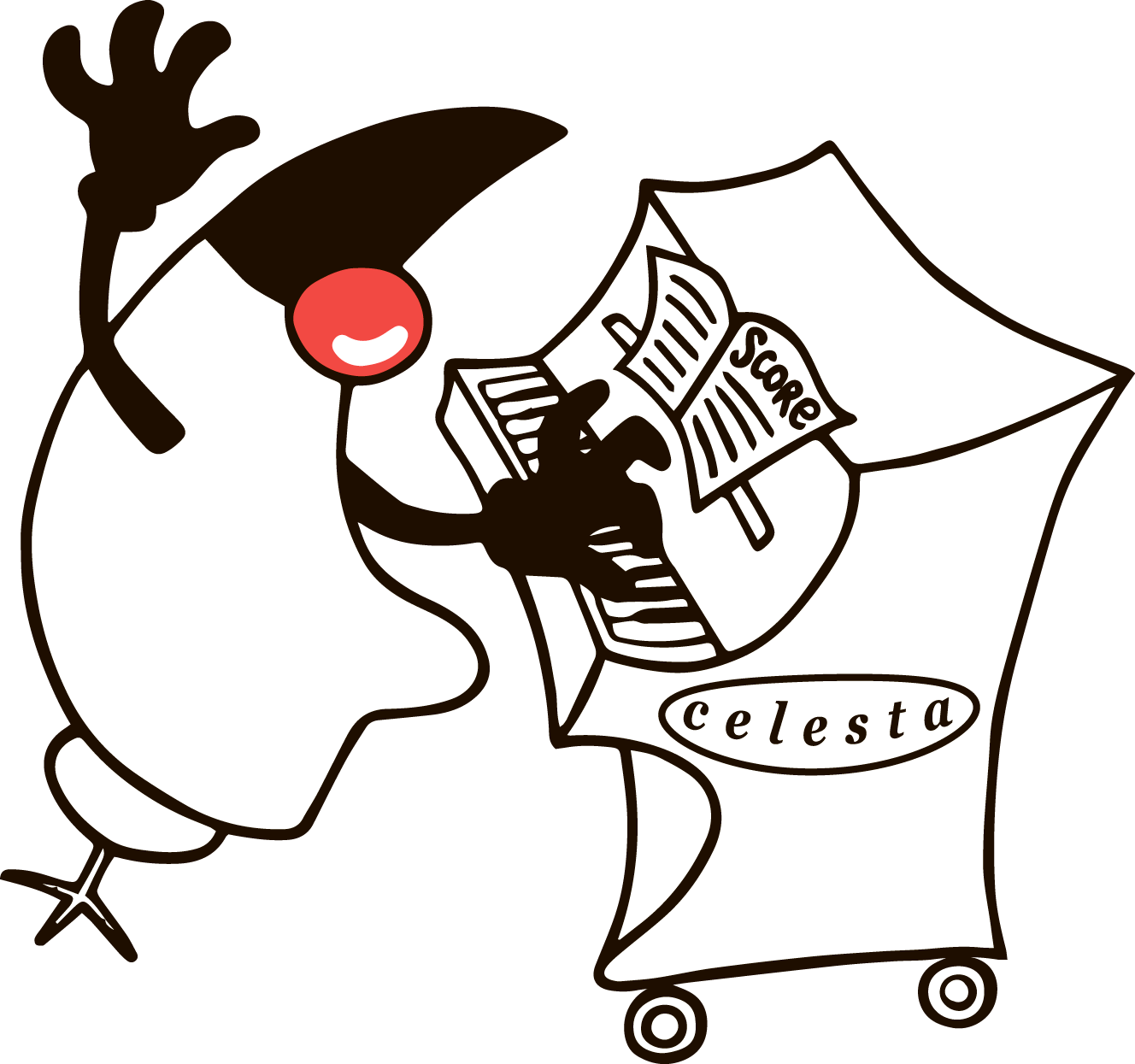

Celesta


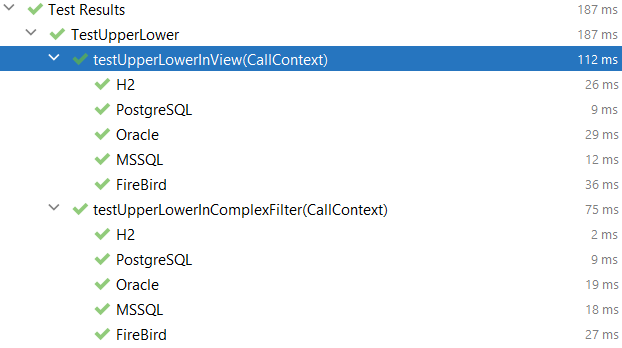

Celesta Comparison Tests

Works on H2 ⇒ will work on PostgreSQL, MS SQL, etc.

In-Memory H2 Capabilities

Starts with an empty database instantly

Migrates instantly

Queries are easily traced (SET TRACE_LEVEL_SYSTEM_OUT 2)

After the test, the state is "forgotten"

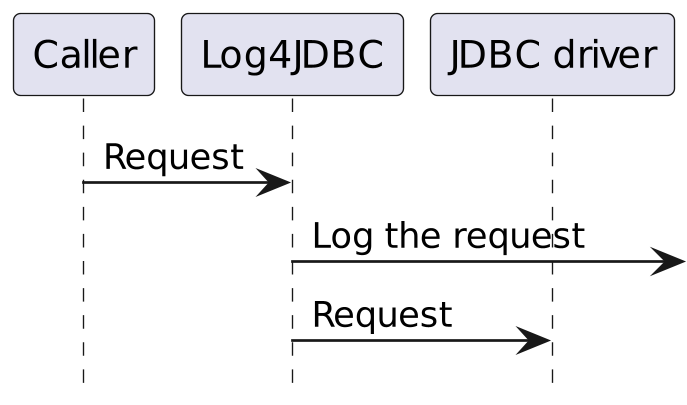

Using a spy JDBC driver to trace SQL queries

Instead of

jdbc:postgresql://host/database

use

jdbc:log4jdbc:postgresql://host/database
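Under the hood, a spy driver is a decorator. It hands out wrapped JDBC objects that record the SQL and then delegate to the real driver. Below is a minimal JDK-only sketch of that idea using `java.lang.reflect.Proxy`. It illustrates the technique and is not log4jdbc's implementation; `SqlSpy` is a hypothetical name. It only intercepts `Connection.prepareStatement` and `prepareCall`. A real spy driver also has to wrap statements to see SQL passed to the `execute*` methods.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.sql.Connection;
import java.util.List;

// Illustration of the spy-driver idea (not log4jdbc's implementation):
// a Connection decorator that records SQL, then delegates to the real connection.
final class SqlSpy {
    private SqlSpy() {}

    static Connection wrap(Connection target, List<String> sqlLog) {
        return (Connection) Proxy.newProxyInstance(
                SqlSpy.class.getClassLoader(),
                new Class<?>[] {Connection.class},
                (proxy, method, args) -> {
                    String name = method.getName();
                    // In every prepareStatement/prepareCall overload, the SQL is the first argument
                    if ((name.equals("prepareStatement") || name.equals("prepareCall"))
                            && args != null && args.length > 0 && args[0] instanceof String) {
                        sqlLog.add((String) args[0]);
                    }
                    try {
                        return method.invoke(target, args);
                    } catch (InvocationTargetException e) {
                        throw e.getCause();   // rethrow the driver's own exception
                    }
                });
    }
}
```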

CelestaTest: Arrange

@CelestaTest
class OrderDaoTest {
    OrderDao orderDao = new OrderDao();
    CustomerCursor customer;
    ItemCursor item;

    @BeforeEach
    void setUp(CallContext ctx) {
        customer = new CustomerCursor(ctx);
        customer.setName("John Doe")
                .setEmail("john@example.com").insert();
        item = new ItemCursor(ctx);
        item.setId("12345")
                .setName("cheese").setDefaultPrice(42).insert();
    }

CelestaTest: Local arrange

    @Test
    void orderedItemsMethodReturnsAggregatedValues(CallContext ctx)
            throws Exception {
        // ARRANGE
        ItemCursor item2 = new ItemCursor(ctx);
        item2.setId("2")
                .setName("item 2").insert();
        OrderCursor orderCursor = new OrderCursor(ctx);
        orderCursor.setId(null)
                .setItemId(item.getId())
                .setCustomerId(customer.getId())
                .setQuantity(1).insert();
        // and so on

CelestaTest: Act & Assert

        // ACT
        List<ItemDto> result = orderDao.getItems(ctx);
        // ASSERT
        Approvals.verifyJson(new ObjectMapper()
                .writer().writeValueAsString(result));
    }
}

CelestaTest

Works instantly

Creates an empty database with the required structure for each test

Encourages writing a large number of tests for all database-related logic

The price we pay is that functionality is limited to what Celesta supports.

Conclusions on TestContainers

Can pose problems with startup speed and developer machine configuration.

Real services are "black boxes," and it’s difficult to force them into a desired state.

Integration tests with "real" services are asynchronous, which brings difficulties that cannot be fully overcome. These difficulties need to be understood.

Conclusions on Mocks

Specialized mocks are easier to connect and start, and they run faster.

Mocks have special functionality that facilitates testing.

Mocks do not behave the same way as the real system. This fact needs to be understood and accepted.

For your system, mocks may simply not exist.

General Conclusions

When forming a testing strategy, one should rely not on stereotypes but on a deep understanding of the system’s characteristics and the available tools. The strategy will be different every time!

The testability of the system as a whole should be one of the criteria when choosing technologies.

The Most Important Conclusion

You should use both mocks and containers,


but above all, use your own head.

@inponomarev