Kafka Streams API

A Step Beyond Hello World

@inponomarev

Ivan Ponomarev, Synthesized/MIPT


Everything I show is on GitHub


Our plan

Lecture 1.

Lecture 2.

Kafka is


In Kafka you can


You can’t do it in Kafka


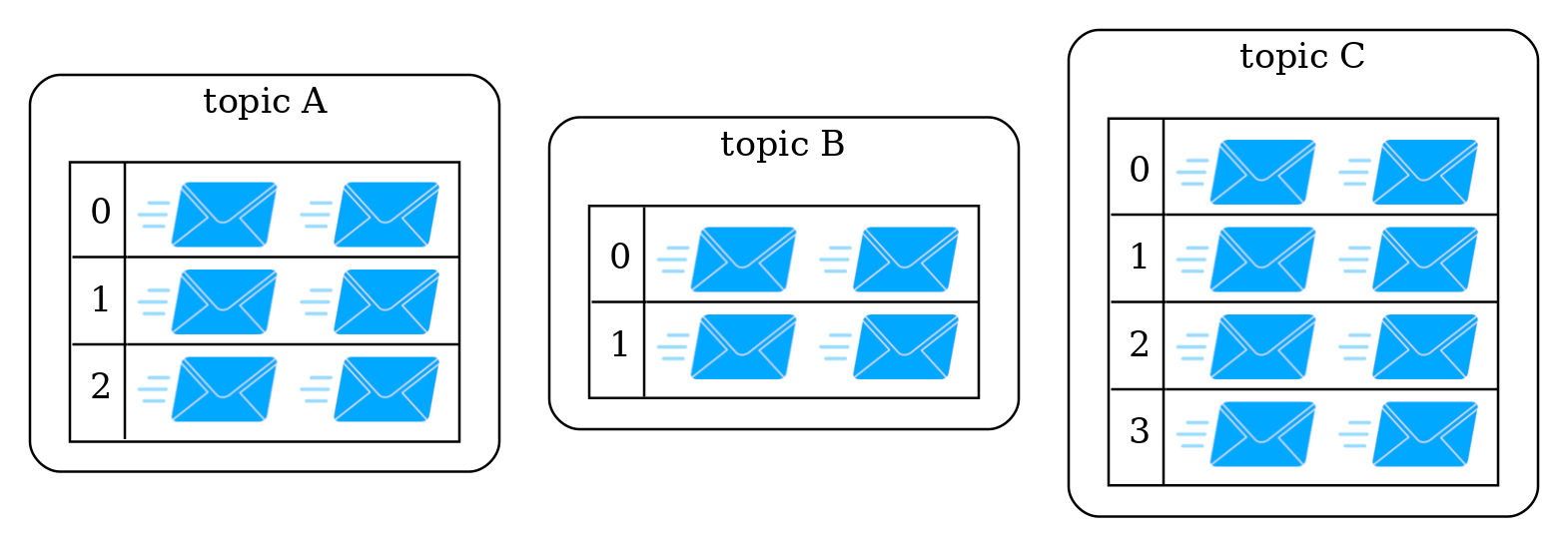

Topics, partitions and messages


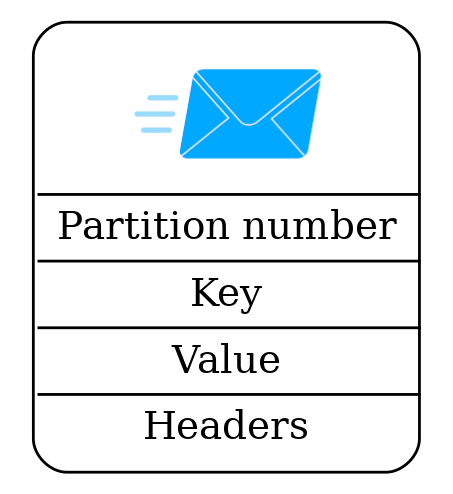

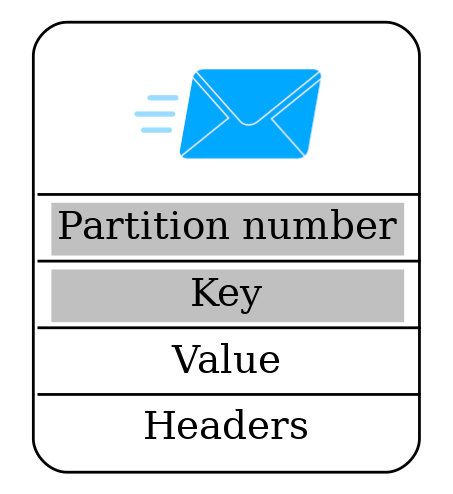
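Which partition a keyed message lands in is decided by hashing its key; the next slide quotes the core line of Kafka's default partitioner. Below is a JDK-only transcription of that computation as we understand it, for illustration only. The class name `KeyPartitionerSketch` is hypothetical, and we assume a `String` key serialized as UTF-8 (what `StringSerializer` does by default). Real code should rely on Kafka's own `Utils.murmur2` rather than a copy.

```java
import java.nio.charset.StandardCharsets;

class KeyPartitionerSketch {
    // Transcription of the murmur2 variant used by Kafka's default partitioner (illustrative only)
    static int murmur2(byte[] data) {
        int length = data.length;
        int seed = 0x9747b28c;
        final int m = 0x5bd1e995;
        final int r = 24;
        int h = seed ^ length;
        int length4 = length / 4;
        for (int i = 0; i < length4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        // Mix in the remaining 1-3 bytes (intentional fall-through)
        switch (length % 4) {
            case 3:
                h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2:
                h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1:
                h ^= data[length & ~3] & 0xff;
                h *= m;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    // Clears the sign bit so the result is non-negative (the idea behind Utils.toPositive)
    static int toPositive(int number) {
        return number & 0x7fffffff;
    }

    // Same formula as on the slide: toPositive(murmur2(keyBytes)) % numPartitions
    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        return toPositive(murmur2(keyBytes)) % numPartitions;
    }

    public static void main(String[] args) {
        for (String key : new String[] {"John Doe", "Jane Roe", "John Doe"}) {
            System.out.println(key + " -> partition " + partitionFor(key, 10));
        }
    }
}
```

The property that matters is determinism: as long as the partition count stays the same, every message with a given key goes to the same partition, so messages for one key stay in order. Changing the number of partitions changes the mapping for most keys.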

Anatomy of a message


// hash the keyBytes to choose a partition

return Utils.toPositive(Utils.murmur2(keyBytes)) % numPartitions;

Reading from Kafka

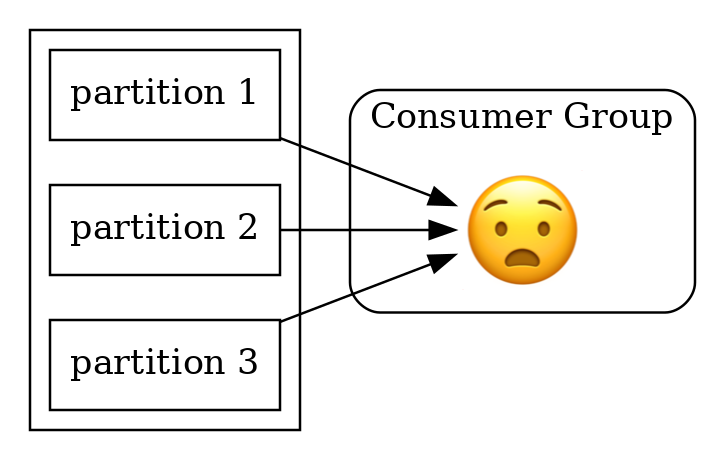

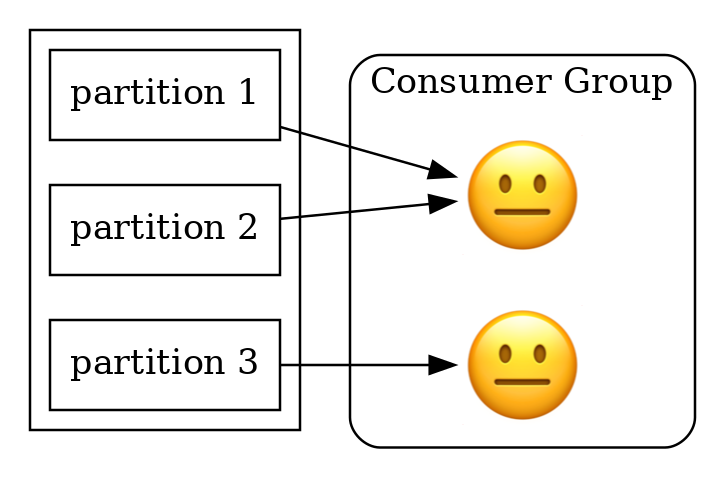

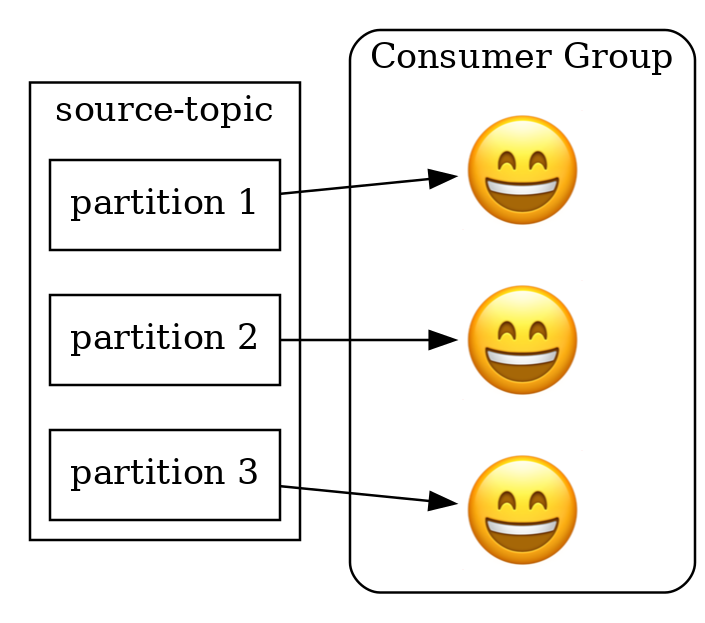

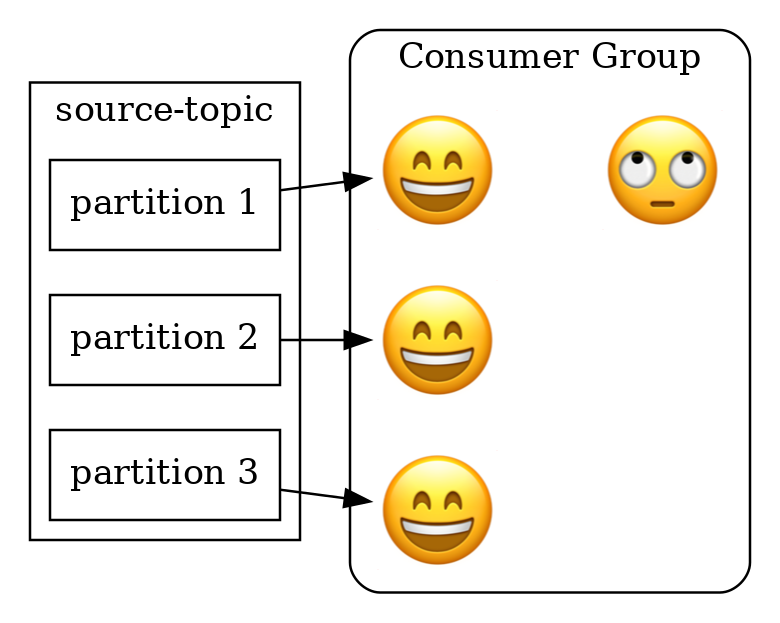


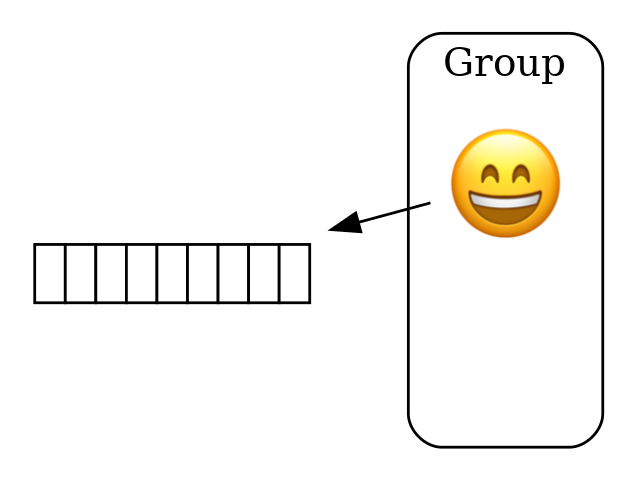

Offset Commit


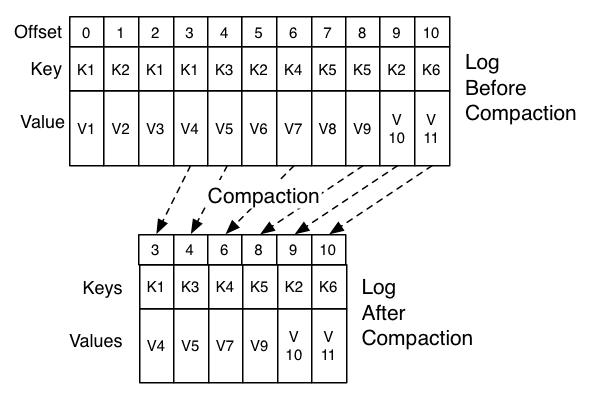
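The slides here are diagrams. The rule behind them: the committed offset is the offset of the next record the consumer group will read, and after a restart or rebalance, reading resumes from it. A toy model with no Kafka classes (all names are hypothetical) shows why committing after processing gives at-least-once delivery:

```java
import java.util.ArrayList;
import java.util.List;

class OffsetCommitSketch {
    private final List<String> partitionLog; // one partition; list index = offset
    private long committed = 0;              // committed offset = offset of the NEXT record to read
    final List<String> processed = new ArrayList<>();

    OffsetCommitSketch(List<String> partitionLog) {
        this.partitionLog = partitionLog;
    }

    // One consumer "session": resume from the committed offset, process up to maxRecords,
    // then commit only if commitAtEnd is true (false simulates a crash before the commit)
    void session(int maxRecords, boolean commitAtEnd) {
        long position = committed;
        for (int i = 0; i < maxRecords && position < partitionLog.size(); i++) {
            processed.add(partitionLog.get((int) position));
            position++;
        }
        if (commitAtEnd) {
            committed = position;
        }
    }

    long committed() {
        return committed;
    }

    public static void main(String[] args) {
        OffsetCommitSketch consumer = new OffsetCommitSketch(List.of("m0", "m1", "m2", "m3"));
        consumer.session(2, true);  // processes m0, m1 and commits offset 2
        consumer.session(1, false); // processes m2, then "crashes" before committing
        consumer.session(2, true);  // resumes at offset 2: m2 is processed again, then m3
        System.out.println(consumer.processed + ", committed = " + consumer.committed());
    }
}
```

Running `main` prints `[m0, m1, m2, m2, m3], committed = 4`: the record processed between the last commit and the crash is delivered a second time.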

Compacted topics

How Retention works

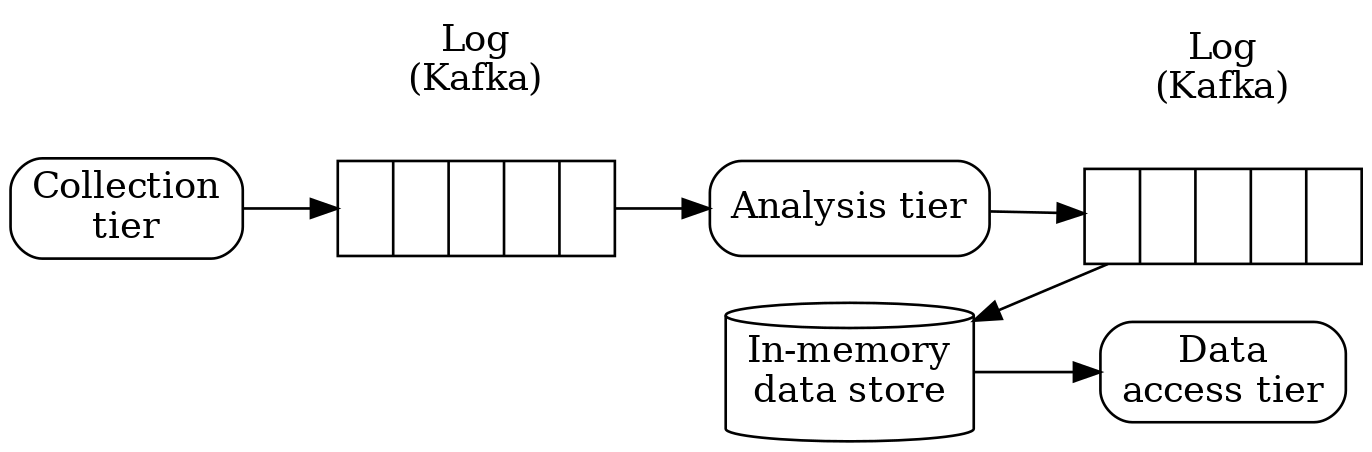
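With `cleanup.policy=compact`, the broker does not delete old data by time or size; it eventually keeps only the latest record for each key. A toy model of that end state (the `LogRecord` type and class name are hypothetical). It ignores the active segment, which the real log cleaner never compacts, and it drops tombstones (null values) at once, whereas Kafka first keeps them for `delete.retention.ms`:

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class CompactionSketch {
    // Hypothetical record type: offset, key, value (a null value is a tombstone)
    record LogRecord(long offset, String key, String value) {}

    // Keeps only the latest record per key, in original offset order.
    // Surviving records keep their offsets; tombstones are dropped immediately in this sketch.
    static List<LogRecord> compact(List<LogRecord> log) {
        Map<String, Long> latestOffset = new HashMap<>();
        for (LogRecord r : log) {
            latestOffset.put(r.key(), r.offset());
        }
        List<LogRecord> result = new ArrayList<>();
        for (LogRecord r : log) {
            boolean isLatest = latestOffset.get(r.key()) == r.offset();
            if (isLatest && r.value() != null) {
                result.add(r);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<LogRecord> log = List.of(
                new LogRecord(0, "a", "1"),
                new LogRecord(1, "b", "1"),
                new LogRecord(2, "a", "2"),
                new LogRecord(3, "b", null));
        System.out.println(compact(log)); // only the record at offset 2 (a=2) survives
    }
}
```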

Stream data processing: architecture

Where are streaming systems needed?

Monitoring! Logs!

Track user activity

Anomaly detection (including fraud detection)


Existing stream processing frameworks


Kafka Streams API: the general structure of a KStreams application

StreamsConfig config = ...;

// Here we set all sorts of options

Topology topology = new StreamsBuilder()

//Here we build the topology

....build();

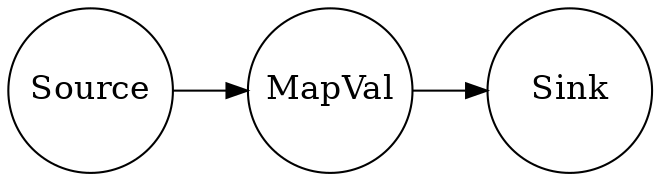

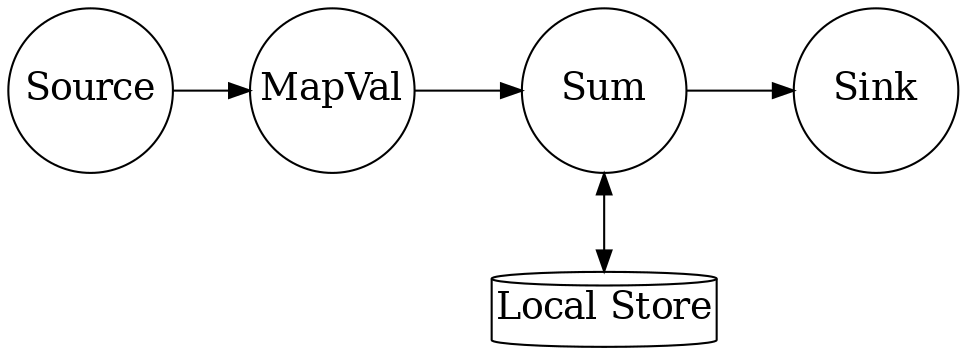

The topology is a pipeline of processors:

Convert a stream to a stream

(The animations are by Tagir Valeev; see the moving pictures here.)

Filtering


Display to the console (terminal operation)


All together in one line
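The animated slides build a `java.util.stream` pipeline step by step. A JDK-only sketch with arbitrary example operations (multiply by 10, keep values above 20; the class name is hypothetical):

```java
import java.util.List;
import java.util.stream.Collectors;

class StreamPipelineSketch {
    // map -> filter -> terminal collect: each intermediate step turns a stream into a stream
    static List<String> pipeline(List<Integer> input) {
        return input.stream()
                .map(x -> x * 10)              // convert each element
                .filter(x -> x > 20)           // drop elements that fail the predicate
                .map(String::valueOf)
                .collect(Collectors.toList()); // terminal operation: the pipeline runs here
    }

    public static void main(String[] args) {
        // All together in one line, with forEach printing to the console as the terminal operation
        List.of(1, 2, 3, 4).stream().map(x -> x * 10).filter(x -> x > 20).forEach(System.out::println);
    }
}
```

Intermediate operations (`map`, `filter`) only describe the pipeline; the terminal operation (`collect`, `forEach`) makes it run. `main` prints 30 and 40.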


It reminds us of something!

"Concatenate two files, convert their lines to lowercase, sort, display the last three lines in alphabetical order"

cat file1 file2 | tr "[A-Z]" "[a-z]" | sort | tail -3

Kafka Streams API: the general structure of a KStreams application

StreamsConfig config = ...;

// Here we set all sorts of options

Topology topology = new StreamsBuilder()

//Here we build the topology

....build();

// Spring-Kafka does this for us:

KafkaStreams streams = new KafkaStreams(topology, config);

streams.start();

...

streams.close();

In Spring, it is enough to define two beans:

@Bean KafkaStreamsConfiguration

@Bean Topology

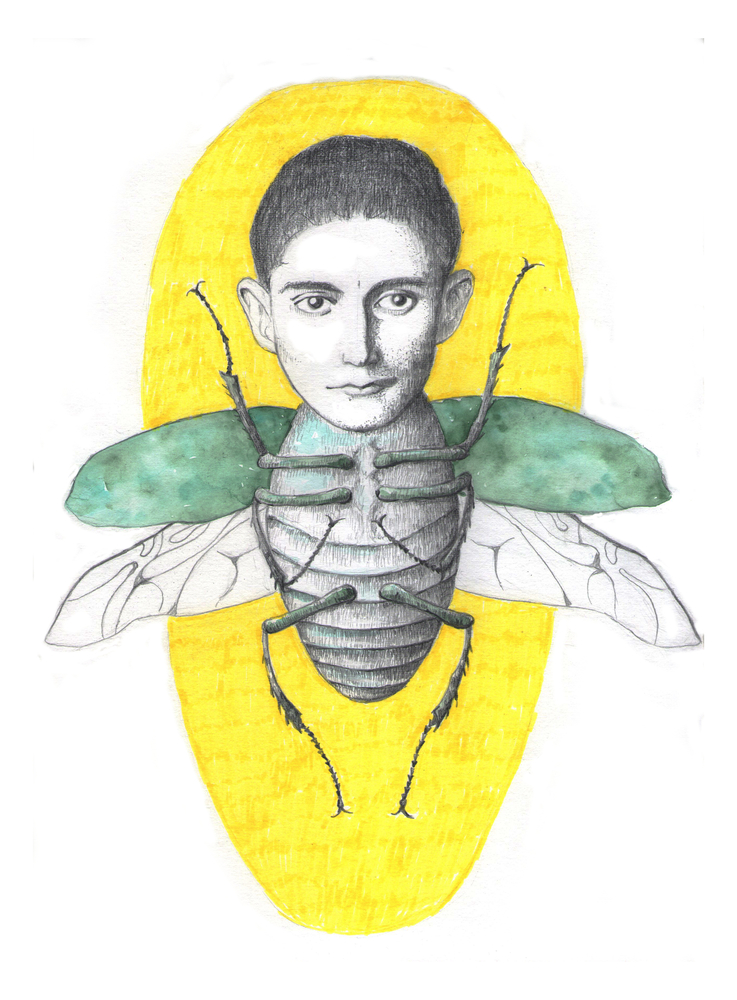

Our story


@Bean KafkaConfiguration

IMPORTANT!

@Bean(name = KafkaStreamsDefaultConfiguration.DEFAULT_STREAMS_CONFIG_BEAN_NAME)

public KafkaStreamsConfiguration getStreamsConfig() {

Map<String, Object> props = new HashMap<>();

// IMPORTANT!

props.put(StreamsConfig.APPLICATION_ID_CONFIG,

"stateless-demo-app");

// IMPORTANT!

props.put(StreamsConfig.NUM_STREAM_THREADS_CONFIG, 4);

props.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");

...

KafkaStreamsConfiguration streamsConfig =

new KafkaStreamsConfiguration(props);

return streamsConfig;

}

@Bean NewTopic

@Bean

NewTopic getFilteredTopic() {

Map<String, String> props = new HashMap<>();

props.put(

TopicConfig.CLEANUP_POLICY_CONFIG,

TopicConfig.CLEANUP_POLICY_COMPACT);

return new NewTopic("mytopic", 10, (short) 1).configs(props);

}

@Bean Topology

@Bean

public Topology createTopology(StreamsBuilder streamsBuilder) {

KStream<String, Bet> input = streamsBuilder.stream(...);

KStream<String, Long> gain

= input.mapValues(v -> Math.round(v.getAmount() * v.getOdds()));

gain.to(GAIN_TOPIC, Produced.with(Serdes.String(),

new JsonSerde<>(Long.class)));

return streamsBuilder.build();

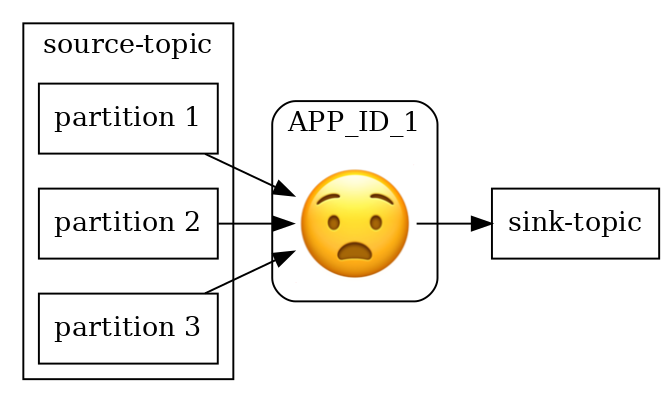

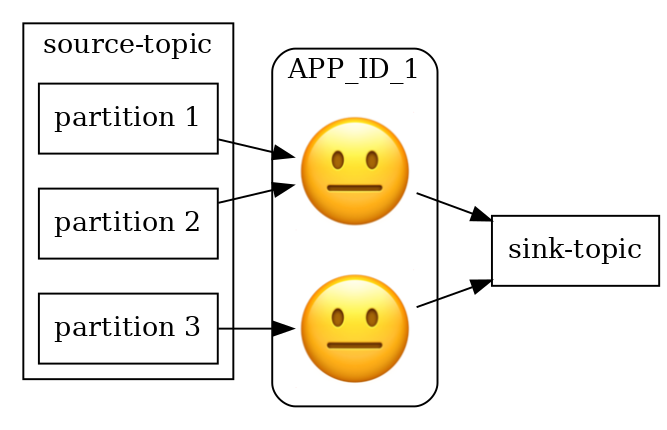

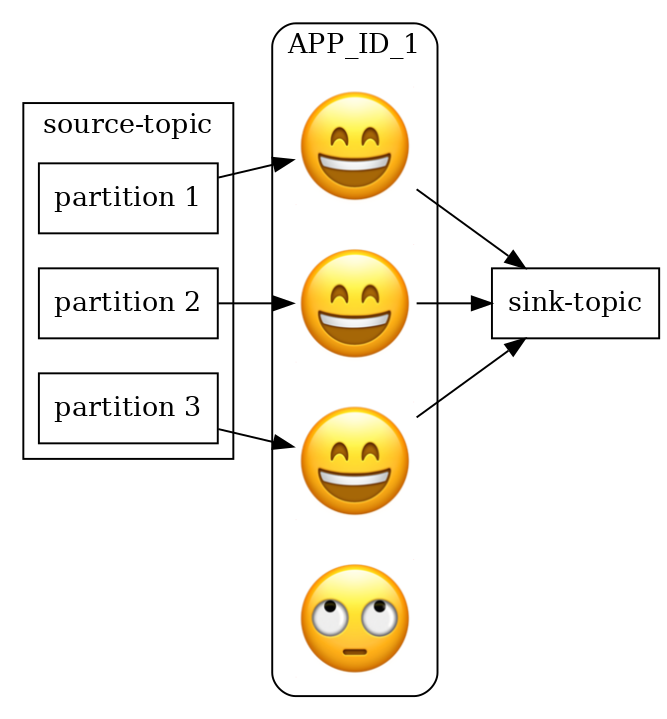

}Three lines of code, and what’s the big deal?

Need more messages per second? Start more instances with the same 'application.id'!

Adding nodes

Scaling is limited only by the number of partitions
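With several instances sharing one `application.id`, Kafka spreads the input partitions (more precisely, the stream tasks built from them) across the instances. The round-robin dealing below is a simplified stand-in for the real assignor, with hypothetical names; it only illustrates why the partition count caps useful parallelism:

```java
import java.util.ArrayList;
import java.util.List;

class AssignmentSketch {
    // Deals partitions 0..numPartitions-1 round-robin over the instances.
    // A simplified stand-in for Kafka's group assignment, not the real Streams assignor.
    static List<List<Integer>> assign(int numPartitions, int numInstances) {
        List<List<Integer>> perInstance = new ArrayList<>();
        for (int i = 0; i < numInstances; i++) {
            perInstance.add(new ArrayList<>());
        }
        for (int p = 0; p < numPartitions; p++) {
            perInstance.get(p % numInstances).add(p);
        }
        return perInstance;
    }

    // Number of instances that received at least one partition
    static long busyInstances(int numPartitions, int numInstances) {
        return assign(numPartitions, numInstances).stream().filter(ps -> !ps.isEmpty()).count();
    }

    public static void main(String[] args) {
        for (int instances : new int[] {1, 4, 10, 12}) {
            System.out.println(instances + " instance(s): " + assign(10, instances));
        }
    }
}
```

With 10 partitions, the 11th and 12th instances get nothing to do: `busyInstances(10, 12)` is 10. The same cap applies to the total number of stream threads (`num.stream.threads` summed over all instances).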

TopologyTestDriver: creating

KafkaStreamsConfiguration config = new KafkaConfiguration()

.getStreamsConfig();

StreamsBuilder sb = new StreamsBuilder();

Topology topology = new TopologyConfiguration().createTopology(sb);

TopologyTestDriver topologyTestDriver =

new TopologyTestDriver(topology,

config.asProperties());

TestInput/OutputTopic: creating

TestInputTopic<String, Bet> inputTopic =

topologyTestDriver.createInputTopic(BET_TOPIC,

Serdes.String().serializer(),

new JsonSerde<>(Bet.class).serializer());

TestOutputTopic<String, Long> outputTopic =

topologyTestDriver.createOutputTopic(GAIN_TOPIC,

Serdes.String().deserializer(),

new JsonSerde<>(Long.class).deserializer());

TopologyTestDriver: Usage

Bet bet = Bet.builder()

.bettor("John Doe")

.match("Germany-Belgium")

.outcome(Outcome.H)

.amount(100)

.odds(1.7).build();

inputTopic.pipeInput(bet.key(), bet);

TestRecord<String, Long> record = outputTopic.readRecord();

assertEquals(bet.key(), record.key());

assertEquals(170L, record.value().longValue());

If something goes wrong…

default.deserialization.exception.handler: a message could not be deserialized

default.production.exception.handler: the broker rejected the message (for example, it is too large)

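If everything falls apart completely:

streams.setUncaughtExceptionHandler(

// Thread.UncaughtExceptionHandler variant; deprecated since Kafka 2.8
// in favour of StreamsUncaughtExceptionHandler

(Thread thread, Throwable throwable) -> {

...

});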

In Spring, things are more complicated (see code)

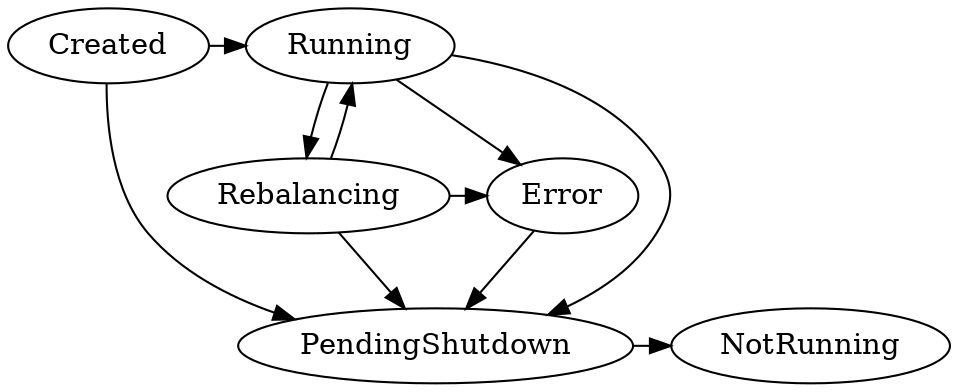

KafkaStreams application states

What else do I need to know about stateless transformations?

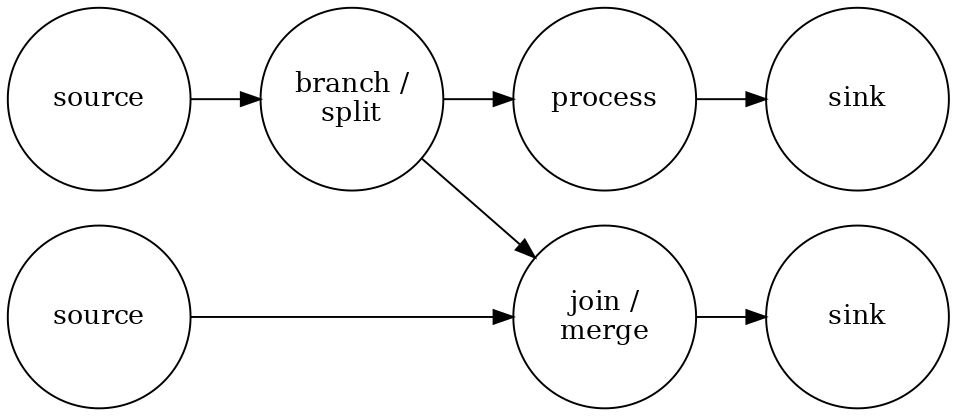

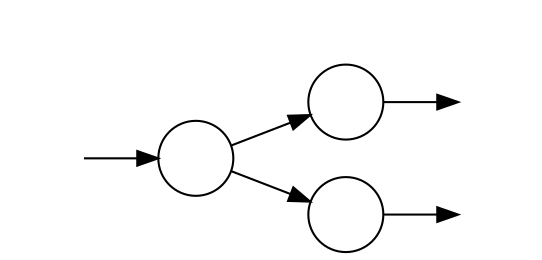

Easy branching of streams

Java streams can’t do that:

KStream<..> foo = ...

// two independent pipelines fed by the same stream
foo.mapValues(...).map(...).to(...);

foo.filter(...).map(...).foreach(...);
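A `KStream` is a node in the topology graph, so any number of downstream pipelines can hang off it. A `java.util.stream.Stream` can be consumed only once. A JDK-only demonstration (the class name is hypothetical):

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class SingleUseStreamSketch {
    // Attaches two pipelines to ONE java.util.stream.Stream; returns true if the second is rejected
    static boolean secondPipelineRejected() {
        Stream<Integer> foo = Stream.of(1, 2, 3);
        foo.map(x -> x * 2).forEach(x -> { });        // first pipeline links to (and consumes) foo
        try {
            foo.filter(x -> x > 1).forEach(x -> { }); // second pipeline on the same stream
            return false;
        } catch (IllegalStateException e) {
            return true;                               // "stream has already been operated upon or closed"
        }
    }

    // The plain-Java workaround: keep the source in a collection and stream it twice
    static List<List<Integer>> twoPipelines(List<Integer> source) {
        List<Integer> doubled = source.stream().map(x -> x * 2).collect(Collectors.toList());
        List<Integer> filtered = source.stream().filter(x -> x > 1).collect(Collectors.toList());
        return List.of(doubled, filtered);
    }

    public static void main(String[] args) {
        System.out.println(secondPipelineRejected());
        System.out.println(twoPipelines(List.of(1, 2, 3)));
    }
}
```

`secondPipelineRejected()` returns `true`: the second pipeline fails with `IllegalStateException`. Keeping everything in a collection is the usual workaround in plain Java, which is not an option for an unbounded stream.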

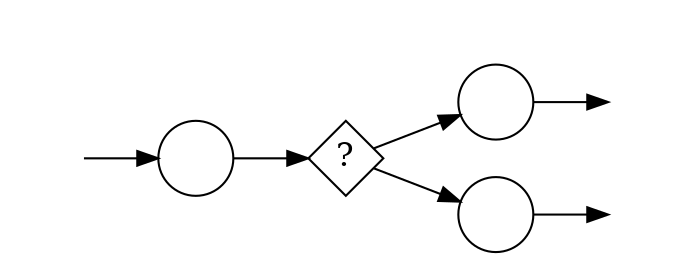

Branching streams by condition

Since version 2.8:

gain.split()

.branch((key, value) -> key.contains("A"),

Branched.withConsumer(ks -> ks.to("A")))

.branch((key, value) -> key.contains("B"),

Branched.withConsumer(ks -> ks.to("B")));
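`split()` routes each record to the first branch whose predicate matches; a record that matches none is dropped unless a default branch is defined. A plain-Java model of that routing rule (not the Kafka API; names are hypothetical), with the same `key.contains("A")` / `key.contains("B")` predicates as above:

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BiPredicate;

class SplitSketch {
    // Routes each record to the FIRST branch (in insertion order) whose predicate matches.
    // Records that match no branch are dropped, as with split() without a default branch.
    static Map<String, List<String>> split(List<Map.Entry<String, Long>> records,
                                           LinkedHashMap<String, BiPredicate<String, Long>> branches) {
        Map<String, List<String>> routed = new LinkedHashMap<>();
        for (String name : branches.keySet()) {
            routed.put(name, new ArrayList<>());
        }
        for (Map.Entry<String, Long> record : records) {
            for (Map.Entry<String, BiPredicate<String, Long>> branch : branches.entrySet()) {
                if (branch.getValue().test(record.getKey(), record.getValue())) {
                    routed.get(branch.getKey()).add(record.getKey());
                    break; // first match wins
                }
            }
        }
        return routed;
    }

    public static void main(String[] args) {
        LinkedHashMap<String, BiPredicate<String, Long>> branches = new LinkedHashMap<>();
        branches.put("A", (key, value) -> key.contains("A"));
        branches.put("B", (key, value) -> key.contains("B"));
        List<Map.Entry<String, Long>> gains =
                List.of(Map.entry("AB", 170L), Map.entry("B", 50L), Map.entry("C", 10L));
        System.out.println(split(gains, branches)); // {A=[AB], B=[B]}
    }
}
```

A key such as `"AB"` matches both predicates but goes only to branch A, because branches are tried in declaration order; `"C"` matches nothing and is dropped.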

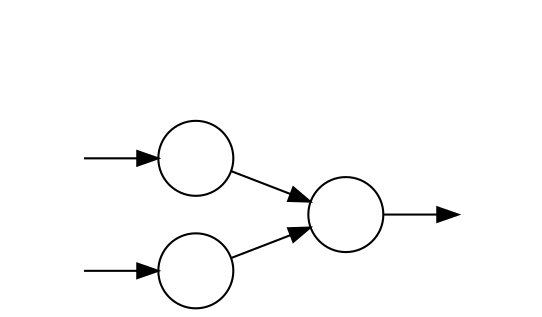

Simple merge

KStream<String, Integer> foo = ...

KStream<String, Integer> bar = ...

KStream<String, Integer> merge = foo.merge(bar);


Local state

Facebook’s RocksDB: what is it and what is it for?


RocksDB is similar to 'TreeMap<K,V>':

Stores K,V in binary format

Keys are sorted lexicographically (byte-wise)

Iterator (snapshot view)

Range deletion (deleteRange)
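To make the analogy concrete, a JDK-only sketch (no RocksDB involved; the class name is hypothetical). Keys and values are byte arrays, keys are ordered byte by byte as unsigned values (the order of RocksDB's default bytewise comparator), and `deleteRange` removes a half-open range `[from, to)`, like RocksDB's `deleteRange(beginKey, endKey)`:

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeMap;

class SortedBytesStoreSketch {
    // Keys and values as raw bytes; keys ordered by unsigned byte-wise (lexicographic) comparison
    private final TreeMap<byte[], byte[]> map = new TreeMap<byte[], byte[]>(Arrays::compareUnsigned);

    private static byte[] bytes(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    void put(String key, String value) {
        map.put(bytes(key), bytes(value));
    }

    String get(String key) {
        byte[] v = map.get(bytes(key));
        return v == null ? null : new String(v, StandardCharsets.UTF_8);
    }

    // A TreeMap iterator is not a snapshot, so copy the keys to get a stable view
    List<String> snapshotKeys() {
        List<String> keys = new ArrayList<>();
        for (byte[] k : map.keySet()) {
            keys.add(new String(k, StandardCharsets.UTF_8));
        }
        return keys;
    }

    // Analogue of deleteRange: removes keys in [from, to), from inclusive, to exclusive
    void deleteRange(String from, String to) {
        map.subMap(bytes(from), true, bytes(to), false).clear();
    }

    public static void main(String[] args) {
        SortedBytesStoreSketch store = new SortedBytesStoreSketch();
        store.put("b", "2");
        store.put("a", "1");
        store.put("c", "3");
        store.put("B", "0");
        System.out.println(store.snapshotKeys()); // [B, a, b, c]: byte order puts uppercase first
        store.deleteRange("a", "c");
        System.out.println(store.snapshotKeys()); // [B, c]
    }
}
```

A side benefit of the comparator: lookups by `byte[]` key work by content, which a `HashMap<byte[], byte[]>` would not do, because arrays use identity `equals`.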

Writing a "Bet Totalling App"

What is the payout amount for the bets placed if a given outcome occurs?
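Stripped of Kafka, the state logic of this app is a read-modify-write on a key-value map. A JDK-only sketch that follows the same steps as the `transform` method on the next slides, with a `HashMap` standing in for `KeyValueStore<String, Long>` and a hypothetical `match:outcome` key format:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

class TotallingSketch {
    // A plain HashMap standing in for KeyValueStore<String, Long>
    private final Map<String, Long> store = new HashMap<>();

    // Read the running total for the key (0 if absent), add the bet amount, write it back, emit it
    long onBet(String key, long amount) {
        long current = Optional.ofNullable(store.get(key)).orElse(0L);
        current += amount;
        store.put(key, current);
        return current;
    }

    Long storedTotal(String key) {
        return store.get(key);
    }

    public static void main(String[] args) {
        TotallingSketch totals = new TotallingSketch();
        System.out.println(totals.onBet("Germany-Belgium:H", 100)); // 100
        System.out.println(totals.onBet("Germany-Belgium:H", 50));  // 150
        System.out.println(totals.onBet("Germany-Belgium:A", 30));  // 30
    }
}
```

What Kafka Streams adds on top is making this map local to each partition, persistent (RocksDB) and fault-tolerant (a changelog topic), which is what the following slides show.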

@Bean Topology

KStream<String, Bet> input = streamsBuilder.

stream(BET_TOPIC, Consumed.with(Serdes.String(),

new JsonSerde<>(Bet.class)));

KStream<String, Long> counted =

new TotallingTransformer()

.transformStream(streamsBuilder, input);

Bets totalling

@Override

public KeyValue<String, Long> transform(String key, Bet value,

KeyValueStore<String, Long> stateStore) {

long current = Optional

.ofNullable(stateStore.get(key))

.orElse(0L);

current += value.getAmount();

stateStore.put(key, current);

return KeyValue.pair(key, current);

}

StateStore is available in tests

@Test

void testTopology() {

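// TestInputTopic created as on the earlier slides; the record-based TopologyTestDriver.pipeInput(...) is deprecated
inputTopic.pipeInput(...);

inputTopic.pipeInput(...);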

KeyValueStore<String, Long> store =

topologyTestDriver

.getKeyValueStore(TotallingTransformer.STORE_NAME);

assertEquals(..., store.get(...));

assertEquals(..., store.get(...));

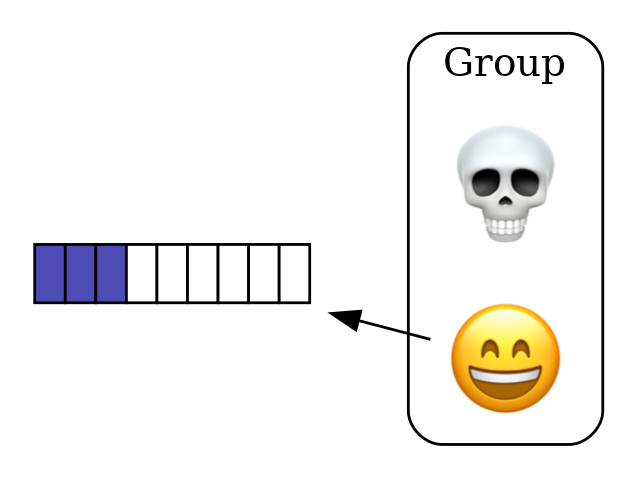

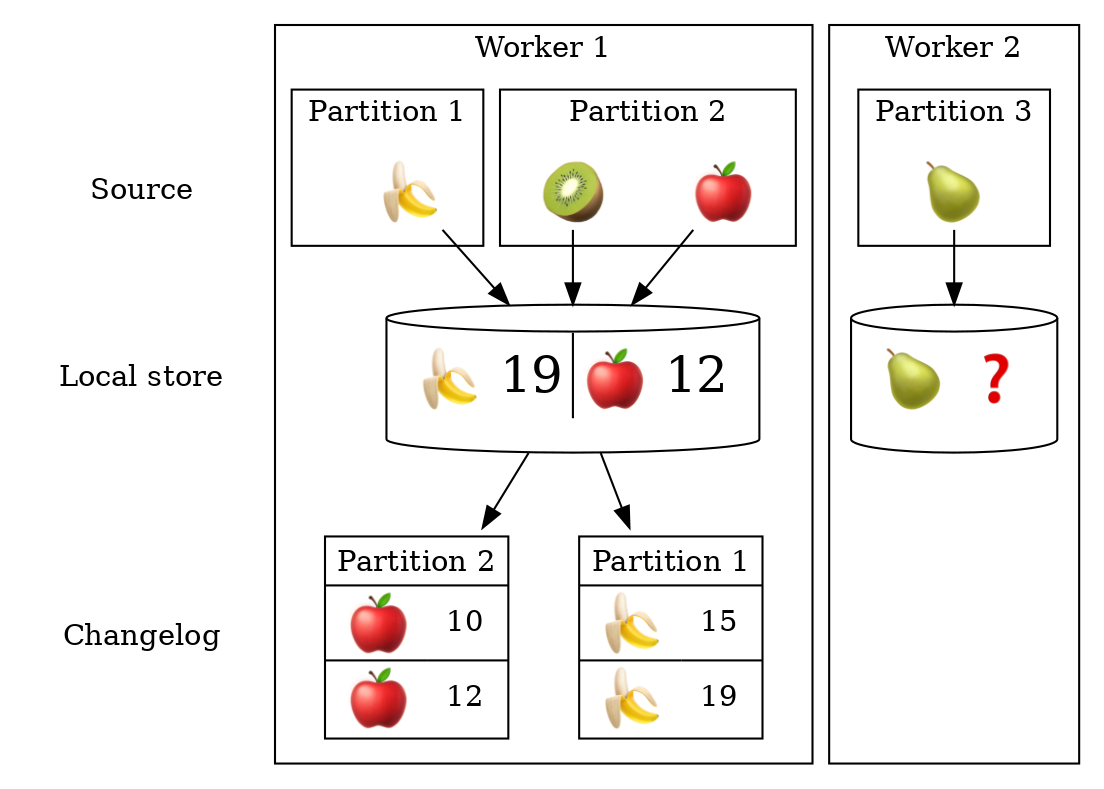

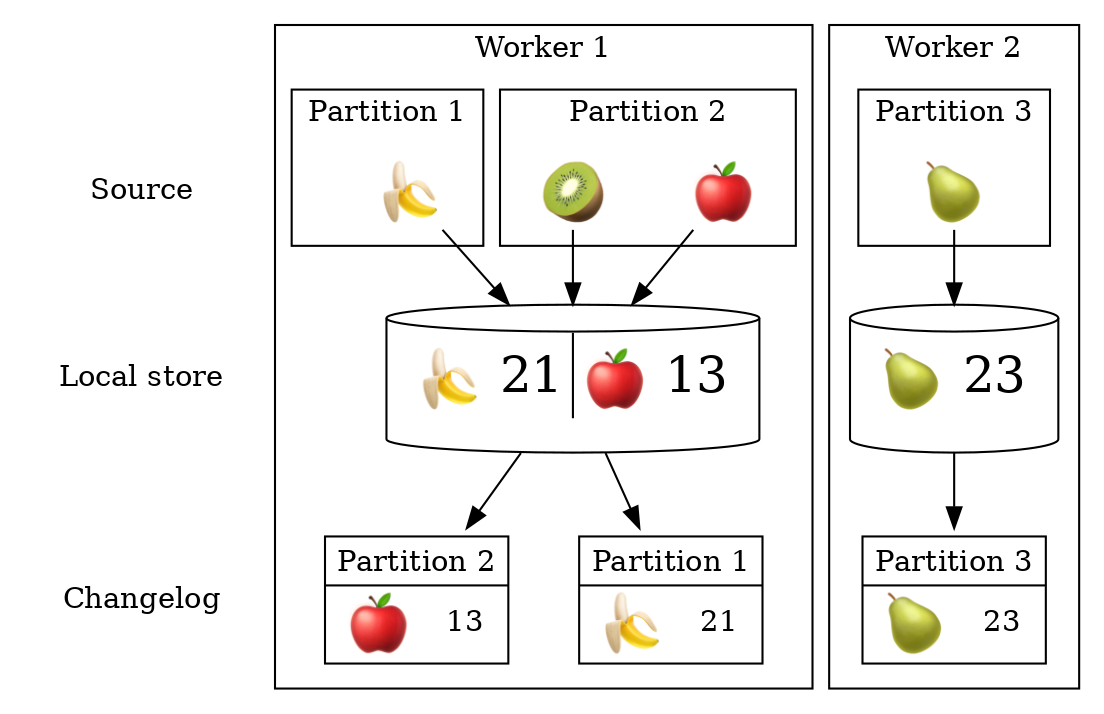

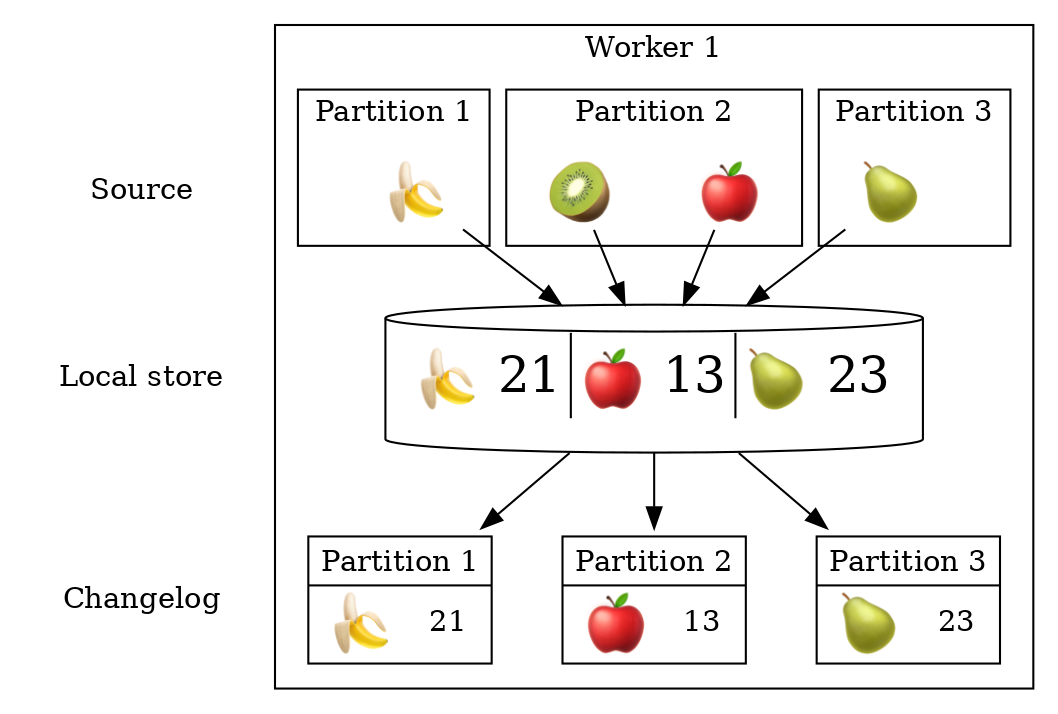

}Demo: Rebalancing / Replication

Rebalance/replication of partitions when starting/stopping workers.

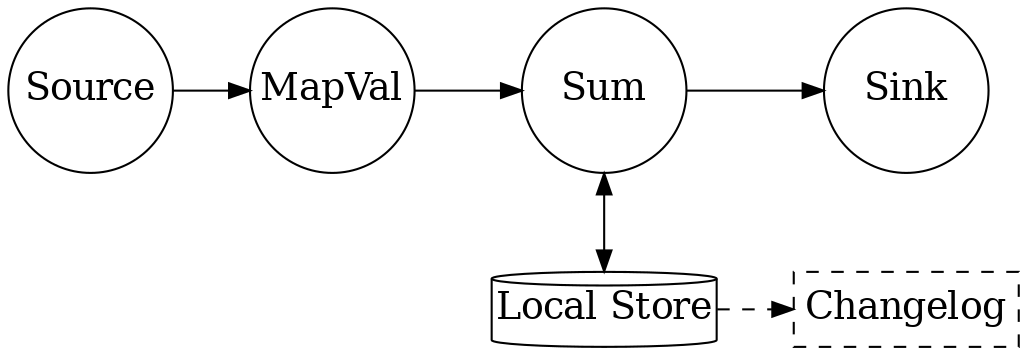

Replication of local state into a topic

$ kafka-topics --zookeeper localhost --describe

Topic:bet-totalling-demo-app-totalling-store-changelog

PartitionCount:10

ReplicationFactor:1

Configs:cleanup.policy=compact
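The changelog topic name follows Kafka Streams' convention `<application.id>-<store name>-changelog`, and the topic is compacted because only the latest value per key is needed to rebuild the store. A JDK-only sketch of that rebuild. The class and helper names are hypothetical, and from the listing we assume `application.id` is `bet-totalling-demo-app` and the store is named `totalling-store`:

```java
import java.util.AbstractMap;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ChangelogRestoreSketch {
    // Naming convention for a store's changelog topic: <application.id>-<store name>-changelog
    static String changelogTopic(String applicationId, String storeName) {
        return applicationId + "-" + storeName + "-changelog";
    }

    // Hypothetical helper: one changelog record (key, value); a null value is a tombstone
    static Map.Entry<String, Long> rec(String key, Long value) {
        return new AbstractMap.SimpleEntry<>(key, value);
    }

    // Rebuilds a local store by replaying the changelog in offset order: a later value for a key
    // overwrites an earlier one, and a tombstone deletes the key
    static Map<String, Long> restore(List<Map.Entry<String, Long>> changelog) {
        Map<String, Long> store = new HashMap<>();
        for (Map.Entry<String, Long> entry : changelog) {
            if (entry.getValue() == null) {
                store.remove(entry.getKey());
            } else {
                store.put(entry.getKey(), entry.getValue());
            }
        }
        return store;
    }

    public static void main(String[] args) {
        System.out.println(changelogTopic("bet-totalling-demo-app", "totalling-store"));
        System.out.println(restore(List.of(
                rec("Germany-Belgium:H", 100L),
                rec("Germany-Belgium:A", 30L),
                rec("Germany-Belgium:H", 150L),
                rec("Germany-Belgium:A", null))));
    }
}
```

`main` prints the topic name from the listing, then `{Germany-Belgium:H=150}`: only the latest value survives, and the tombstone removed the other key.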

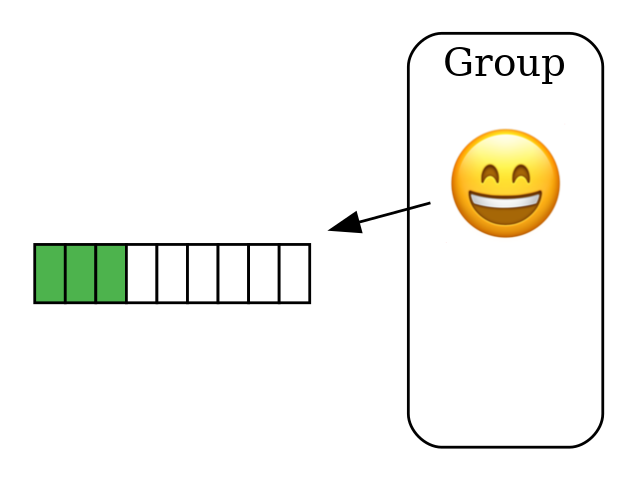

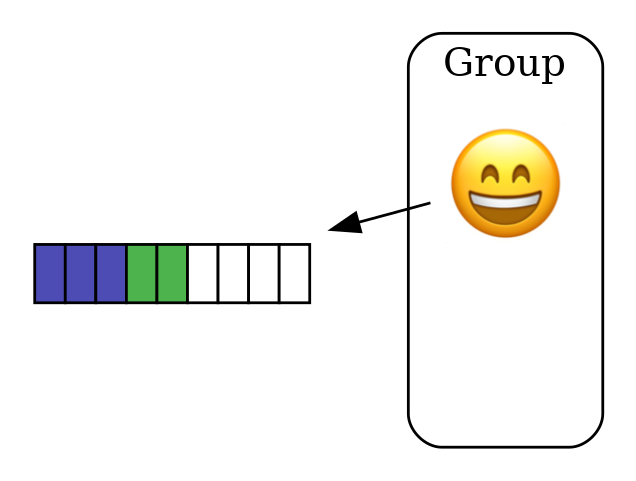

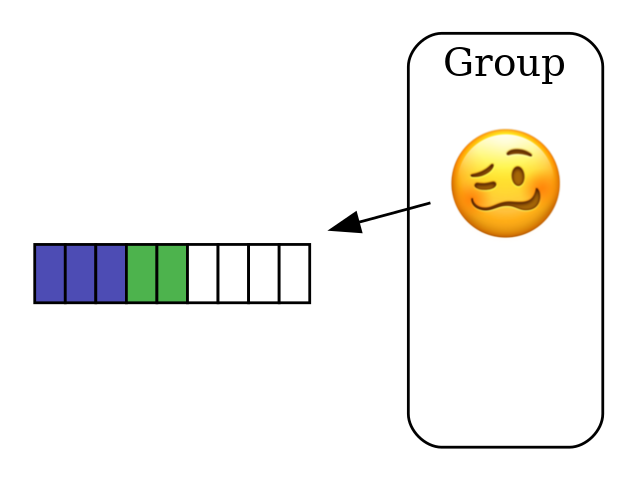

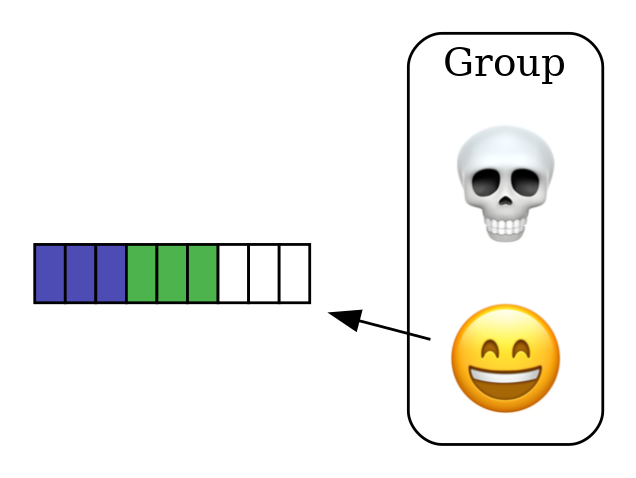

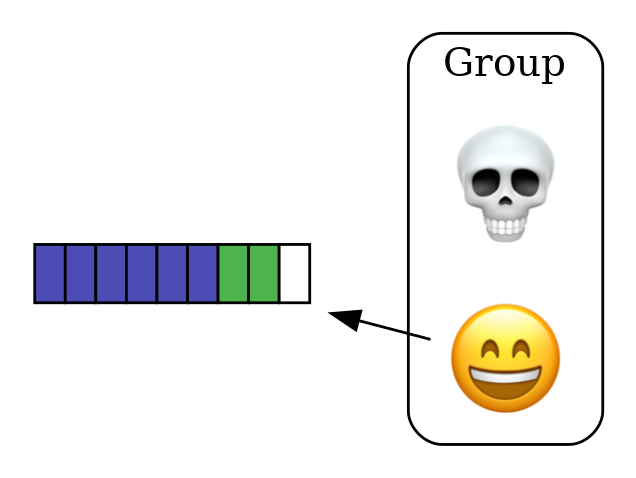

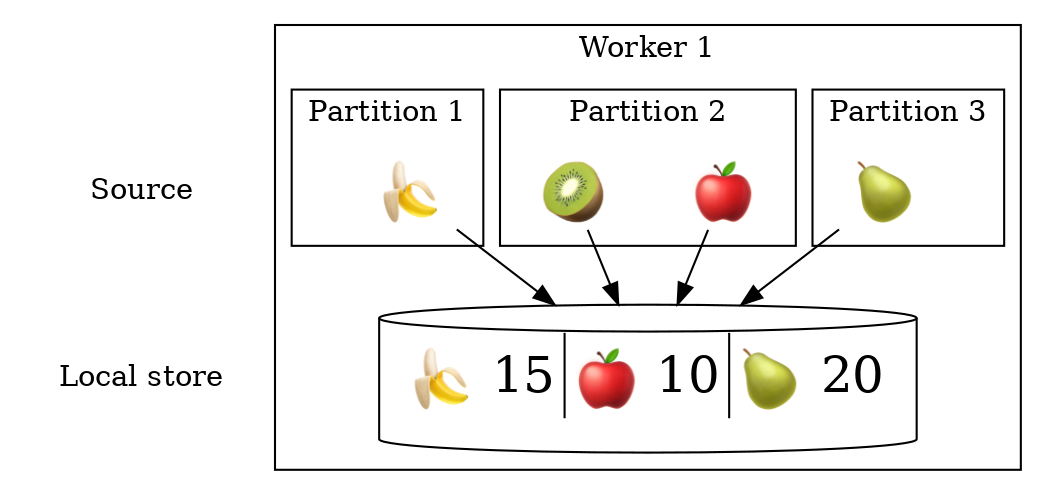

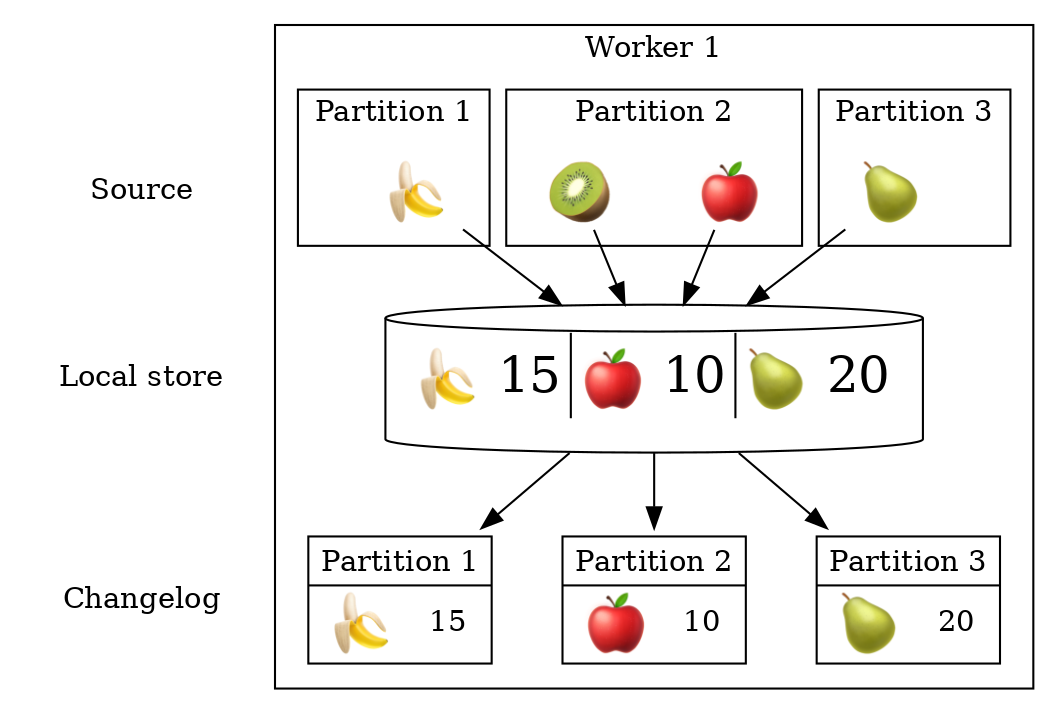

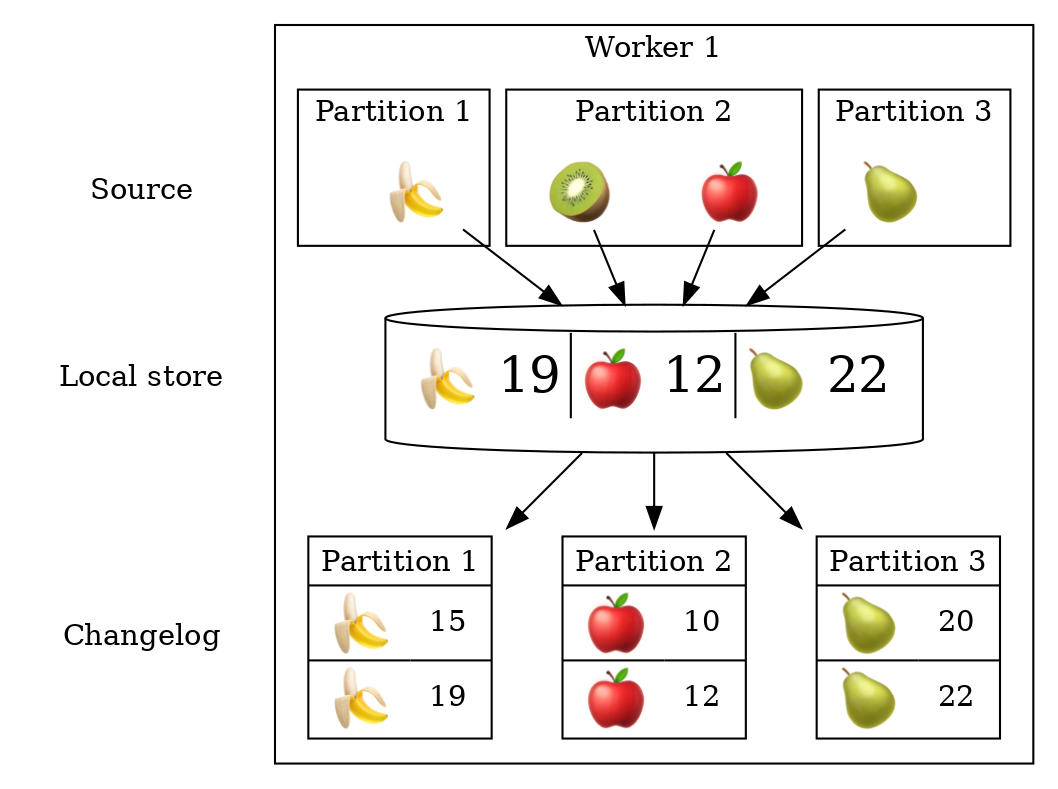

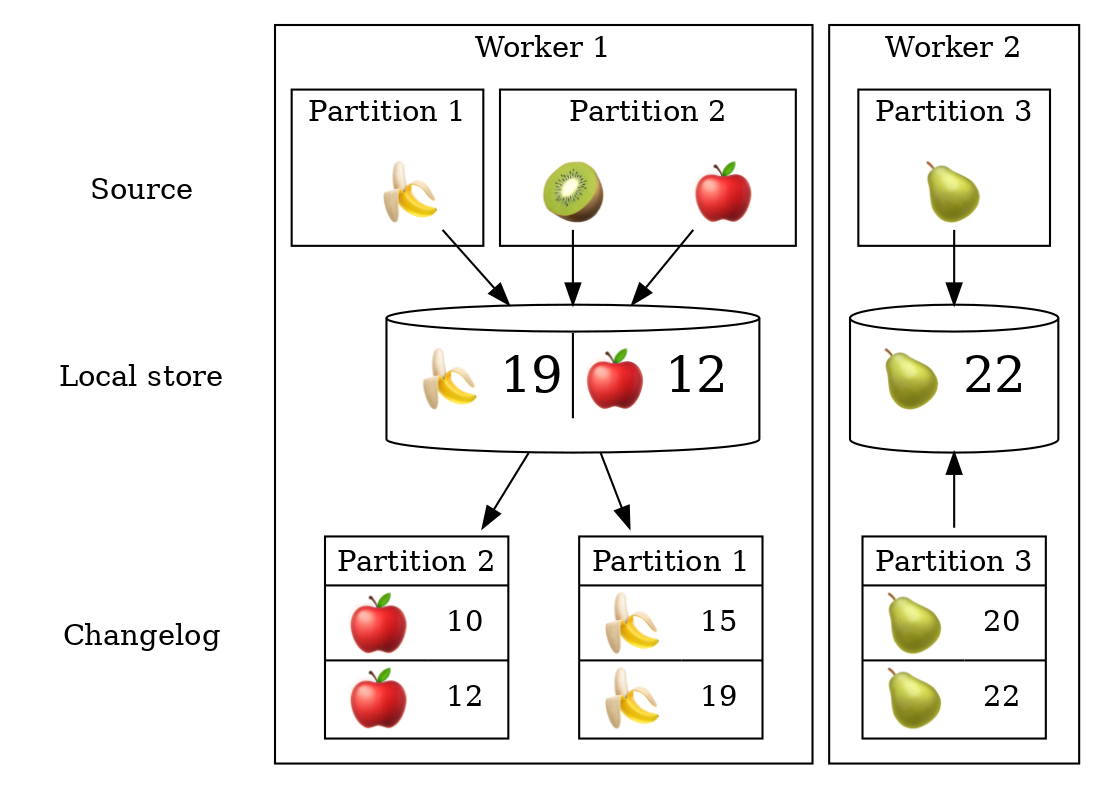

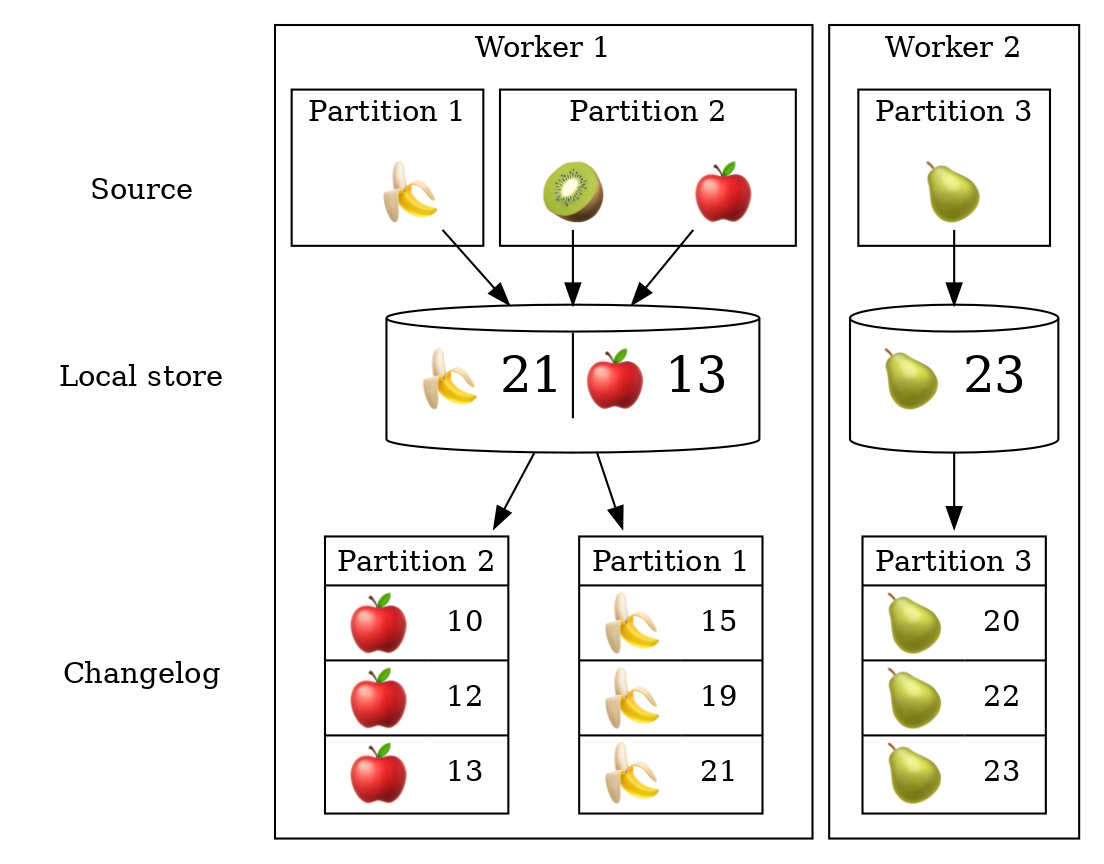

Partitioning and local state


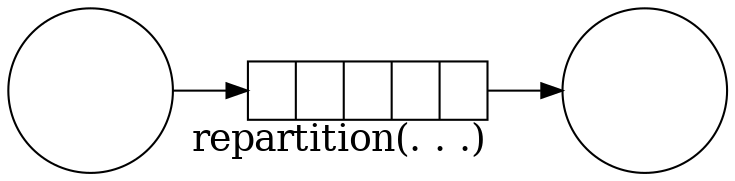

Repartition

Explicit, using

repartition(Repartitioned<K, V> repartitioned)

Implicit: a key-changing operation followed by a stateful operation
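Why repartitioning is needed: local state lives per partition, so after a key change, records with the same new key may still sit in different partitions and be aggregated into different stores. A JDK-only toy model (hypothetical names; `String.hashCode` stands in for Kafka's partitioner):

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class RepartitionSketch {
    // Stand-in partitioner (NOT Kafka's murmur2): non-negative hash modulo partition count
    static int partitionFor(String key, int numPartitions) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    // Counts records per key separately in each partition, like one local store per task
    static List<Map<String, Integer>> countPerPartition(List<List<String>> partitions) {
        List<Map<String, Integer>> stores = new ArrayList<>();
        for (List<String> partition : partitions) {
            Map<String, Integer> store = new HashMap<>();
            for (String key : partition) {
                store.merge(key, 1, Integer::sum);
            }
            stores.add(store);
        }
        return stores;
    }

    // Moves every record to the partition chosen by its CURRENT key: the essence of repartitioning
    static List<List<String>> repartition(List<List<String>> partitions, int numPartitions) {
        List<List<String>> result = new ArrayList<>();
        for (int i = 0; i < numPartitions; i++) {
            result.add(new ArrayList<>());
        }
        for (List<String> partition : partitions) {
            for (String key : partition) {
                result.get(partitionFor(key, numPartitions)).add(key);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Records already re-keyed by match, but still sitting where their OLD keys put them
        List<List<String>> afterKeyChange = List.of(List.of("match1", "match2"), List.of("match1"));
        System.out.println(countPerPartition(afterKeyChange));                 // match1 counted in two stores
        System.out.println(countPerPartition(repartition(afterKeyChange, 2))); // each key in exactly one store
    }
}
```

Before `repartition`, `match1` is counted in two stores, each holding a partial count of 1; afterwards, both records land in one partition and one store counts 2. In Kafka Streams, this re-distribution goes through an internal repartition topic.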

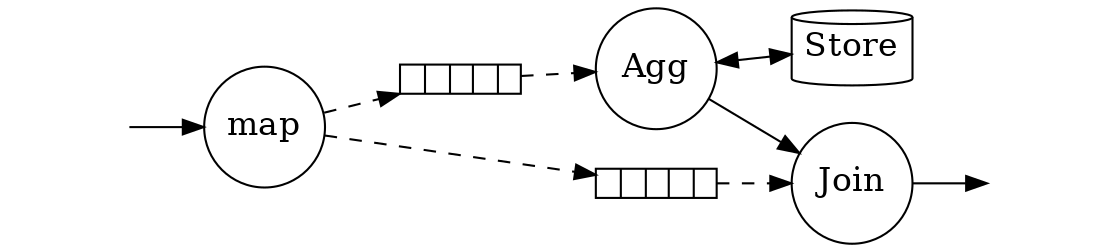

Duplicate implicit repartitioning

KStream source = builder.stream("topic1");

KStream mapped = source.map(...);

KTable counts = mapped.groupByKey().aggregate(...);

KStream sink = mapped.leftJoin(counts, ...);

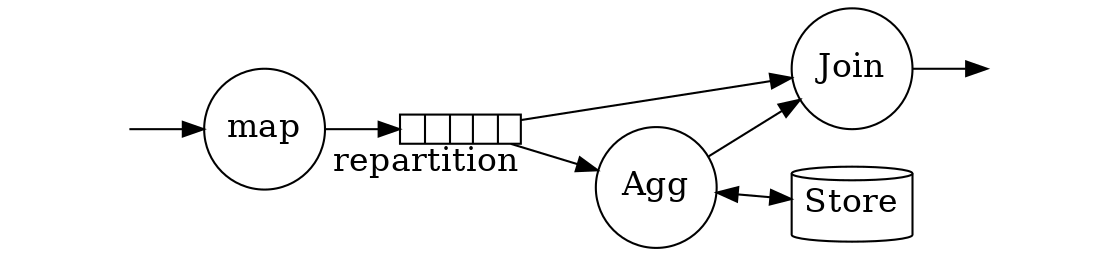

Getting rid of duplicate repartitioning

KStream source = builder.stream("topic1");

KStream shuffled = source.map(...).repartition(...);

KTable counts = shuffled.groupByKey().aggregate(...);

KStream sink = shuffled.leftJoin(counts, ...);

It is better not to touch the key when not needed

Key only: selectKey

Key and value | Value only