Apache Kafka

what it is and how it will change the architecture of your application

@inponomarev

Ivan Ponomarev, Synthesized/MIPT


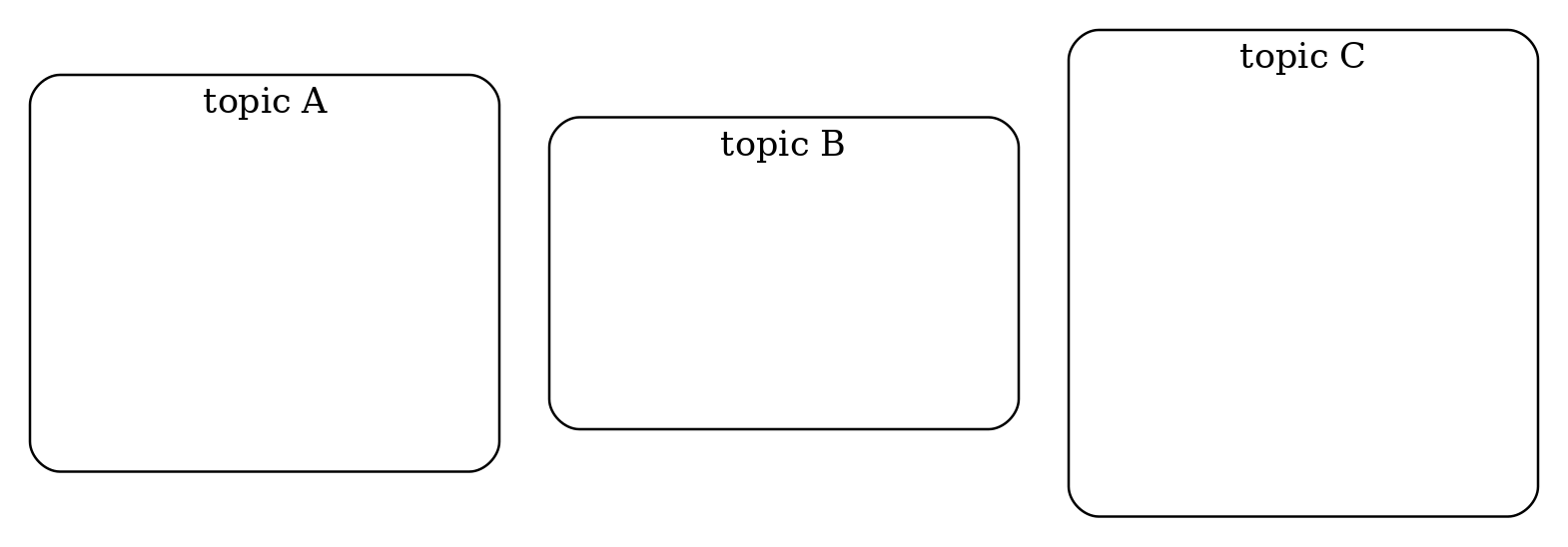

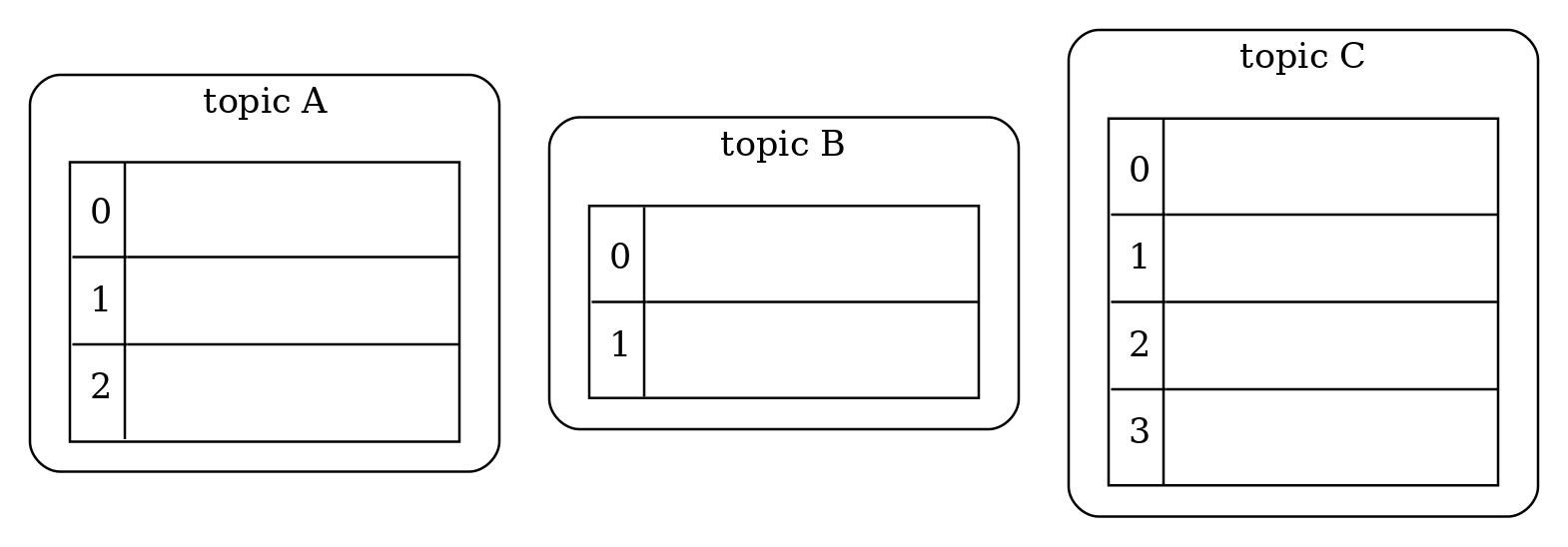

Our plan


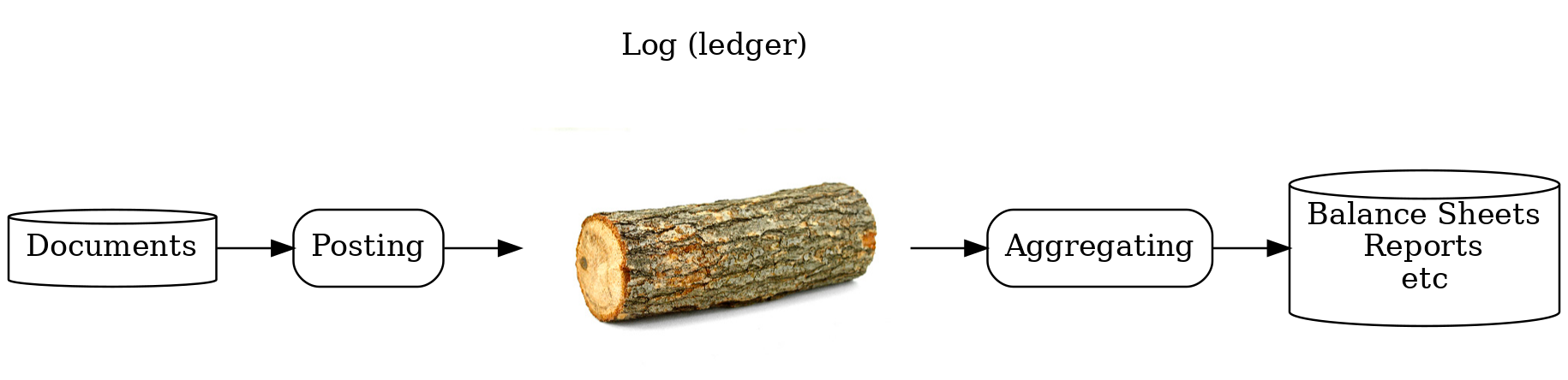

What is a log?

Append data to the end

Data cannot be changed

Read sequentially
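These three properties fit in a few lines of Java. This is a minimal in-memory sketch, not Kafka's implementation: the class name `AppendOnlyLog` is hypothetical, and the position returned by `append` plays the role Kafka calls an offset.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory append-only log: an illustration, not Kafka code.
class AppendOnlyLog<T> {
    private final List<T> entries = new ArrayList<>();

    // Append to the end and return the record's position (its "offset").
    // There is deliberately no update or delete method.
    long append(T record) {
        entries.add(record);
        return entries.size() - 1;
    }

    // Read sequentially starting from a given offset; returns an immutable copy.
    List<T> readFrom(long offset) {
        int from = (int) Math.min(offset, entries.size());
        return List.copyOf(entries.subList(from, entries.size()));
    }

    public static void main(String[] args) {
        AppendOnlyLog<String> log = new AppendOnlyLog<>();
        log.append("X: A -> B");
        log.append("X: B -> A");
        System.out.println(log.readFrom(1)); // [X: B -> A]
    }
}
```

A reader that remembers the last offset it saw can resume with `readFrom(lastOffset + 1)`, which is the idea Kafka consumers build on.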

— What is our life?


— A log!

How to automate a warehouse?

| item | bin | qty |
|---|---|---|
| X | A | 8 |
| X | B | 2 |
| Y | B | 1 |

All is well, until…

What could it be?


What are we going to do?

Only a "current snapshot"? Then there is nothing we can do.

"The database is the warehouse": simple, but it doesn’t work

How should we actually design the warehouse automation?


Warehouse Ledger

| date | item | bin | qty | description |
|---|---|---|---|---|
| 02.04.2020 | X | A | 10 | initial qty |
| 02.04.2020 | X | B | 2 | initial qty |
| 02.04.2020 | Y | B | 1 | initial qty |
| 09.04.2020 | X | A | -2 | John Doe on assignment #1 |
| 09.04.2020 | X | B | 2 | John Doe on assignment #1 |

What if we made a mistake with the accounting?

| date | item | bin | qty | description |
|---|---|---|---|---|
| 09.04.2020 | X | A | -2 | John Doe on assignment #1 |
| 09.04.2020 | X | B | 2 | John Doe on assignment #1 |
| 09.04.2020 | X | A | 2 | Assignment #1 reversal |
| 09.04.2020 | X | B | -2 | Assignment #1 reversal |
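A correction is itself a new entry: instead of editing or deleting the wrong rows, we append their negations, so the mistake and its fix both stay in the history. A minimal sketch; the `Entry` record and the `reversal` helper are hypothetical names that mirror the table columns.

```java
import java.time.LocalDate;
import java.util.ArrayList;
import java.util.List;

class LedgerReversal {
    // One ledger row; the fields mirror the table columns.
    record Entry(LocalDate date, String item, String bin, int qty, String description) {}

    // Builds compensating entries: same item and bin, opposite quantity.
    static List<Entry> reversal(List<Entry> wrong, LocalDate date, String description) {
        List<Entry> result = new ArrayList<>();
        for (Entry e : wrong) {
            result.add(new Entry(date, e.item(), e.bin(), -e.qty(), description));
        }
        return result;
    }

    public static void main(String[] args) {
        LocalDate apr9 = LocalDate.of(2020, 4, 9);
        List<Entry> ledger = new ArrayList<>(List.of(
            new Entry(apr9, "X", "A", -2, "John Doe on assignment #1"),
            new Entry(apr9, "X", "B", 2, "John Doe on assignment #1")));
        // Append the correction; the original rows are left untouched.
        ledger.addAll(reversal(ledger, apr9, "Assignment #1 reversal"));
        ledger.forEach(System.out::println);
    }
}
```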

What questions can already be answered?

How much do we have in stock of Y?

What is in bin B?

How many items did John Doe move on April 9?

What adjustments were made to the data?
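Each of these questions is a fold over the ledger. A minimal sketch using the ledger rows from the tables above; the `Entry` record and the query method names are hypothetical, and searching the free-text description is a simplification.

```java
import java.time.LocalDate;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

class LedgerQueries {
    record Entry(LocalDate date, String item, String bin, int qty, String description) {}

    // The ledger from the table: initial quantities plus John Doe's move.
    static List<Entry> sample() {
        LocalDate apr2 = LocalDate.of(2020, 4, 2), apr9 = LocalDate.of(2020, 4, 9);
        return List.of(
            new Entry(apr2, "X", "A", 10, "initial qty"),
            new Entry(apr2, "X", "B", 2, "initial qty"),
            new Entry(apr2, "Y", "B", 1, "initial qty"),
            new Entry(apr9, "X", "A", -2, "John Doe on assignment #1"),
            new Entry(apr9, "X", "B", 2, "John Doe on assignment #1"));
    }

    // How much of an item is in stock: the sum of all its movements in every bin.
    static int stockOf(List<Entry> ledger, String item) {
        return ledger.stream().filter(e -> e.item().equals(item)).mapToInt(Entry::qty).sum();
    }

    // What is in a bin: the per-item balance, omitting items whose balance is zero.
    static Map<String, Integer> contentsOf(List<Entry> ledger, String bin) {
        Map<String, Integer> balance = ledger.stream()
            .filter(e -> e.bin().equals(bin))
            .collect(Collectors.groupingBy(Entry::item, TreeMap::new, Collectors.summingInt(Entry::qty)));
        balance.values().removeIf(q -> q == 0);
        return balance;
    }

    // Entries whose description mentions some text, e.g. a worker's name or "reversal".
    static List<Entry> mentioning(List<Entry> ledger, String text) {
        return ledger.stream().filter(e -> e.description().contains(text)).toList();
    }

    public static void main(String[] args) {
        List<Entry> ledger = sample();
        System.out.println(stockOf(ledger, "Y"));                  // 1
        System.out.println(contentsOf(ledger, "B"));               // {X=4, Y=1}
        System.out.println(mentioning(ledger, "John Doe").size()); // 2
    }
}
```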

Investigating the incident

On April 9, John Doe was to move the items from A to B.

Let’s check what is actually in A.

Let’s ask John?

What requirements can be easily implemented?

The load on the shelf is limited to 100 kg

— Add the "weight" field to the Ledger!

We need to calculate the warehouse workers’ salaries

— You don’t even need to add anything.
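With a weight column, the shelf load is one more fold over the ledger, and the 100 kg rule becomes a check before a new entry is appended. A sketch under the assumption that each row carries a hypothetical per-unit weight `unitWeightKg`; the class and method names are also hypothetical.

```java
import java.util.List;

class ShelfLoad {
    static final double MAX_LOAD_KG = 100.0;

    // Ledger row extended with a hypothetical per-unit weight column.
    record Entry(String item, String bin, int qty, double unitWeightKg) {}

    // Current load of a bin: the sum of qty * unit weight over all its entries.
    static double loadOf(List<Entry> ledger, String bin) {
        return ledger.stream()
            .filter(e -> e.bin().equals(bin))
            .mapToDouble(e -> e.qty() * e.unitWeightKg())
            .sum();
    }

    // Would appending this entry push its bin over the limit?
    static boolean allowed(List<Entry> ledger, Entry next) {
        return loadOf(ledger, next.bin()) + next.qty() * next.unitWeightKg() <= MAX_LOAD_KG;
    }

    public static void main(String[] args) {
        List<Entry> ledger = List.of(new Entry("X", "A", 10, 9.0));
        System.out.println(loadOf(ledger, "A"));                         // 90.0
        System.out.println(allowed(ledger, new Entry("X", "A", 2, 9.0))); // false
    }
}
```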

Solution architecture: the log does not work alone

Intermediate conclusions

Having a log allows you to:

Add new functionality

Search for correlations of events, identify and investigate fraudulent behavior

Correct algorithmic errors and recalculate data

Our life is an append-only log

Our plan

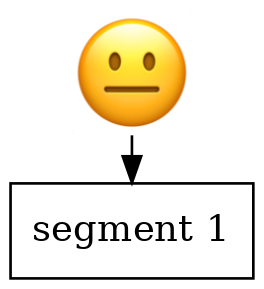


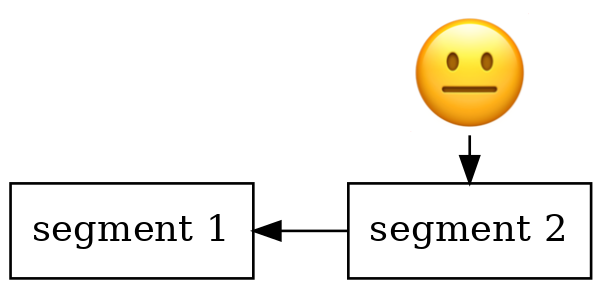


Kafka is

What you can do in Kafka

What you can’t do in Kafka

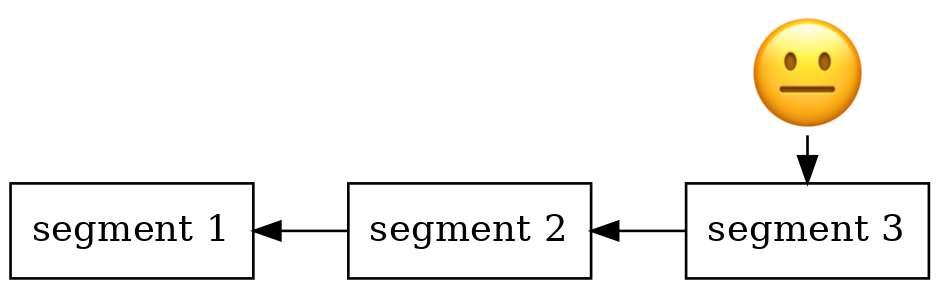

Our plan


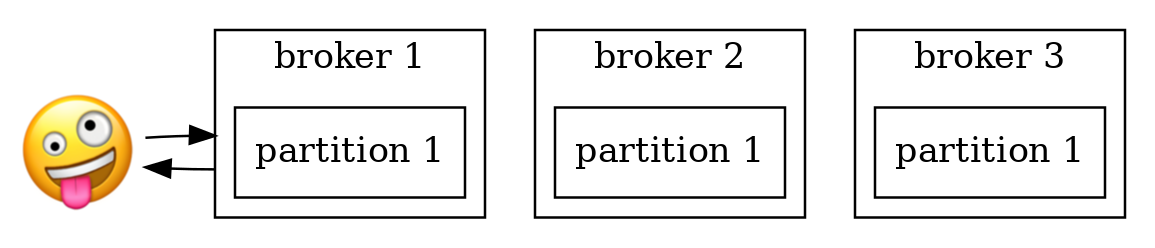

Kafka Cluster: Brokers and ZooKeeper

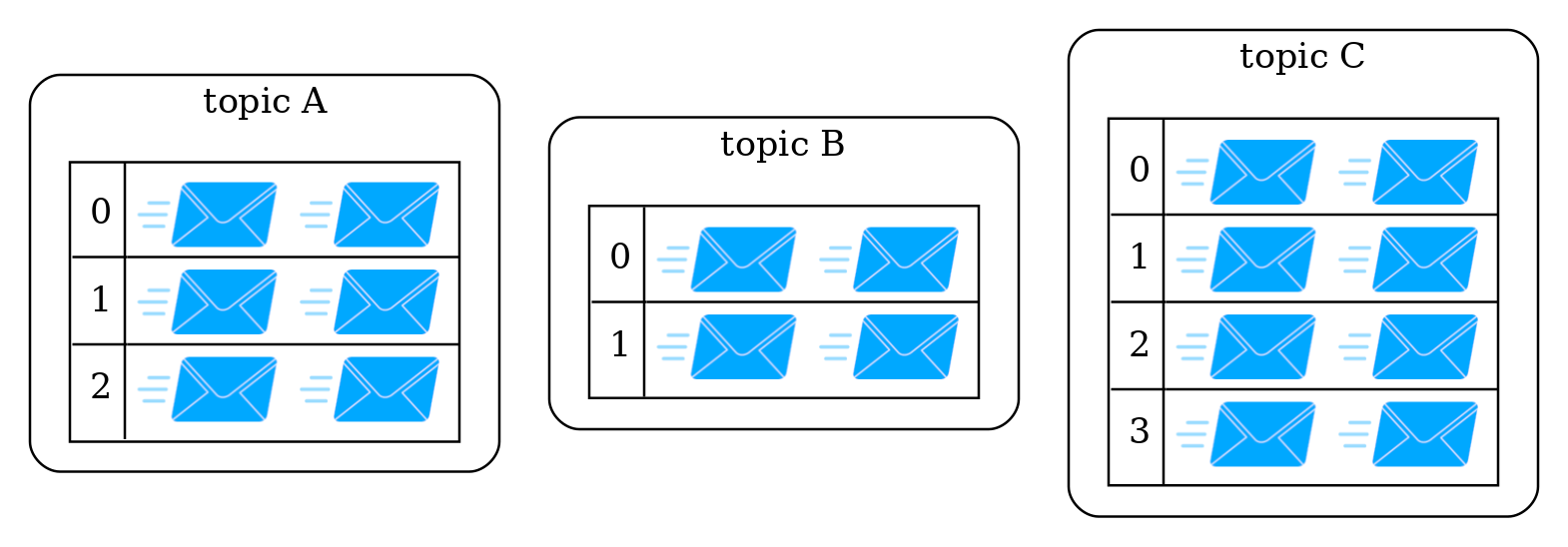

Topics, partitions and messages


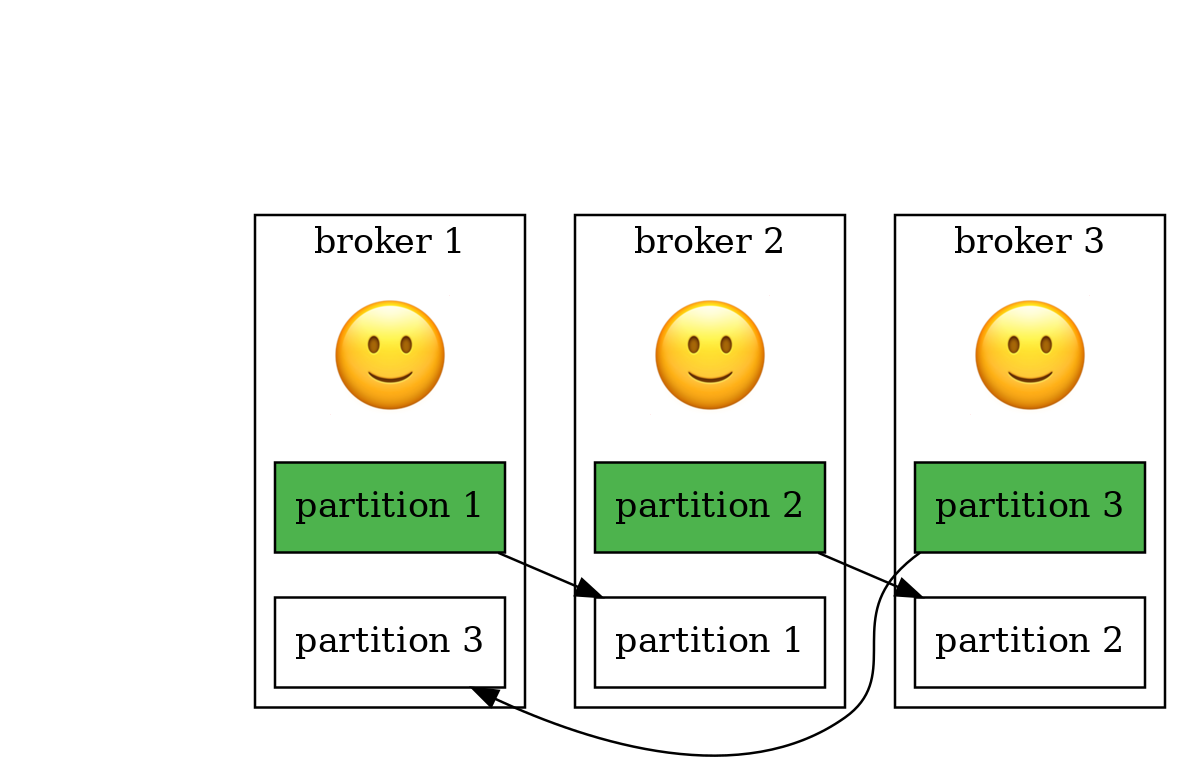

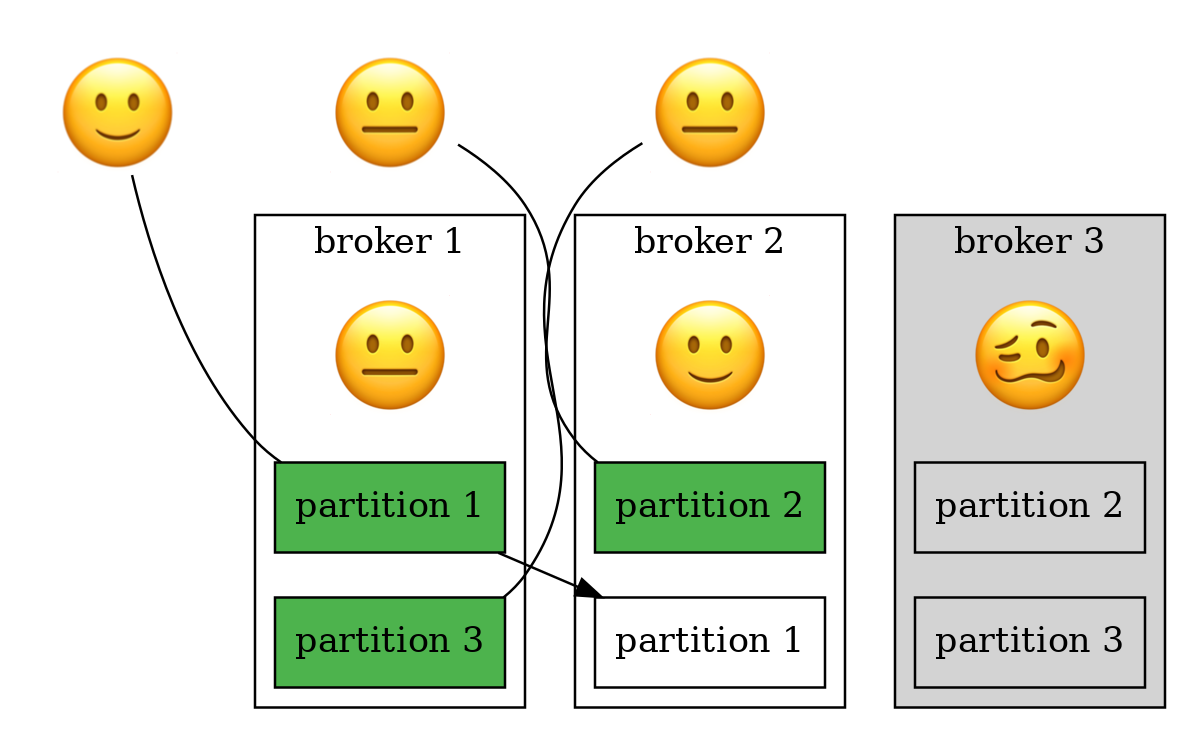

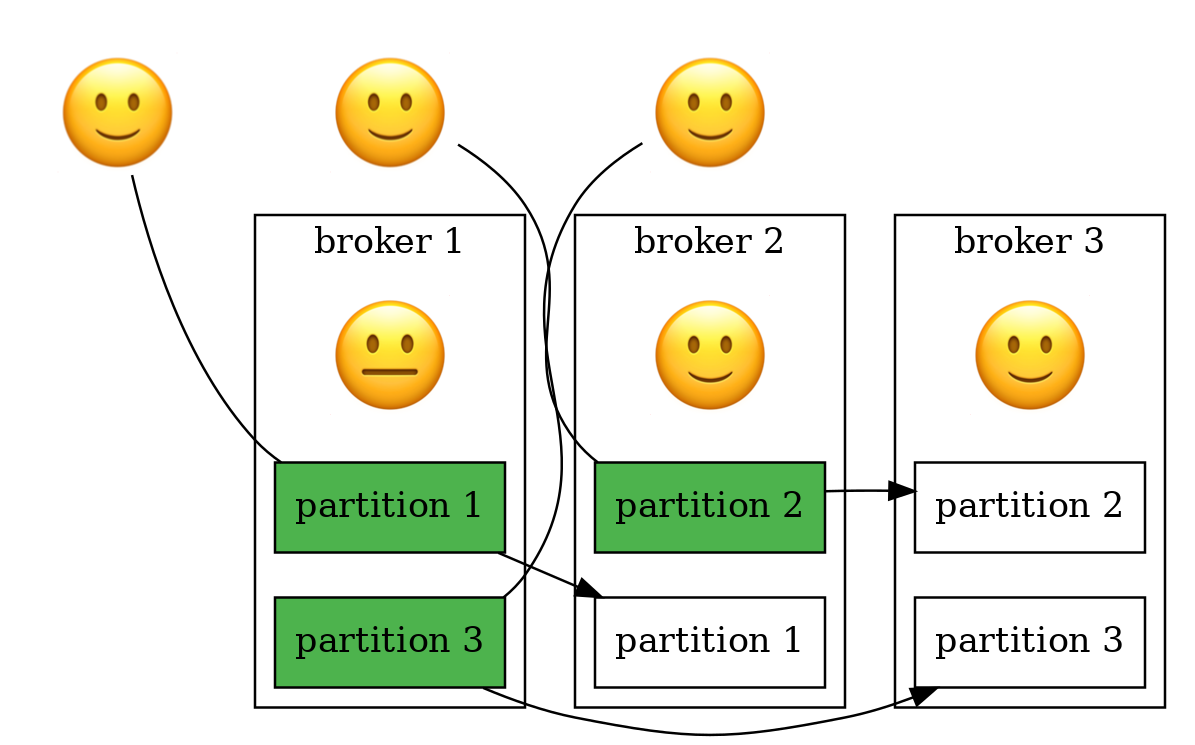

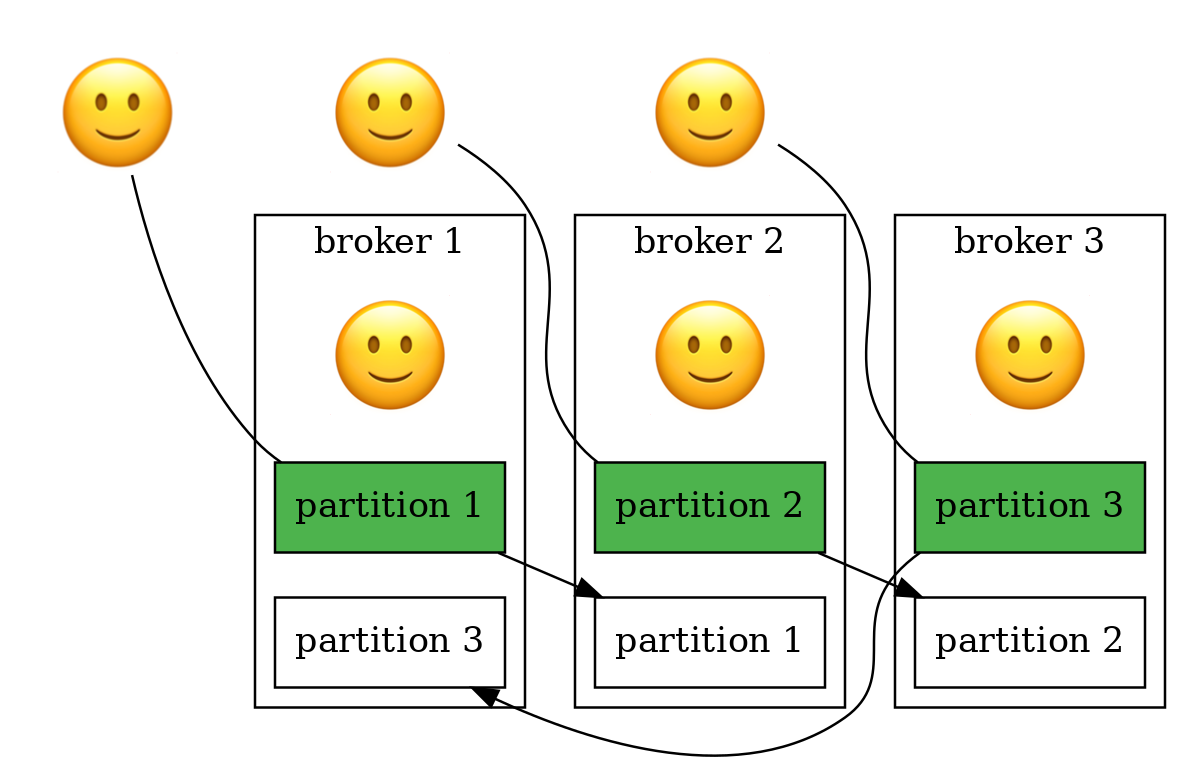

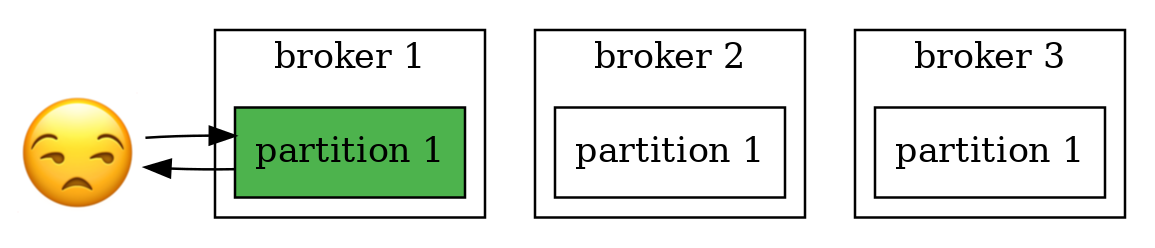

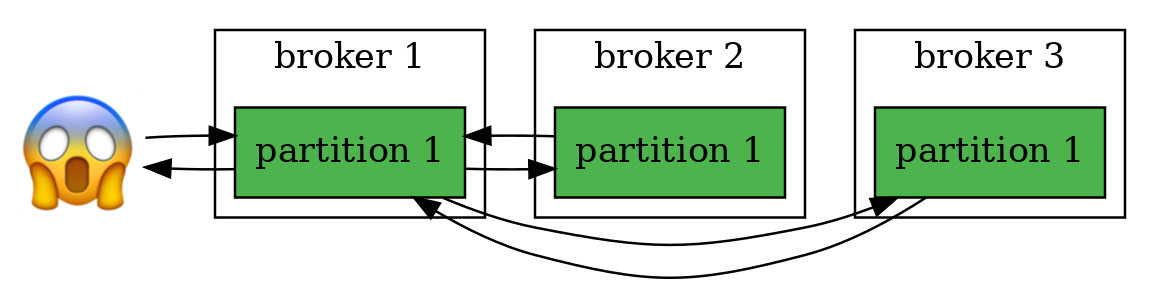

Replication of partitions


Our plan


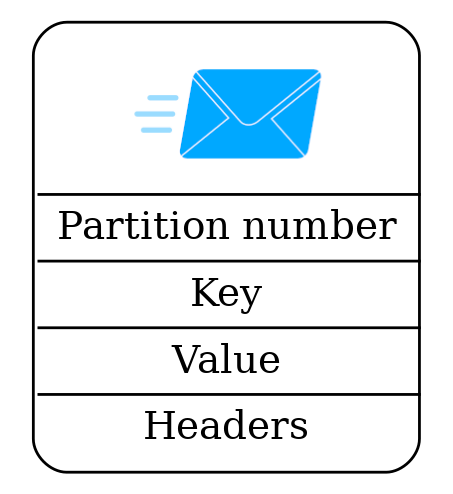

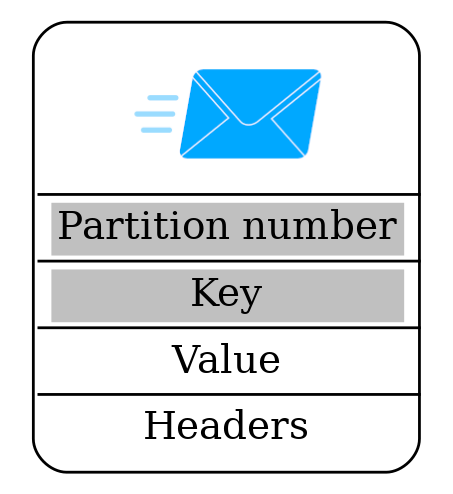

Anatomy of a message
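For a message with a key, the Java client's default partitioner hashes the serialized key bytes with murmur2 and takes the result modulo the number of partitions, so all messages with the same key land in the same partition. The sketch below is a standalone re-implementation written for illustration, modeled on `Utils.murmur2` and `Utils.toPositive` from the Kafka Java client; the class name and `partitionFor` are hypothetical, and UTF-8 key bytes assume a `StringSerializer`. In a real application, let the producer's partitioner do this.

```java
import java.nio.charset.StandardCharsets;

class KeyPartitioning {
    // 32-bit murmur2, re-implemented after org.apache.kafka.common.utils.Utils.murmur2.
    static int murmur2(byte[] data) {
        final int m = 0x5bd1e995;
        final int r = 24;
        int length = data.length;
        int h = 0x9747b28c ^ length;
        for (int i = 0; i < length / 4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        // Mix in the remaining 0-3 bytes (deliberate fall-through).
        switch (length % 4) {
            case 3: h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2: h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1: h ^= data[length & ~3] & 0xff;
                    h *= m;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    // Clears the sign bit; unlike Math.abs, this never returns a negative number.
    static int toPositive(int n) {
        return n & 0x7fffffff;
    }

    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        return toPositive(murmur2(keyBytes)) % numPartitions;
    }

    public static void main(String[] args) {
        for (String key : new String[] {"X", "Y", "X"}) {
            System.out.println(key + " -> partition " + partitionFor(key, 6));
        }
    }
}
```

Masking the sign bit matters: `Math.abs(Integer.MIN_VALUE)` is still negative, which would yield a negative partition number.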

// hash the keyBytes to choose a partition
return Utils.toPositive(Utils.murmur2(keyBytes)) % numPartitions;

Throughput vs Latency

batch.size and linger.ms
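Both are producer settings: the producer groups records per partition into batches, `batch.size` caps a batch's size in bytes, and `linger.ms` is how long the producer may wait for a batch to fill before sending it. Raising them trades latency for throughput. A config sketch; the values are illustrative assumptions rather than recommendations, the broker address is a placeholder, and the class name is hypothetical.

```java
import java.util.Properties;

class ProducerTuning {
    // Producer properties leaning towards throughput; pass them to new KafkaProducer<>(props).
    static Properties throughputOriented() {
        Properties props = new Properties();
        props.put("bootstrap.servers", "localhost:9092"); // placeholder address
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("batch.size", "65536"); // up to 64 KiB per partition batch
        props.put("linger.ms", "20");     // wait up to 20 ms for a batch to fill
        return props;
    }

    public static void main(String[] args) {
        throughputOriented().forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```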


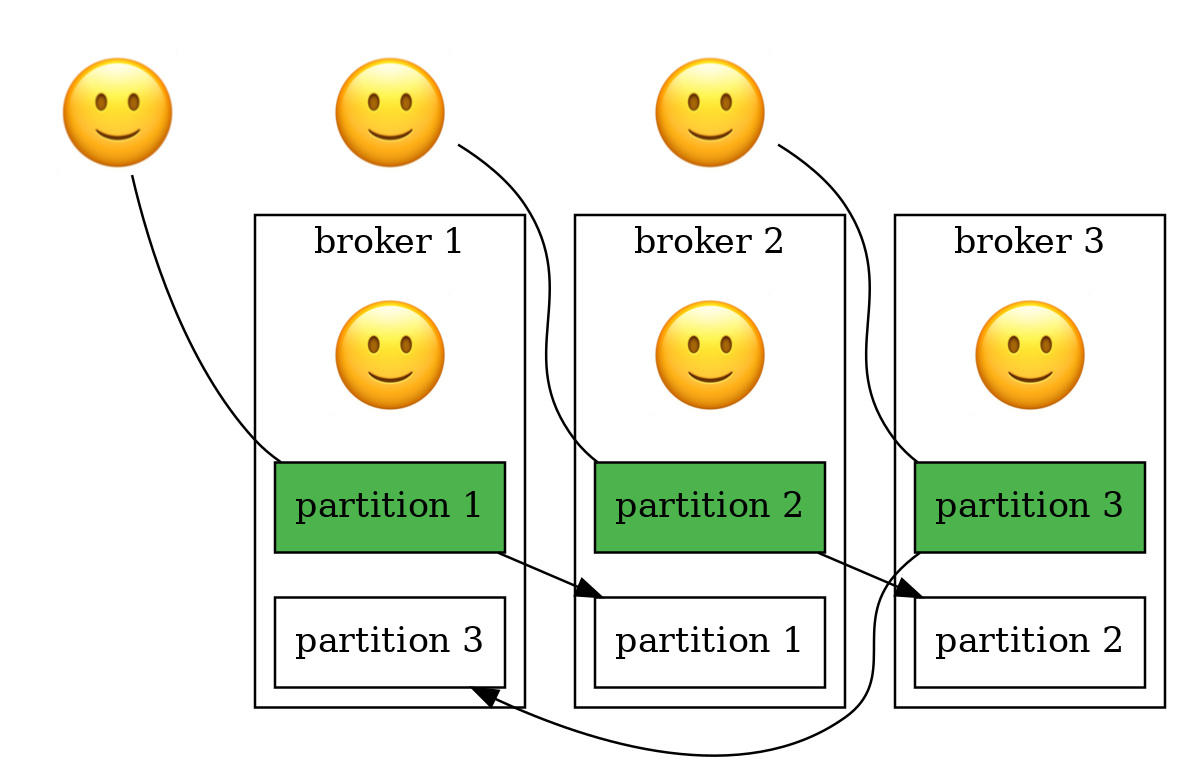

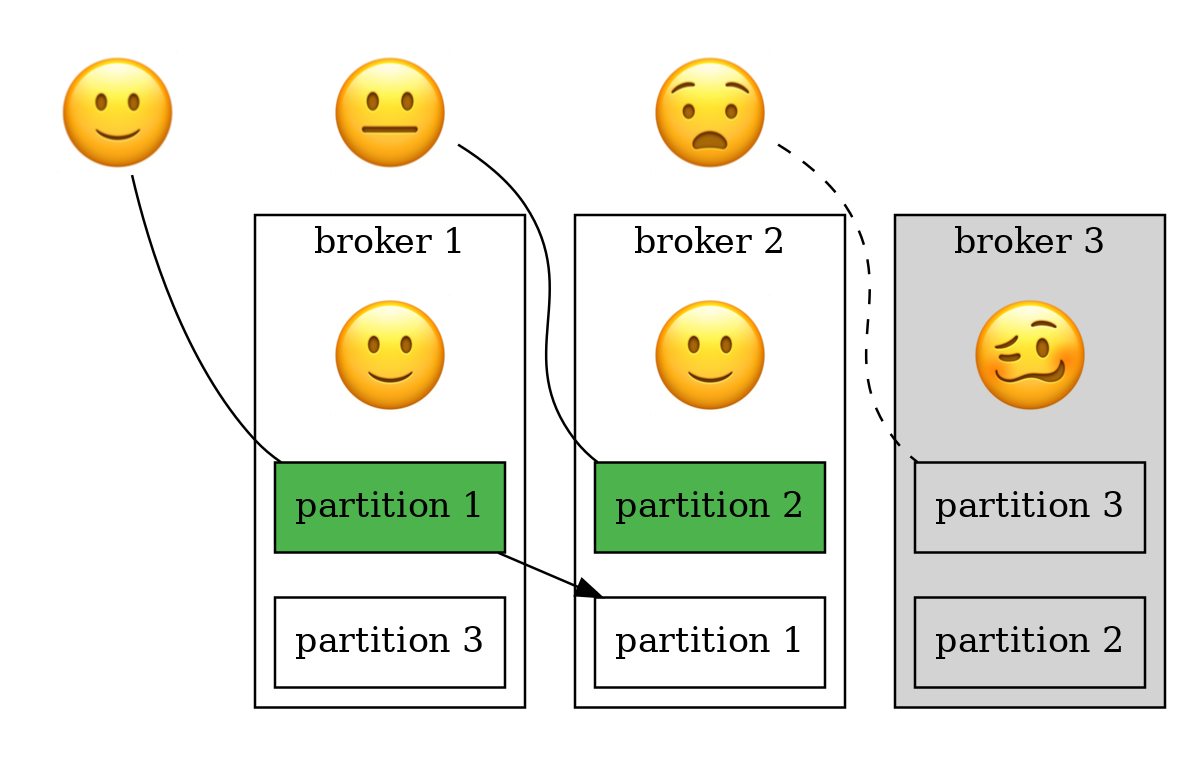

Writing to Kafka

acks = 0

Writing to Kafka

acks = 1

Writing to Kafka

acks = -1

min.insync.replicas
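`acks` is a producer setting (`0`: don't wait; `1`: wait for the leader; `-1`, also written `all`: wait for all in-sync replicas), while `min.insync.replicas` is a topic or broker setting. With `acks=all`, the broker rejects a write with a `NotEnoughReplicas` error when fewer than `min.insync.replicas` replicas are in sync. A sketch of a durable combination; the replication factor of 3 and `min.insync.replicas=2` are assumed example values, and the class and the toy `acceptsAcksAllWrite` check are hypothetical.

```java
import java.util.Map;
import java.util.Properties;

class DurableWrites {
    // Producer side: wait until every in-sync replica has the record.
    static Properties producerProps() {
        Properties props = new Properties();
        props.put("bootstrap.servers", "localhost:9092"); // placeholder address
        props.put("acks", "all"); // same as acks=-1
        return props;
    }

    // Topic side, e.g. applied when creating a topic with replication factor 3.
    static Map<String, String> topicConfig() {
        return Map.of("min.insync.replicas", "2");
    }

    // Toy version of the broker's rule for acks=all writes.
    static boolean acceptsAcksAllWrite(int inSyncReplicas, int minInsyncReplicas) {
        return inSyncReplicas >= minInsyncReplicas;
    }

    public static void main(String[] args) {
        System.out.println(acceptsAcksAllWrite(3, 2)); // true: all replicas healthy
        System.out.println(acceptsAcksAllWrite(1, 2)); // false: only the leader is left
    }
}
```

With these values, one broker can fail without losing acknowledged writes or blocking producers; if two fail, `acks=all` writes are refused rather than silently under-replicated.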

Our plan


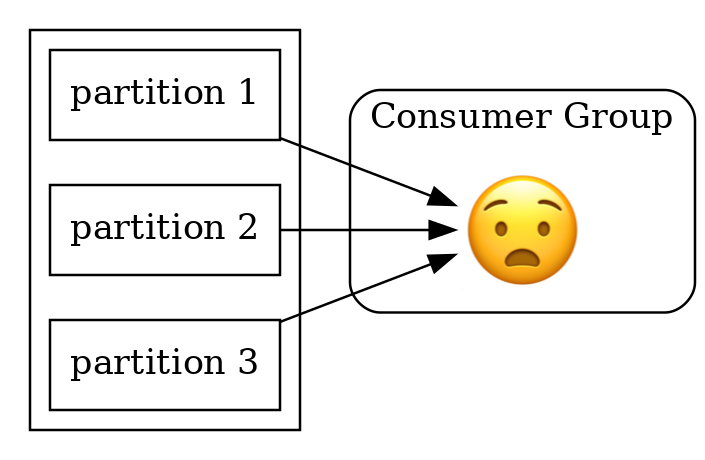

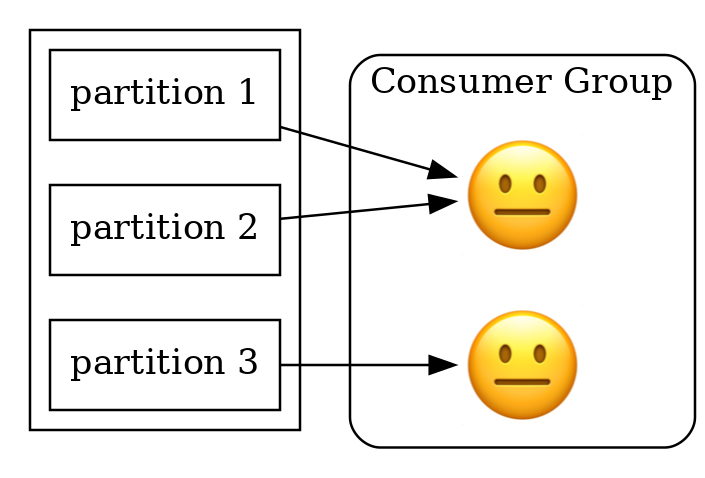

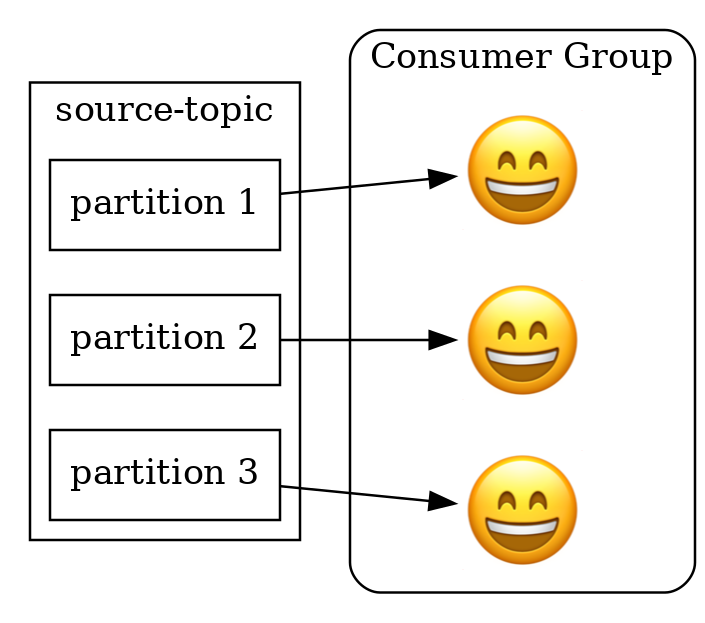

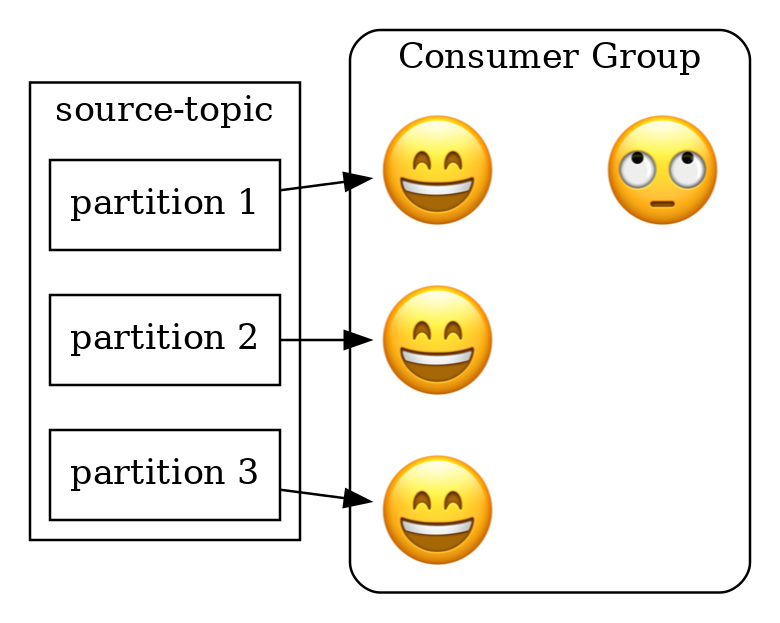

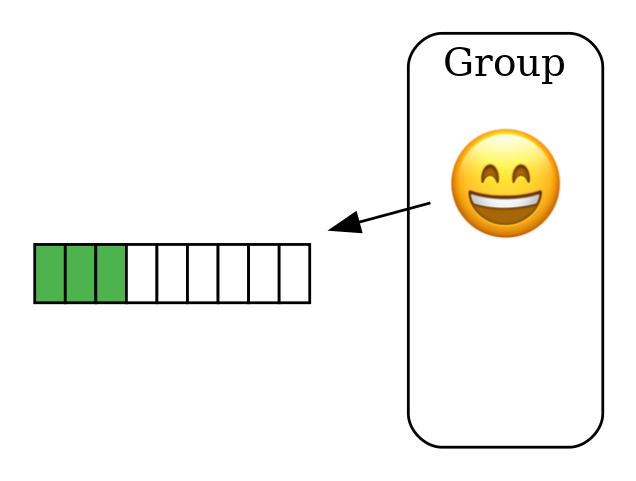

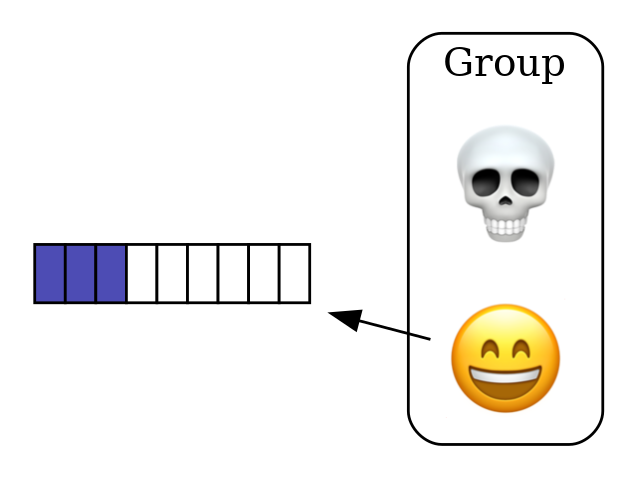

Reading from Kafka


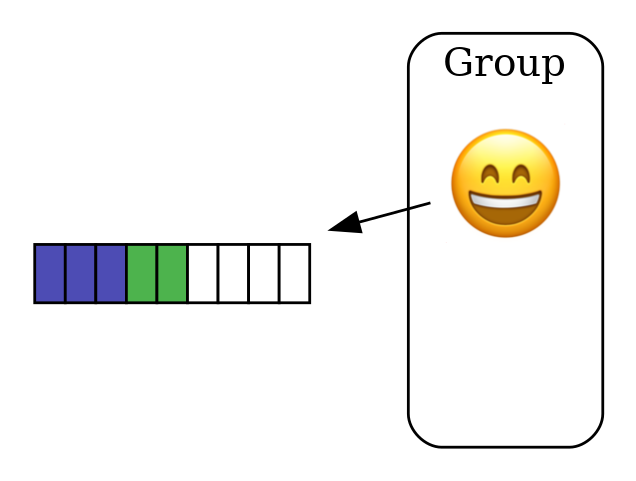

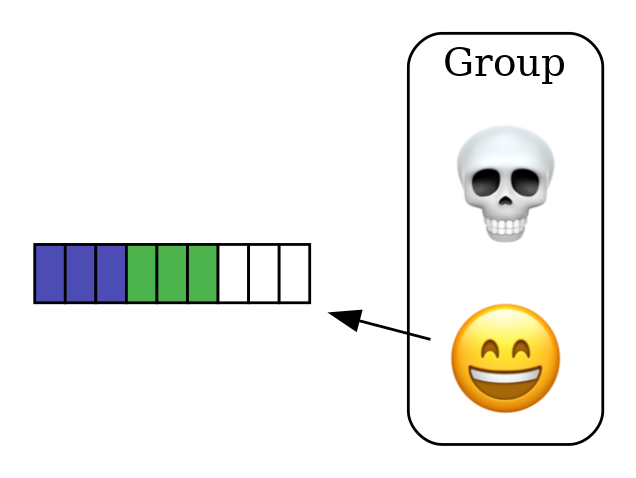

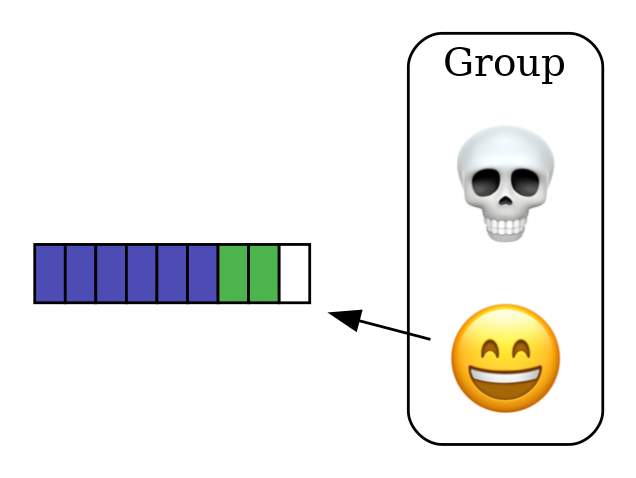

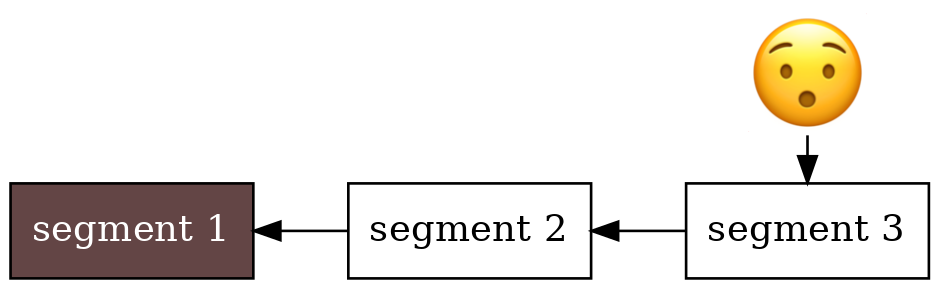

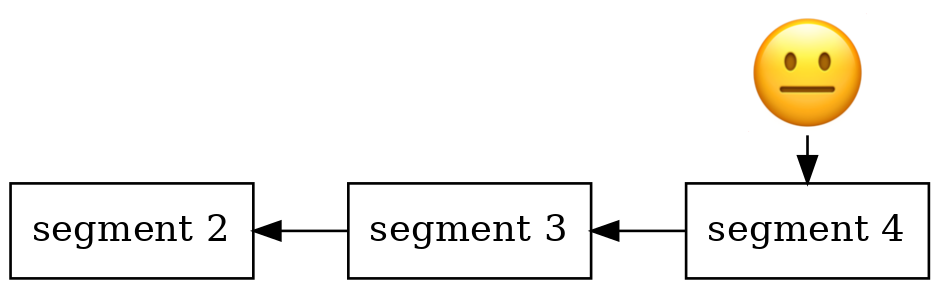

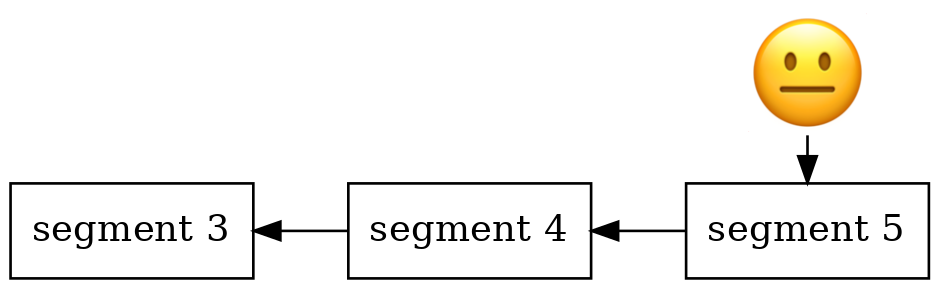

Offset Commit
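A consumer group stores, per partition, the offset of the next message to read. After a restart, reading resumes from the last committed offset, so anything processed but not yet committed is read again (at-least-once). The toy model below only simulates this bookkeeping in memory; it is not the Kafka consumer API, and every name in it is hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class OffsetCommitModel {
    // Committed offsets keyed by "group/partition" (stands in for Kafka's offset storage).
    final Map<String, Long> committed = new HashMap<>();

    // Process up to maxRecords starting at the committed offset.
    // commit == false simulates a crash before the commit.
    List<String> consume(List<String> partition, String groupPartition, int maxRecords, boolean commit) {
        long offset = committed.getOrDefault(groupPartition, 0L);
        List<String> processed = new ArrayList<>();
        while (offset < partition.size() && processed.size() < maxRecords) {
            processed.add(partition.get((int) offset));
            offset++;
        }
        if (commit) {
            committed.put(groupPartition, offset); // offset of the next record to read
        }
        return processed;
    }

    public static void main(String[] args) {
        List<String> partition = List.of("m0", "m1", "m2", "m3");
        OffsetCommitModel model = new OffsetCommitModel();
        System.out.println(model.consume(partition, "g/0", 2, true));  // [m0, m1]
        System.out.println(model.consume(partition, "g/0", 2, false)); // [m2, m3], then crash
        System.out.println(model.consume(partition, "g/0", 2, true));  // [m2, m3] again
    }
}
```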


Our plan


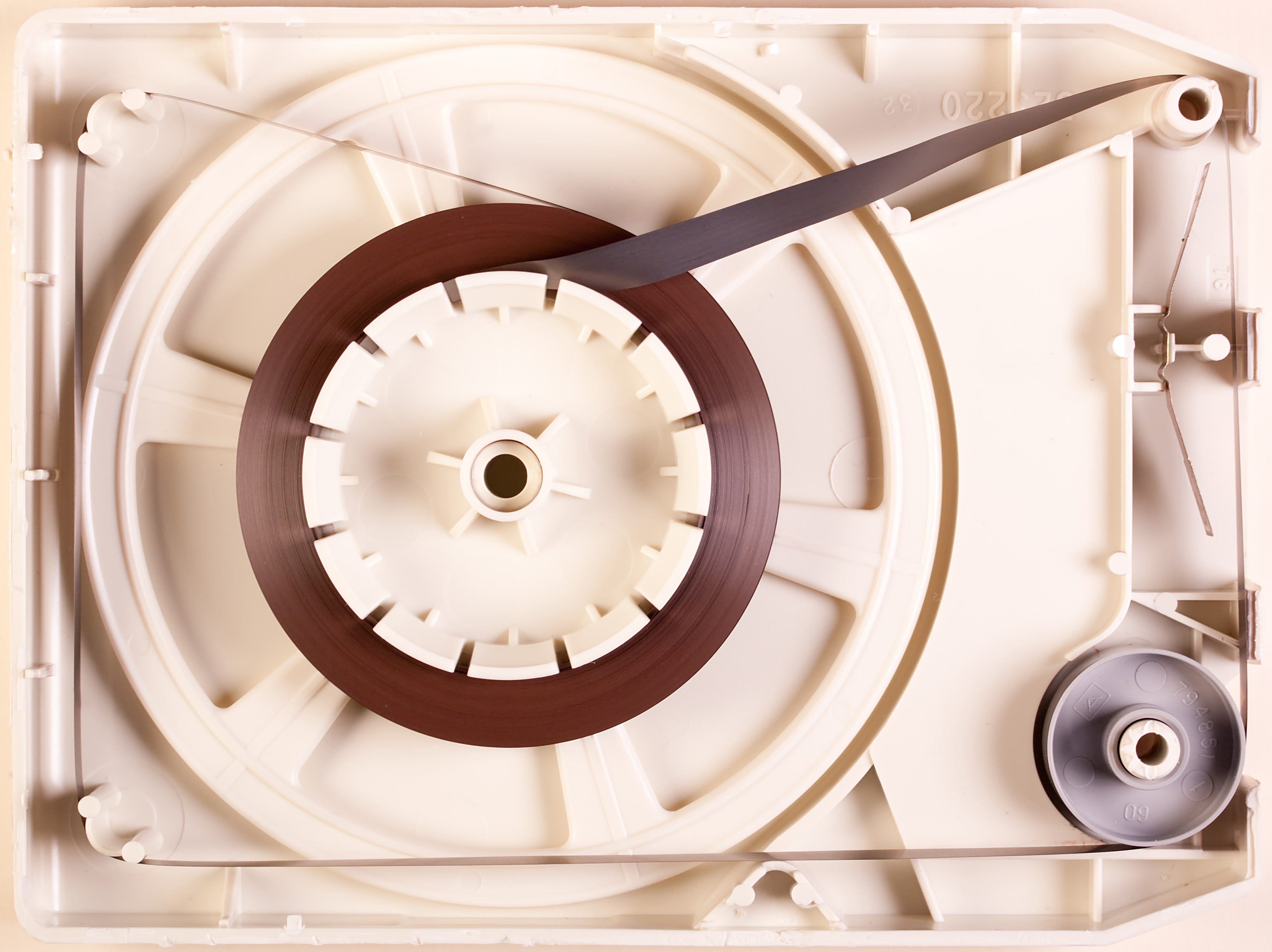

How Retention works


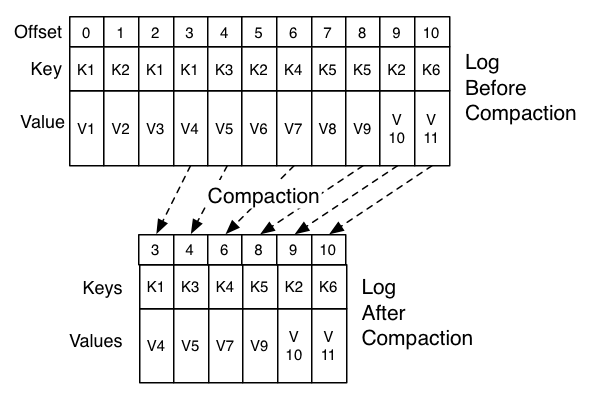

Topic compaction
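With `cleanup.policy=compact`, Kafka keeps at least the latest value for each key instead of deleting messages by age, and a message with a null value (a tombstone) eventually removes its key. The toy function below shows only the end result of compaction on an in-memory list; real compaction runs in the background, skips the active segment, keeps tombstones for a while before dropping them, and leaves surviving messages at their original offsets. The class name and the "item@bin" keys are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class CompactionModel {
    // A message at a given offset; a null value is a tombstone.
    record Message(long offset, String key, String value) {}

    // Keep only the latest message per key, in offset order; drop keys whose latest value is a tombstone.
    static List<Message> compact(List<Message> log) {
        Map<String, Long> latestOffset = new HashMap<>();
        for (Message m : log) {
            latestOffset.put(m.key(), m.offset());
        }
        List<Message> result = new ArrayList<>();
        for (Message m : log) {
            boolean isLatest = latestOffset.get(m.key()).longValue() == m.offset();
            if (isLatest && m.value() != null) {
                result.add(m); // offsets are preserved, so gaps appear
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<Message> log = List.of(
            new Message(0, "X@A", "10"),
            new Message(1, "X@B", "2"),
            new Message(2, "X@A", "8"),
            new Message(3, "X@B", null)); // tombstone: bin B no longer holds X
        System.out.println(compact(log)); // [Message[offset=2, key=X@A, value=8]]
    }
}
```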

Our plan


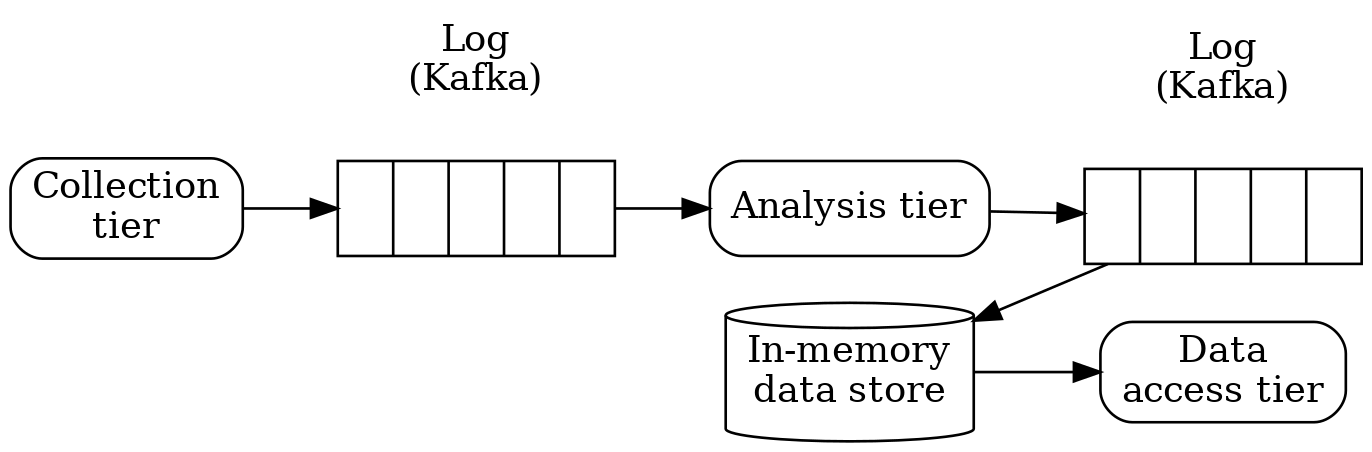

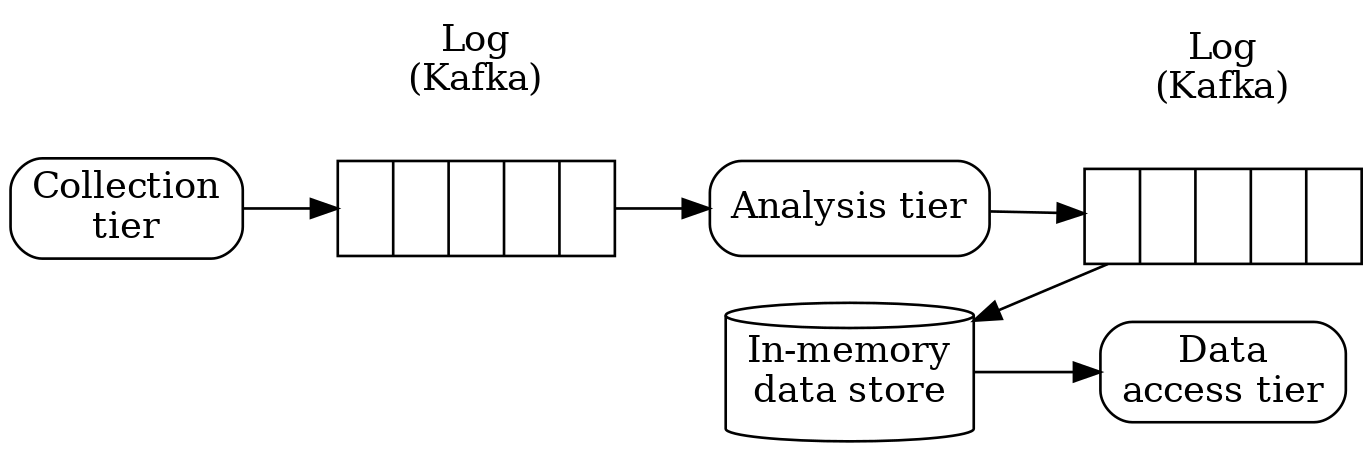

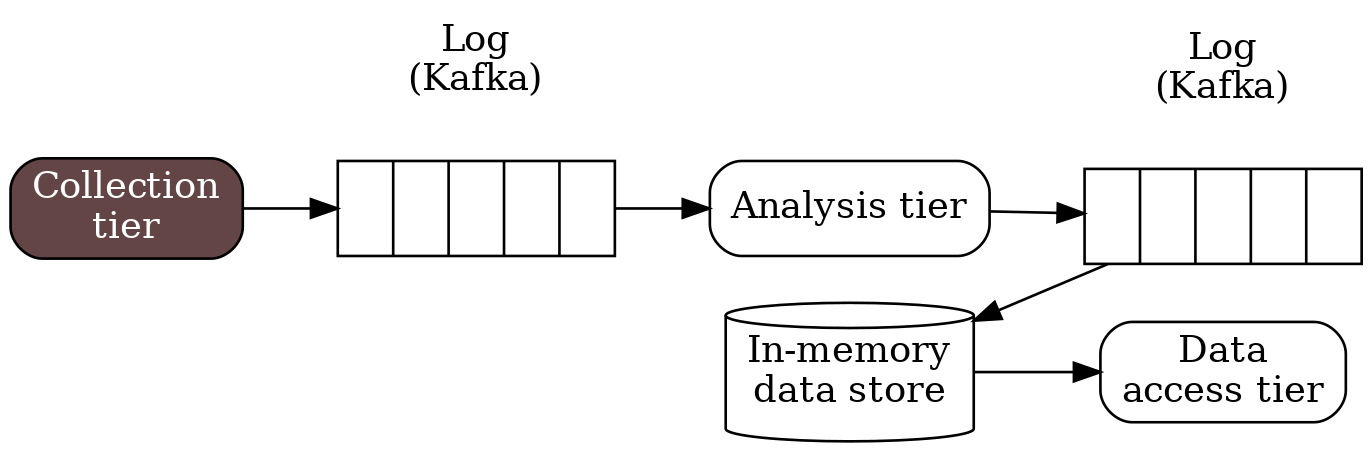

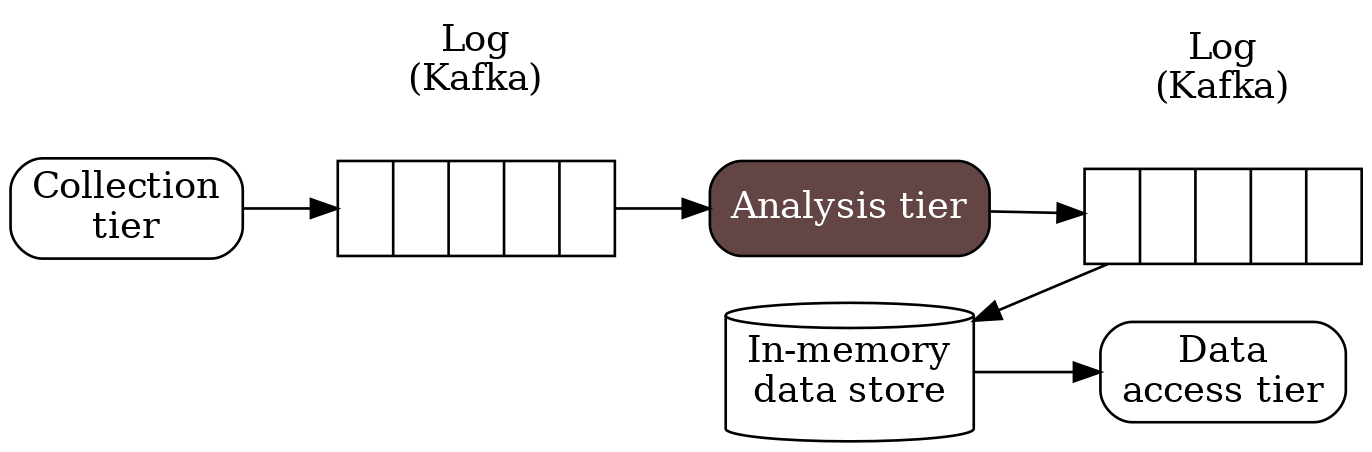

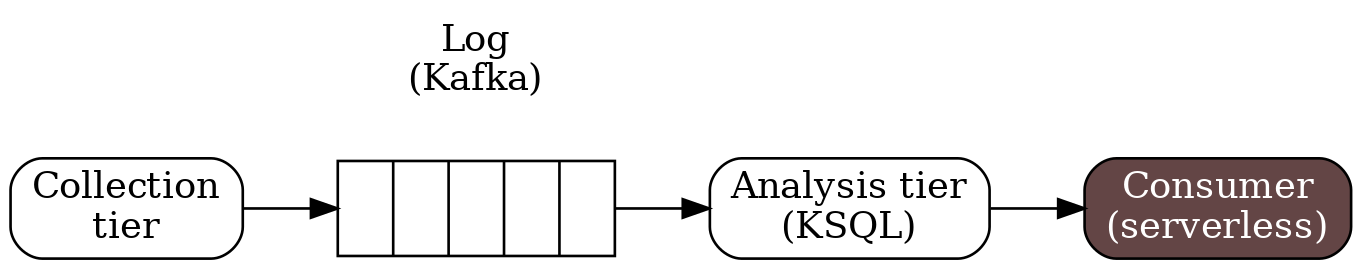

Stream data processing architecture

Existing stream processing frameworks

When I am asked which streaming framework to use


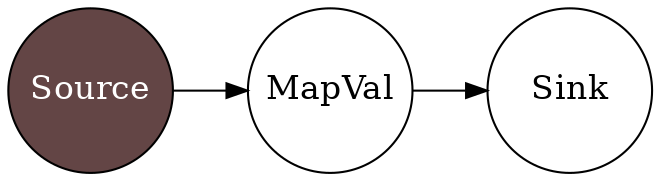

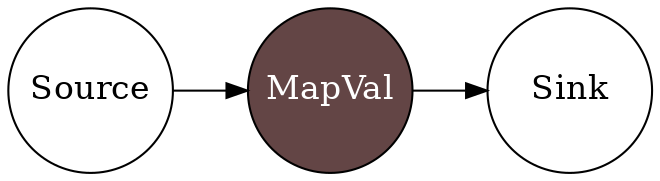

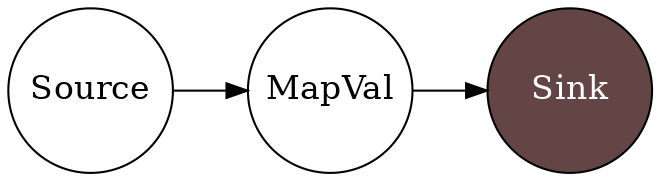

Stateless Transformation

KStream<String, String> stream = streamsBuilder.stream(
    SRC_TOPIC, Consumed.with(Serdes.String(), Serdes.String()));

KStream<String, String> upperCasedStream =
    stream.mapValues(String::toUpperCase);

upperCasedStream.to(SINK_TOPIC,
    Produced.with(Serdes.String(), Serdes.String()));

Three lines of code, and what’s the big deal?

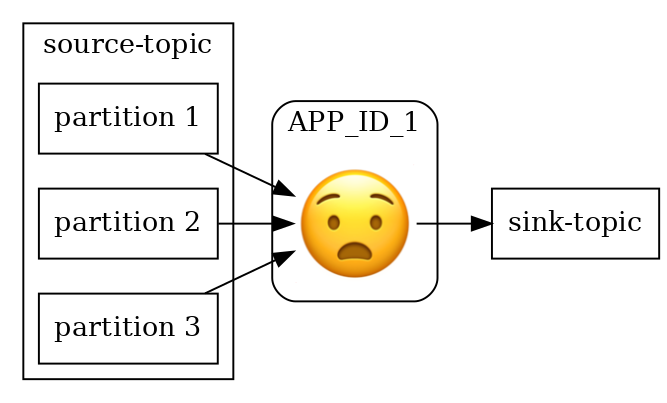

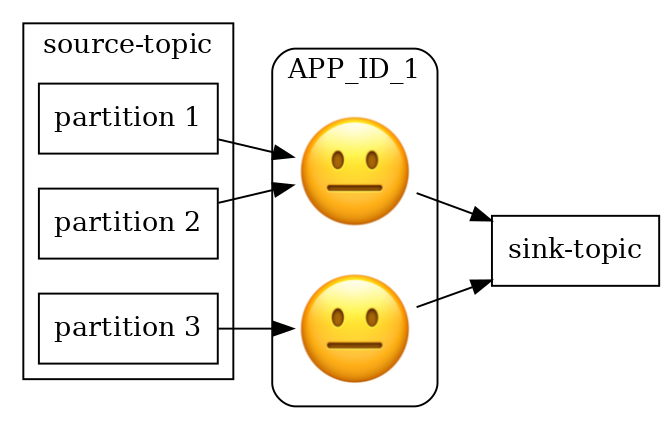

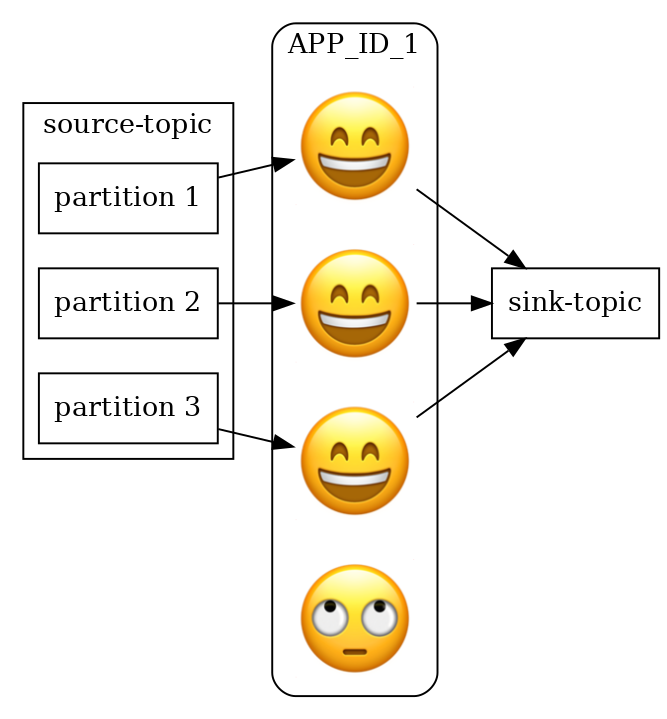

More messages per second? — more instances with the same 'application.id'!

Adding nodes

Limited only by the number of partitions
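Instances that share an `application.id` form one consumer group and split the input partitions among themselves, so once there are as many instances as partitions, extra instances sit idle. A toy round-robin assignment showing that limit; Kafka's real assignors are more elaborate, and the class and function here are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class AssignmentModel {
    // For each instance, the list of partitions it reads (round-robin).
    static List<List<Integer>> assign(int partitions, int instances) {
        List<List<Integer>> result = new ArrayList<>();
        for (int i = 0; i < instances; i++) {
            result.add(new ArrayList<>());
        }
        for (int p = 0; p < partitions; p++) {
            result.get(p % instances).add(p);
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(assign(4, 2)); // [[0, 2], [1, 3]]
        System.out.println(assign(4, 6)); // [[0], [1], [2], [3], [], []]: two idle instances
    }
}
```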

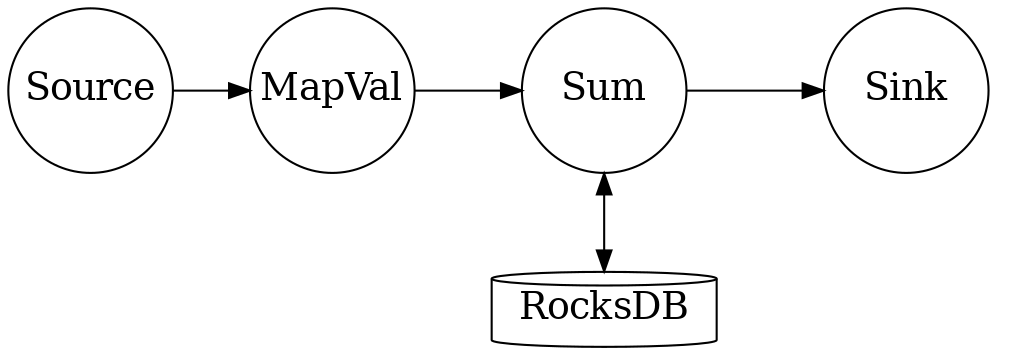

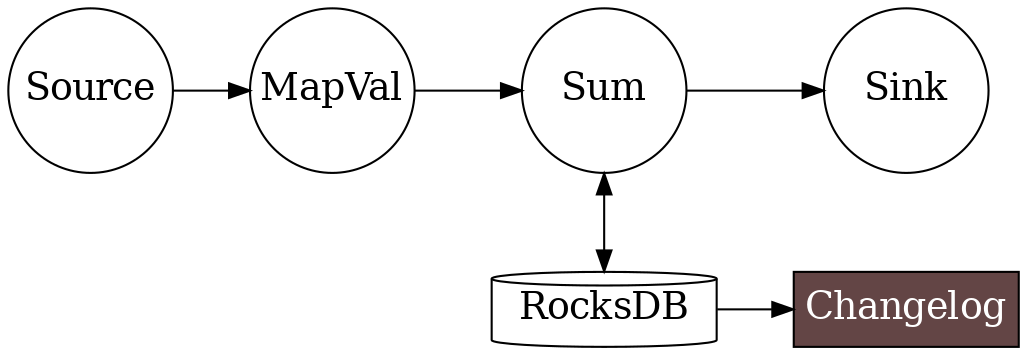

Magic of Stateful Transformation

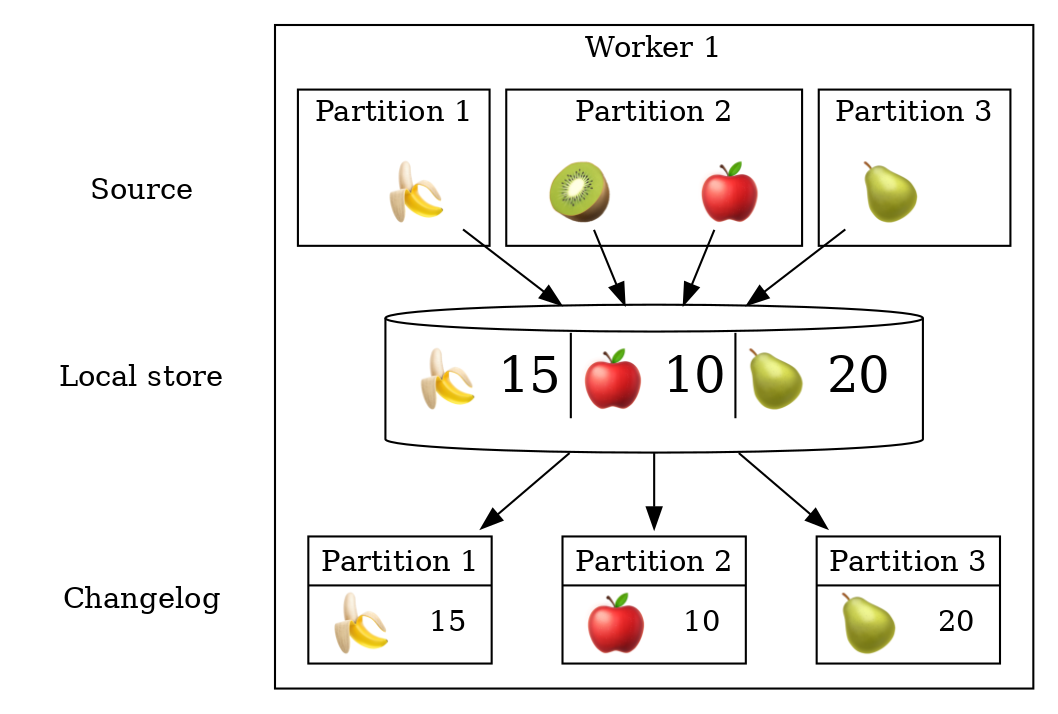

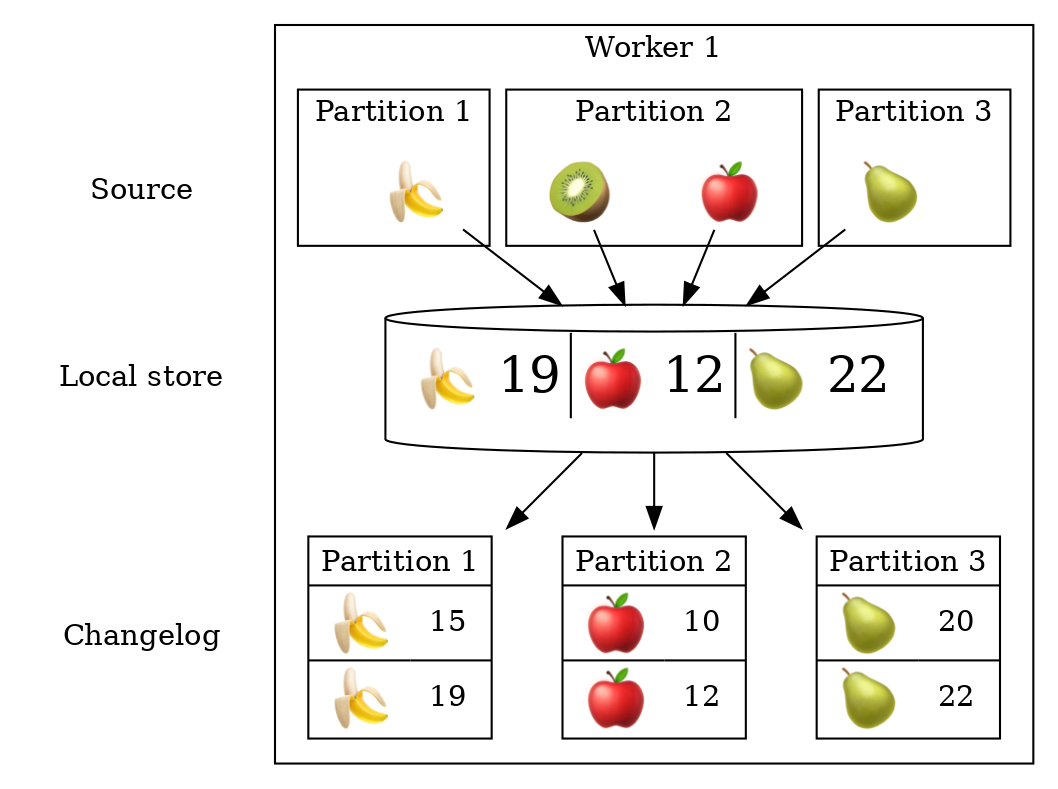

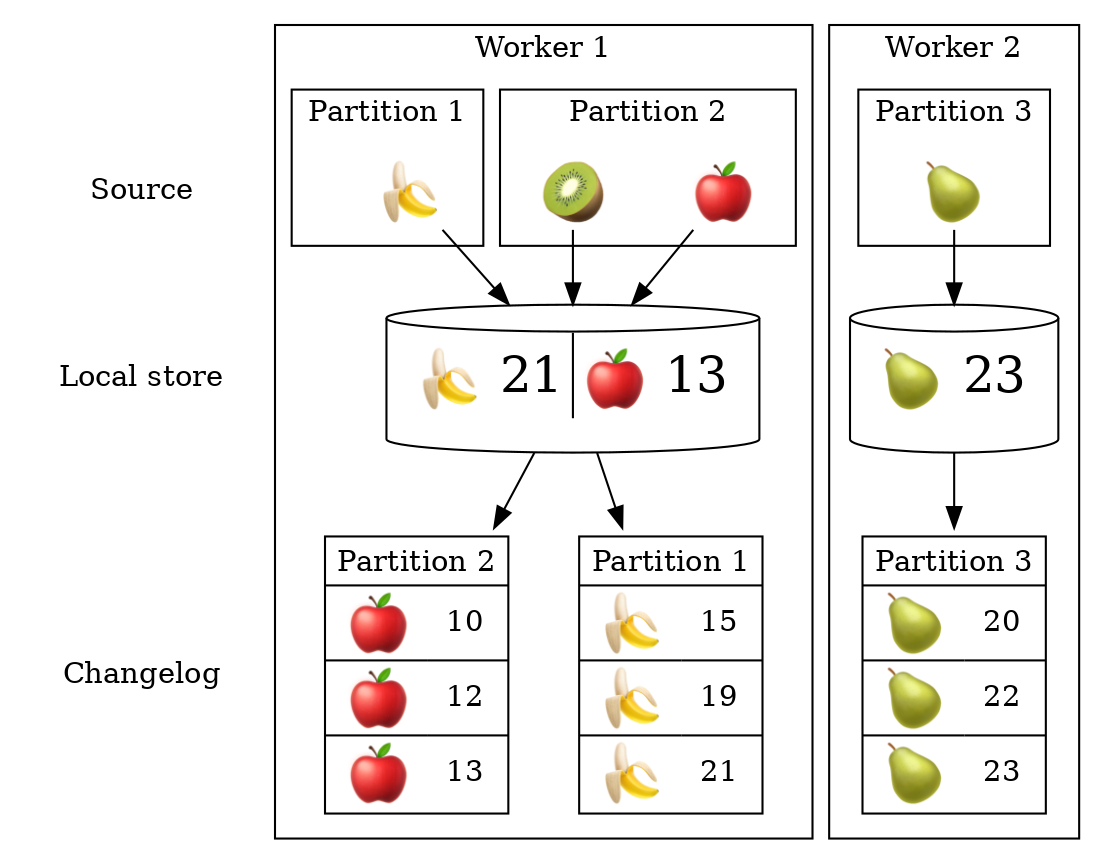

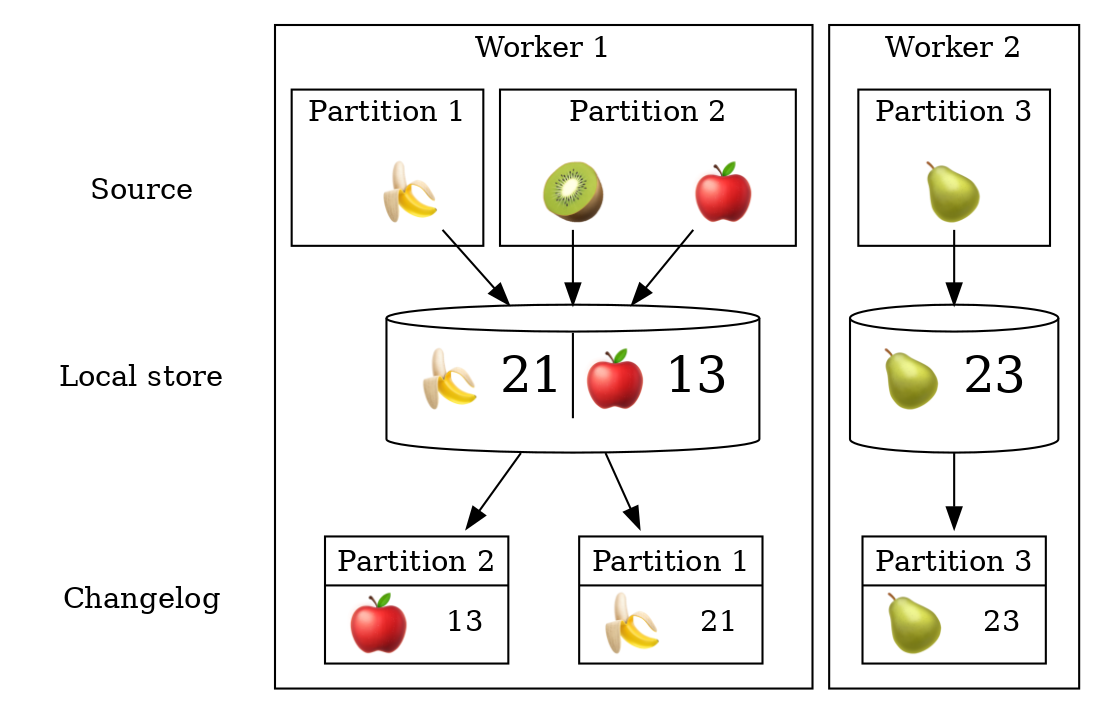

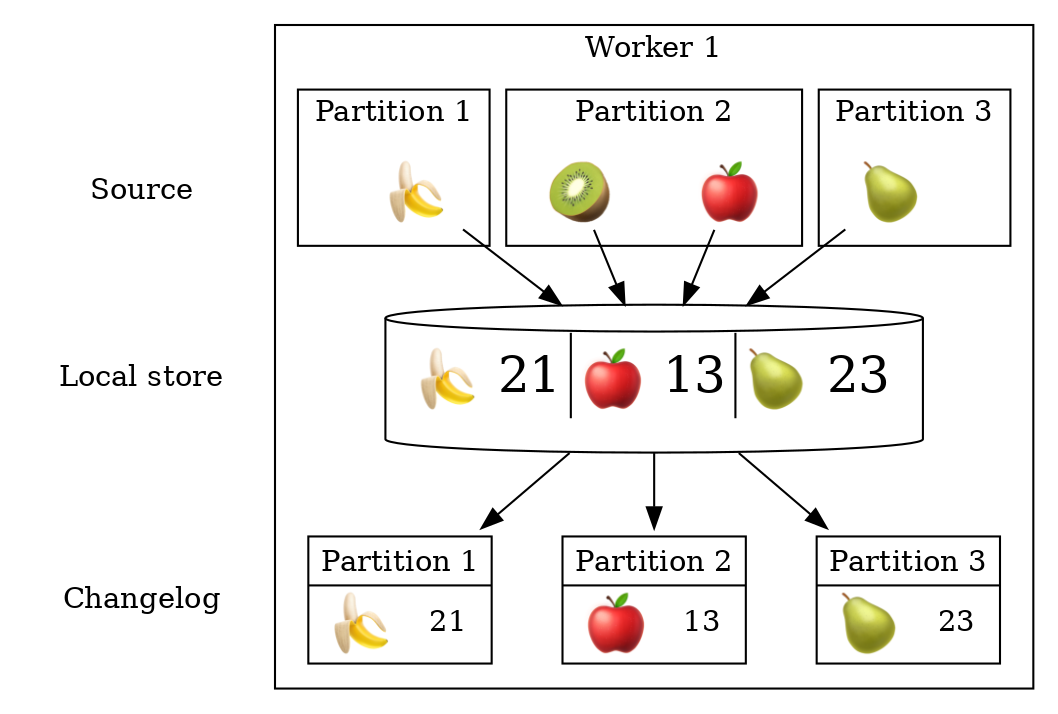

Changes are replicated to a topic!
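A stateful step keeps a local key-value store, and every update to it is also written to a changelog topic (a compacted topic); after a failure, or when a partition moves to another instance, the store is rebuilt by replaying the changelog. In memory, the idea looks roughly like this; the class is a hypothetical toy, not the Kafka Streams state store API.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ChangelogModel {
    record Change(String key, long value) {}

    final Map<String, Long> store = new HashMap<>(); // local state
    final List<Change> changelog;                    // stands in for the changelog topic

    ChangelogModel(List<Change> changelog) {
        this.changelog = changelog;
    }

    // Count an event: update the local store and append the new value to the changelog.
    void count(String key) {
        long updated = store.getOrDefault(key, 0L) + 1;
        store.put(key, updated);
        changelog.add(new Change(key, updated));
    }

    // Rebuild a fresh store from the changelog: the last value per key wins.
    static Map<String, Long> restore(List<Change> changelog) {
        Map<String, Long> restored = new HashMap<>();
        for (Change c : changelog) {
            restored.put(c.key(), c.value());
        }
        return restored;
    }

    public static void main(String[] args) {
        List<Change> topic = new ArrayList<>();
        ChangelogModel instance = new ChangelogModel(topic);
        for (String item : List.of("X", "Y", "X")) {
            instance.count(item);
        }
        // The instance dies; a new one restores the same state from the changelog.
        System.out.println(restore(topic).equals(instance.store)); // true
    }
}
```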

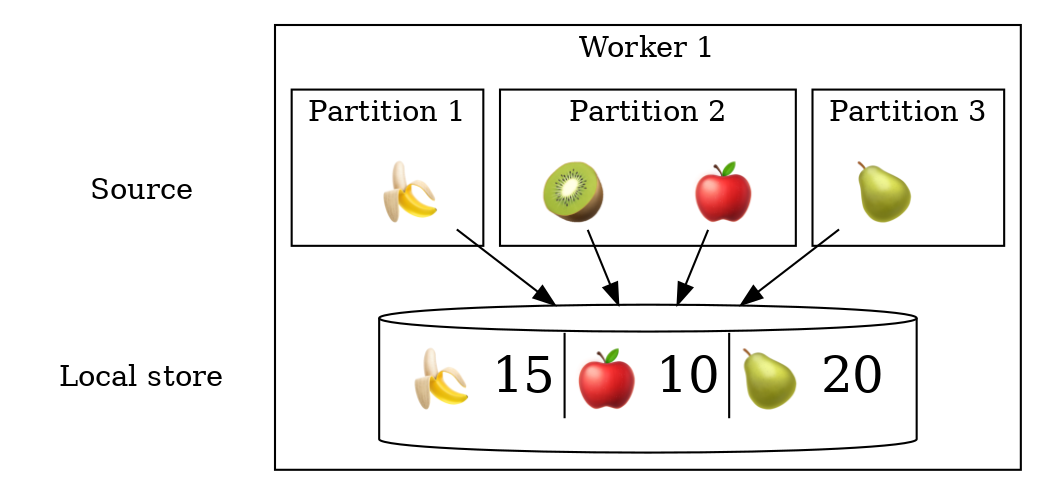

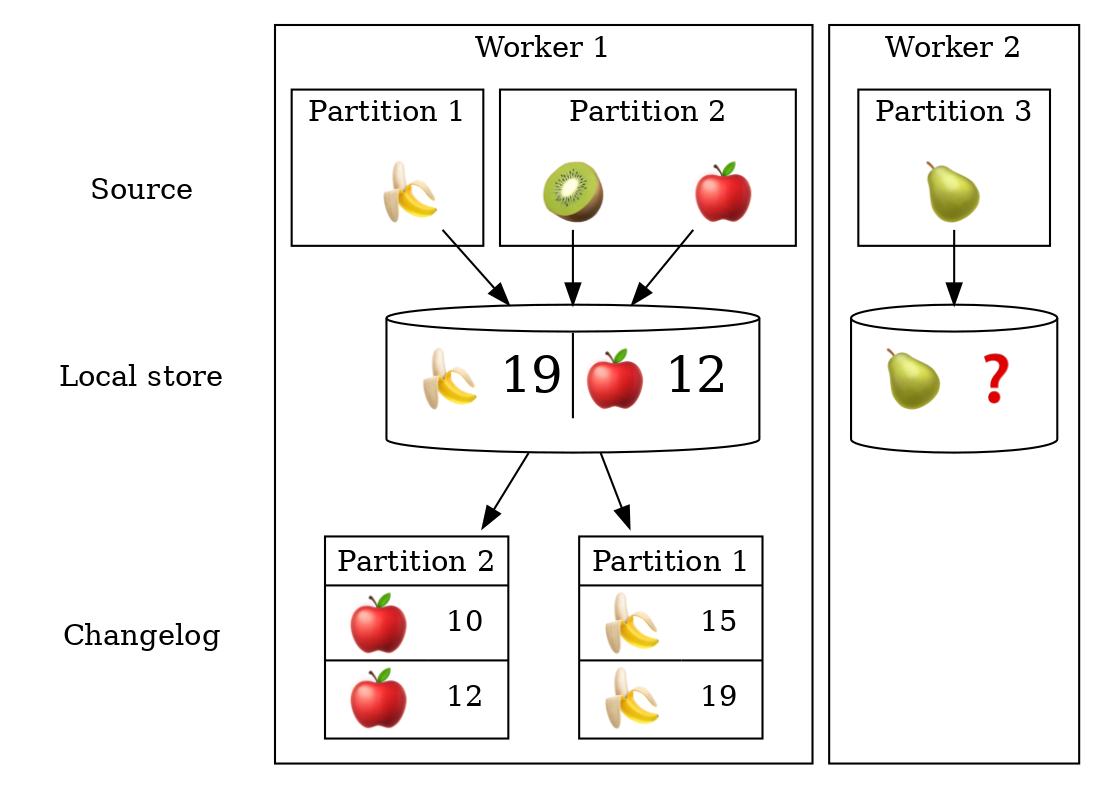

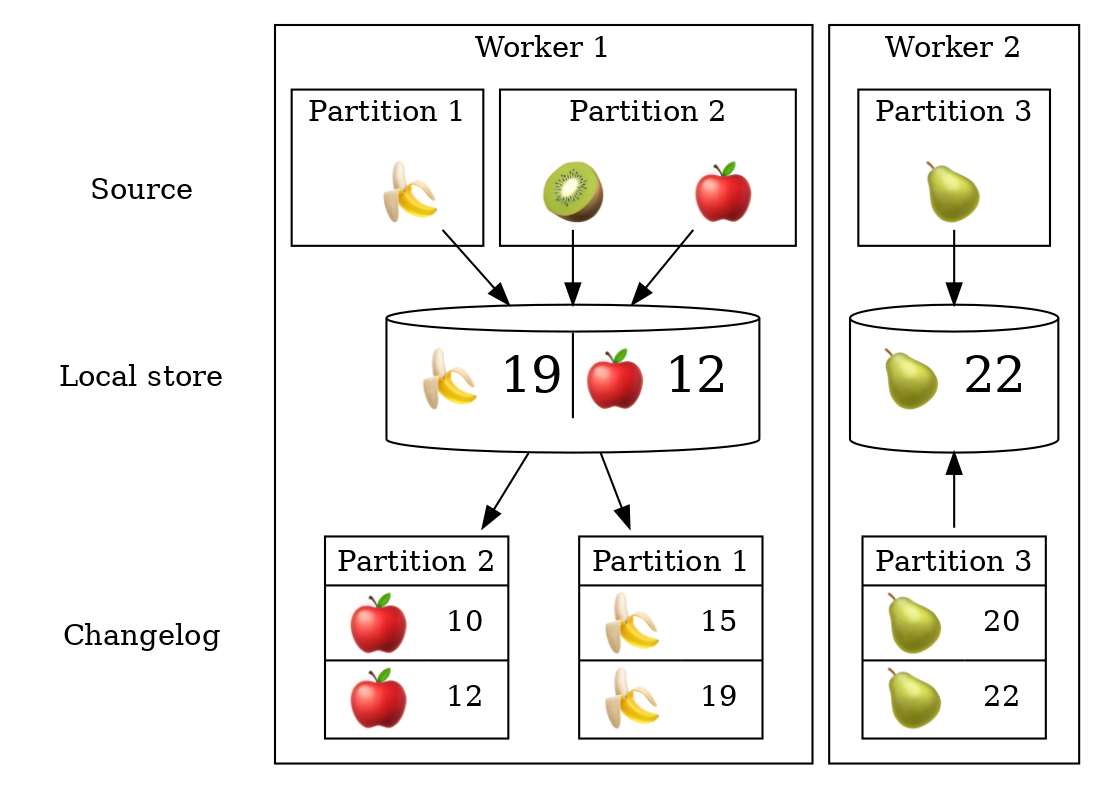

Partitioning and local state


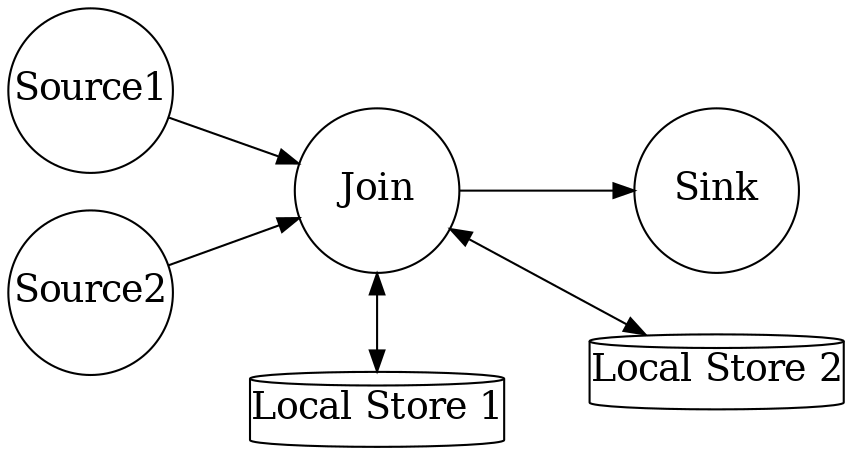

What else can streams do?

Join sources!

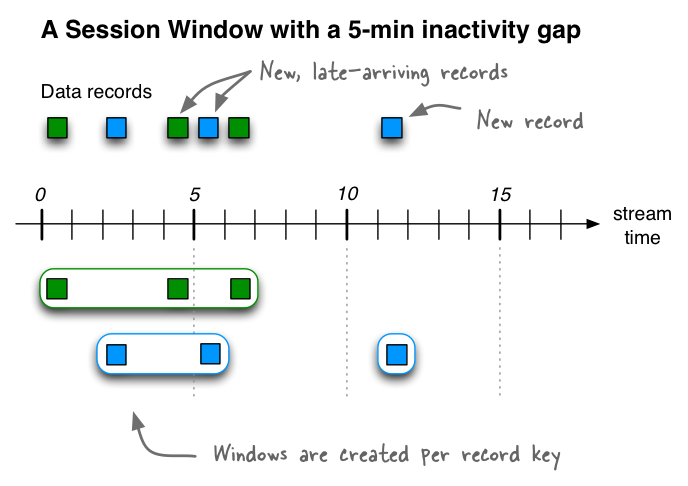

Aggregate data in time windows

![tumbling window](tumbling-window.png)

Source: Kafka Streams in Action
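Tumbling windows have a fixed size and do not overlap, so each event belongs to exactly one window, and the window's start can be computed from the event timestamp. A minimal sketch assuming windows aligned to the epoch and half-open intervals `[start, start + size)`; the class and method names are hypothetical.

```java
import java.util.Map;
import java.util.TreeMap;

class TumblingWindows {
    // Start of the window containing the timestamp.
    static long windowStart(long timestampMs, long sizeMs) {
        return timestampMs - Math.floorMod(timestampMs, sizeMs);
    }

    // Count events per window, keyed by window start.
    static Map<Long, Integer> countPerWindow(long[] timestampsMs, long sizeMs) {
        Map<Long, Integer> counts = new TreeMap<>();
        for (long ts : timestampsMs) {
            counts.merge(windowStart(ts, sizeMs), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        long size = 30_000; // 30-second windows
        System.out.println(countPerWindow(new long[] {1_000, 29_999, 30_000, 65_000}, size));
        // {0=2, 30000=1, 60000=1}
    }
}
```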

Aggregate data by user session (session windows)
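Session windows have no fixed size: a key's events stay in the same session as long as consecutive events are close enough, and a long pause (the inactivity gap) starts a new session. A sketch for one key's sorted timestamps; the `sessions` helper is hypothetical, treating a gap exactly equal to the inactivity gap as "same session" is an assumption, and real Kafka Streams or KSQL sessions are also merged when late events arrive, which this ignores.

```java
import java.util.ArrayList;
import java.util.List;

class SessionGrouping {
    // Splits sorted timestamps into sessions; each session is {start, end}.
    static List<long[]> sessions(long[] sortedTimestampsMs, long inactivityGapMs) {
        List<long[]> result = new ArrayList<>();
        for (long ts : sortedTimestampsMs) {
            if (!result.isEmpty() && ts - result.get(result.size() - 1)[1] <= inactivityGapMs) {
                result.get(result.size() - 1)[1] = ts; // extend the current session
            } else {
                result.add(new long[] {ts, ts});       // start a new session
            }
        }
        return result;
    }

    public static void main(String[] args) {
        long fiveMinutes = 5 * 60_000;
        long[] clicks = {0, 60_000, 120_000, 1_000_000, 1_100_000};
        for (long[] s : sessions(clicks, fiveMinutes)) {
            System.out.println("session " + s[0] + ".." + s[1]);
        }
    }
}
```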

KSQL

CREATE STREAM pageviews_enriched AS

SELECT pv.viewtime,

pv.userid AS userid,

pv.pageid,

pv.timestring,

u.gender,

u.regionid,

u.interests,

u.contactinfo

FROM pageviews_transformed pv

LEFT JOIN users_5part u ON pv.userid = u.userid

EMIT CHANGES;

KSQL

CREATE TABLE pageviews_per_region_per_30secs AS

SELECT regionid,

count(*)

FROM pageviews_enriched

WINDOW TUMBLING (SIZE 30 SECONDS)

WHERE UCASE(gender)='FEMALE' AND LCASE(regionid)='region_6'

GROUP BY regionid

EMIT CHANGES;

Where are streaming systems needed?

Monitoring! Logs!

Track user activity

Anomaly detection (including fraud detection)

Things to keep in mind

Schema changes: migration does not work the way it does in an RDBMS, because data already written to a topic cannot be altered.

Exactly-once delivery:

By default (at-least-once), a failure in Kafka Streams causes some data to be read and processed again.

Exactly-once mode covers only data moving between Kafka topics.
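In Kafka Streams, exactly-once processing is switched on with the `processing.guarantee` setting; reading input, updating state, and writing output topics are then committed together, but side effects in external systems are not covered. A config sketch; `exactly_once_v2` assumes Kafka 3.0 or later (older releases used `exactly_once`), and the application id, broker address, and class name are placeholders.

```java
import java.util.Properties;

class ExactlyOnceConfig {
    static Properties streamsProps() {
        Properties props = new Properties();
        props.put("application.id", "warehouse-ledger-app"); // placeholder id
        props.put("bootstrap.servers", "localhost:9092");    // placeholder address
        // The default is "at_least_once": after a failure, some records are reprocessed.
        props.put("processing.guarantee", "exactly_once_v2");
        return props;
    }

    public static void main(String[] args) {
        System.out.println(streamsProps().getProperty("processing.guarantee"));
    }
}
```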

If you need to keep all the data indefinitely:

Lambda architecture

"A streaming application is not only code, but also 3-4 years of support in production." Nikita Salnikov-Tarnovsky, https://2019.jokerconf.com/2019/talks/2qw2ljhlfoeiipjf0gfzzb/

Our plan


Kafka and JavaScript

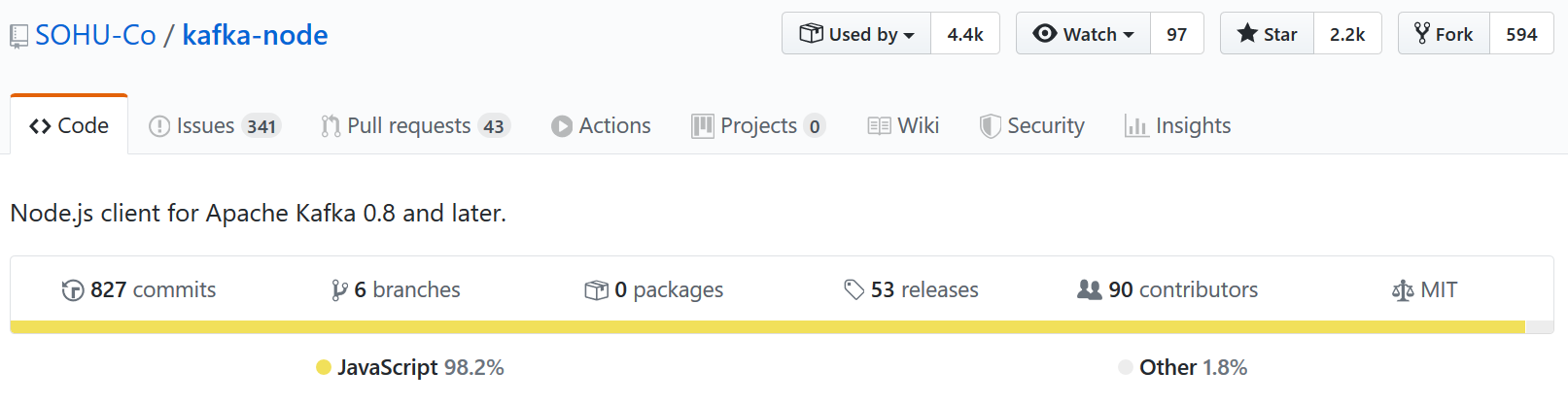

kafka-node

Leader in GitHub stars and usage

Pure JavaScript implementation

Limited feature set

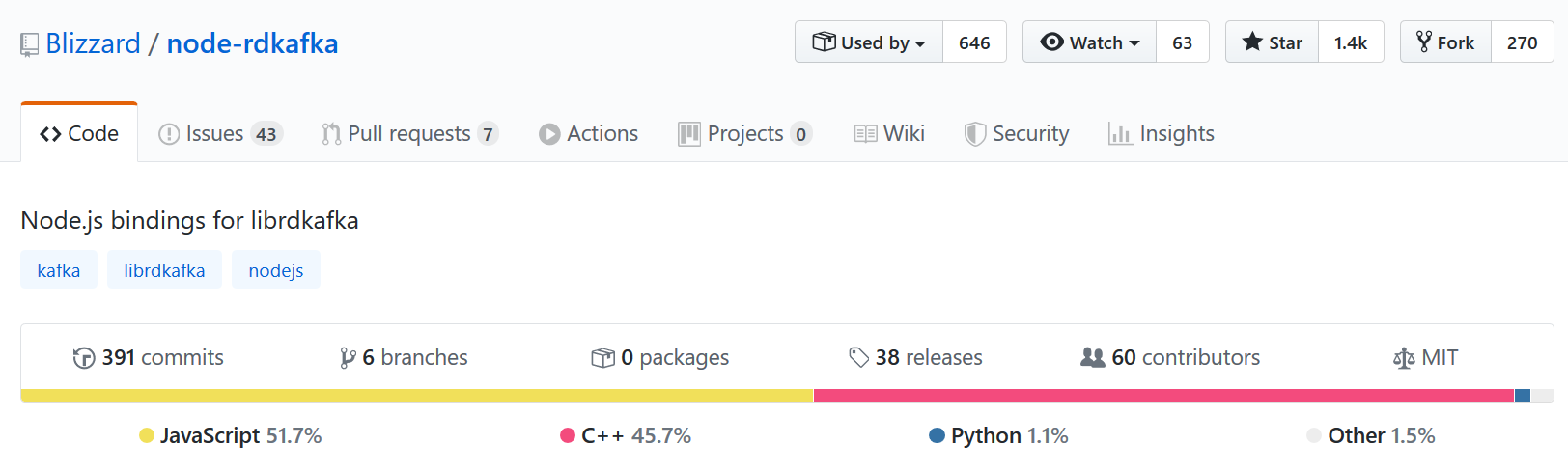

node-rdkafka

Lower GitHub metrics than kafka-node

JavaScript/C++

Wrapper around librdkafka, which is a very mature project

Able to work with Confluent Cloud

Transformation/output stage in Node.js?

KSQL + Serverless

Our plan


You decided to try Kafka. Where to start?

'kafkacat' is the best CLI tool

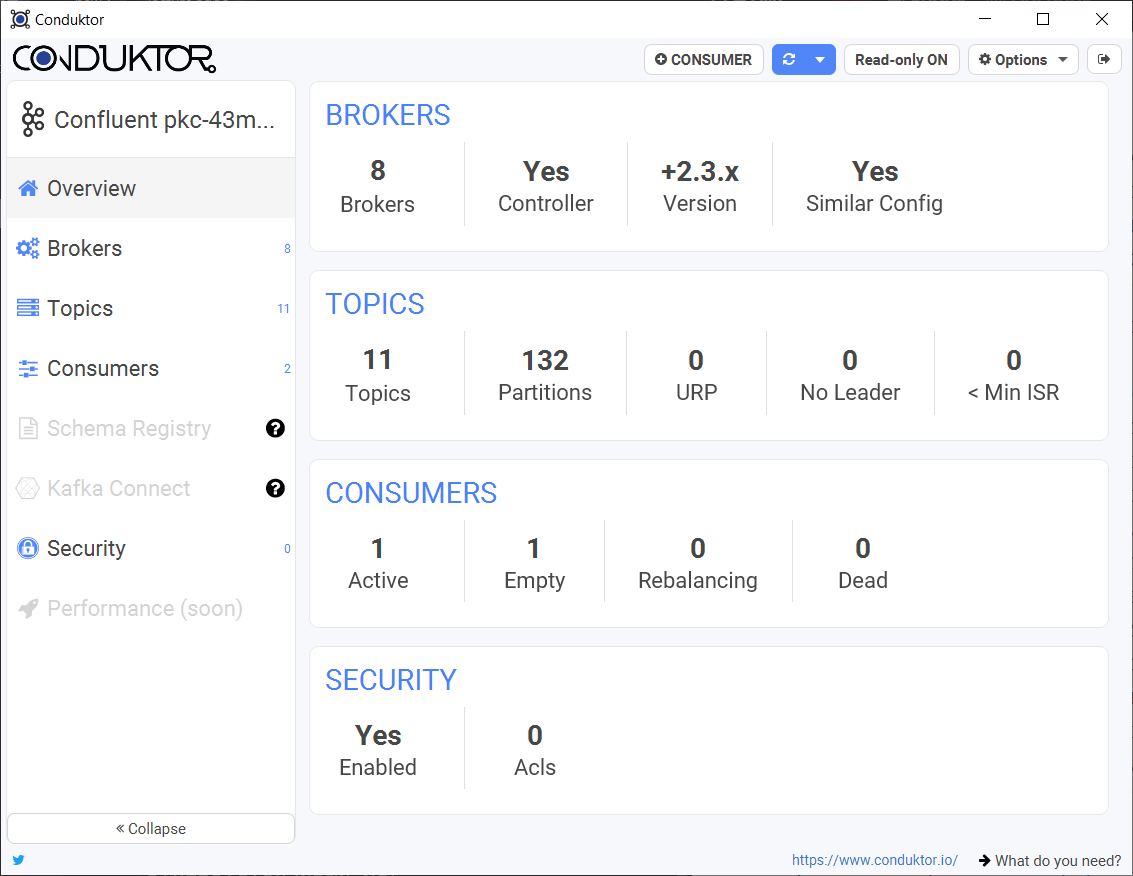

Conduktor — the best GUI tool

It’s hard to run Kafka in production


Kafka: The Definitive Guide


Communities, conferences

Kafka Summit Conference: https://kafka-summit.org/

Telegram

Grefnevaya Kafka

Meetup in Moscow

Moscow Apache Kafka® Meetup by Confluent — quarterly

That’s all!


Thanks!